El Carro: The Oracle Operator for Kubernetes

Run Oracle on Kubernetes with El Carro

El Carro is a new project for running Oracle databases on Kubernetes, a portable, open source, community-driven container orchestration system with no vendor lock-in. El Carro provides a declarative API for consistent configuration and deployment as well as for real-time operations and monitoring.

High Level Overview

El Carro helps you deploy and manage Oracle database software in Kubernetes. You must have appropriate licensing rights to use the Oracle software with El Carro (bring your own license, BYOL).

With the current release, you download the El Carro installation bundle, stage the Oracle installation software, create a containerized database image (with or without a seed database), and then create an Instance (known as CDB in Oracle parlance) and add one or more Databases (known as PDBs).

After the El Carro Instance and Database(s) are created, you can take snapshot-based or RMAN-based backups and get basic monitoring and logging information. Additional database services will be added in future releases.

License Notice

You can use El Carro to automatically provision and manage Oracle Database Express Edition (XE) or Oracle Database Enterprise Edition (EE). In each case, it is your responsibility to ensure that you have appropriate licenses to use any such Oracle software with El Carro.

Please also note that each El Carro “database” will create a pluggable database, which may require licensing of the Oracle Multitenant option.

Oracle and Java are registered trademarks of Oracle and/or its affiliates. Other names may be trademarks of their respective owners.

Quickstart

We recommend starting with the quickstart. As you become more familiar with El Carro, consider trying more advanced features by following the user guides linked below.

If you have a valid license for Oracle 12c EE and would like to get your Oracle database up and running on Kubernetes, you can follow this quickstart guide.

As an alternative to Oracle 12c EE, you can use Oracle 18c XE, which is free to use, by following the quickstart guide for Oracle 18c XE instead.

If you prefer to run El Carro locally on your personal computer, you can follow the user guide for Oracle on minikube.

Preparation

To prepare the El Carro download and deployment, follow this guide.

Provisioning

El Carro helps you to easily create, scale, and delete Oracle databases.

First, create a containerized database image.

You can optionally create a default Config to set namespace-wide defaults for configuring your databases, following this guide.

Then you can create Instances (known as CDBs in Oracle parlance), following this guide. Afterward, create Databases (known as PDBs) and users following this guide.
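As a rough illustration of the declarative model described above, the following Python sketch builds an Instance (CDB) manifest and a Database (PDB) manifest as plain dictionaries and prints them as JSON. The API group/version `oracle.db.anthos/v1alpha1`, the `spec` field names, the resource names `mydb` and `pdb1`, and the image reference are all assumptions made for illustration; they are not confirmed by this README. A real manifest needs additional fields (for example disks, credentials, and users), so treat the linked guides as the authoritative source.

```python
import json

# Assumed API group/version; verify against the CRDs installed by your El Carro bundle.
API_VERSION = "oracle.db.anthos/v1alpha1"


def instance_manifest(name: str, cdb_name: str, image: str) -> dict:
    """Build a minimal Instance (CDB) manifest as a plain dict (field names assumed)."""
    return {
        "apiVersion": API_VERSION,
        "kind": "Instance",
        "metadata": {"name": name},
        "spec": {
            "type": "Oracle",
            "cdbName": cdb_name,
            # Reference to the containerized database image built in the first step.
            "images": {"service": image},
        },
    }


def database_manifest(name: str, instance: str) -> dict:
    """Build a minimal Database (PDB) manifest that names its parent Instance."""
    return {
        "apiVersion": API_VERSION,
        "kind": "Database",
        "metadata": {"name": name},
        "spec": {"name": name, "instance": instance},
    }


if __name__ == "__main__":
    # Hypothetical names and image reference, for illustration only.
    inst = instance_manifest("mydb", "GCLOUD", "registry.example/oracle-12.2-ee-seeded:latest")
    pdb = database_manifest("pdb1", inst["metadata"]["name"])
    for manifest in (inst, pdb):
        print(json.dumps(manifest, indent=2))
```

Each printed document could be saved to its own file and applied with `kubectl apply -f`, which accepts JSON as well as YAML. The Database refers to its Instance by name, mirroring the CDB/PDB relationship.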

Backup and Recovery

El Carro provides both storage snapshot-based and Oracle-native RMAN-based backup and restore features to support your database backup and recovery strategy.

After the El Carro Instance and Database(s) are created, you can create storage snapshot-based backups, following this guide.

You can also create Oracle-native RMAN-based backups, following this guide.
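The two backup styles can be pictured as one Backup resource whose type field selects the mechanism. The sketch below is a minimal illustration under stated assumptions: the API group/version, the `type` values `Snapshot` and `Physical`, the `subType` field, and the names `mydb`, `snap1`, and `rman1` are recalled from sample manifests or invented for the example, and are not confirmed by this README. Follow the backup guides for the exact fields your release expects.

```python
import json

# Assumed API group/version; verify against the CRDs installed by your El Carro bundle.
API_VERSION = "oracle.db.anthos/v1alpha1"


def backup_manifest(name: str, instance: str, rman: bool = False) -> dict:
    """Build a minimal Backup manifest (field names and values are assumptions).

    rman=False requests a storage snapshot-based backup;
    rman=True requests an RMAN-based backup.
    """
    return {
        "apiVersion": API_VERSION,
        "kind": "Backup",
        "metadata": {"name": name},
        "spec": {
            "instance": instance,
            "type": "Physical" if rman else "Snapshot",
            "subType": "Instance",
        },
    }


if __name__ == "__main__":
    # Hypothetical backup and instance names, for illustration only.
    print(json.dumps(backup_manifest("snap1", "mydb"), indent=2))
    print(json.dumps(backup_manifest("rman1", "mydb", rman=True), indent=2))
```

Real snapshot backups typically also need storage-specific settings such as a volume snapshot class, and RMAN backups take further options; both are omitted here.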

To restore from a backup, follow this guide.

Data Import & Export

El Carro provides data import/export features based on Oracle Data Pump.

To import data to your El Carro database, follow this guide.

To export data from your El Carro database, follow this guide.

What's More?

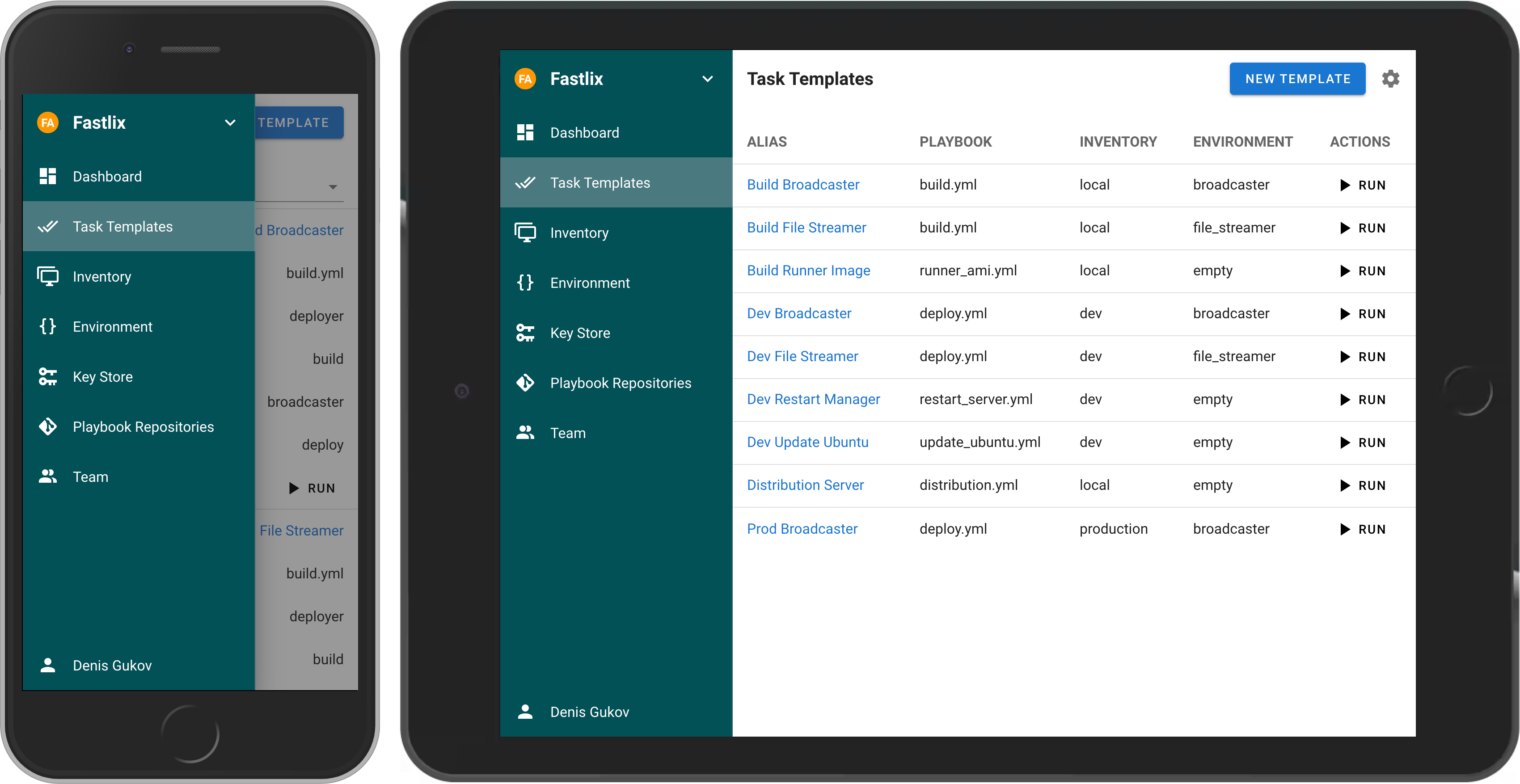

El Carro supports more features than those listed here, with others to be added soon. For more information, see the guides on logging, monitoring, connectivity, the UI, and more.

Contributing

You're very welcome to contribute to the El Carro Project!

We've put together a set of contributing and development guidelines that you can review in this guide.

Support

To report a bug or log a feature request, please open a GitHub issue and follow the guidelines for submitting a bug.

For general questions or community support, we welcome you to join the El Carro community mailing list and ask your question there.