Crawlab


Installation | Run | Screenshot | Architecture | Integration | Compare | Community & Sponsorship | CHANGELOG | Disclaimer

A Golang-based distributed web crawler management platform that supports various languages, including Python, NodeJS, Go, Java and PHP, and various web crawler frameworks, including Scrapy, Puppeteer and Selenium.

Installation

There are three installation methods:

- Docker (Recommended)

- Direct Deploy (to understand the internals)

- Kubernetes (Multi-Node Deployment)

Prerequisites (Docker)

- Docker 18.03+

- Redis 5.x+

- MongoDB 3.6+

- Docker Compose 1.24+ (optional but recommended)

Prerequisites (Direct Deploy)

- Go 1.12+

- Node 8.12+

- Redis 5.x+

- MongoDB 3.6+

Quick Start

Open a terminal and run the commands below. Make sure docker-compose is installed beforehand.

```bash
git clone https://github.com/crawlab-team/crawlab
cd crawlab
docker-compose up -d
```

Next, see docker-compose.yml (which describes the configuration parameters in detail) and the Documentation (Chinese) for further information.

Run

Docker

Use docker-compose to start everything with a single command. This way, you don't even need to configure the MongoDB and Redis databases yourself. Create a file named docker-compose.yml with the content below.

```yaml
version: '3.3'
services:
  master:
    image: tikazyq/crawlab:latest
    container_name: master
    environment:
      CRAWLAB_SERVER_MASTER: "Y"
      CRAWLAB_MONGO_HOST: "mongo"
      CRAWLAB_REDIS_ADDRESS: "redis"
    ports:
      - "8080:8080"
    depends_on:
      - mongo
      - redis
  mongo:
    image: mongo:latest
    restart: always
    ports:
      - "27017:27017"
  redis:
    image: redis:latest
    restart: always
    ports:
      - "6379:6379"
```

Then run the command below to start the Crawlab Master Node, MongoDB and Redis. Open http://localhost:8080 in your browser to see the UI.

```bash
docker-compose up
```
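The containers can take a little while to become ready. If you script the startup, a small polling helper can wait until the UI responds before you open the browser or run further steps. This is a minimal sketch using only the Python standard library; `wait_for_ui` is a hypothetical helper, not part of Crawlab, and it only checks that the URL answers with a 2xx or 3xx status.

```python
import http.client
import time
import urllib.request


def wait_for_ui(url: str = "http://localhost:8080", timeout: float = 60.0, interval: float = 2.0) -> bool:
    """Poll `url` until it answers with HTTP 2xx/3xx, or give up after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # urlopen raises HTTPError (an OSError subclass) for 4xx/5xx responses
            with urllib.request.urlopen(url, timeout=interval) as resp:
                if 200 <= resp.status < 400:
                    return True
        except (OSError, http.client.HTTPException):
            pass  # not ready yet: connection refused/reset, timeout, or a half-started server
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)
```

For example, after `docker-compose up -d`, calling `wait_for_ui(timeout=120)` blocks until the Master Node's UI is reachable or two minutes have passed.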

For details on Docker deployment, please refer to the relevant documentation.

Screenshot

Login

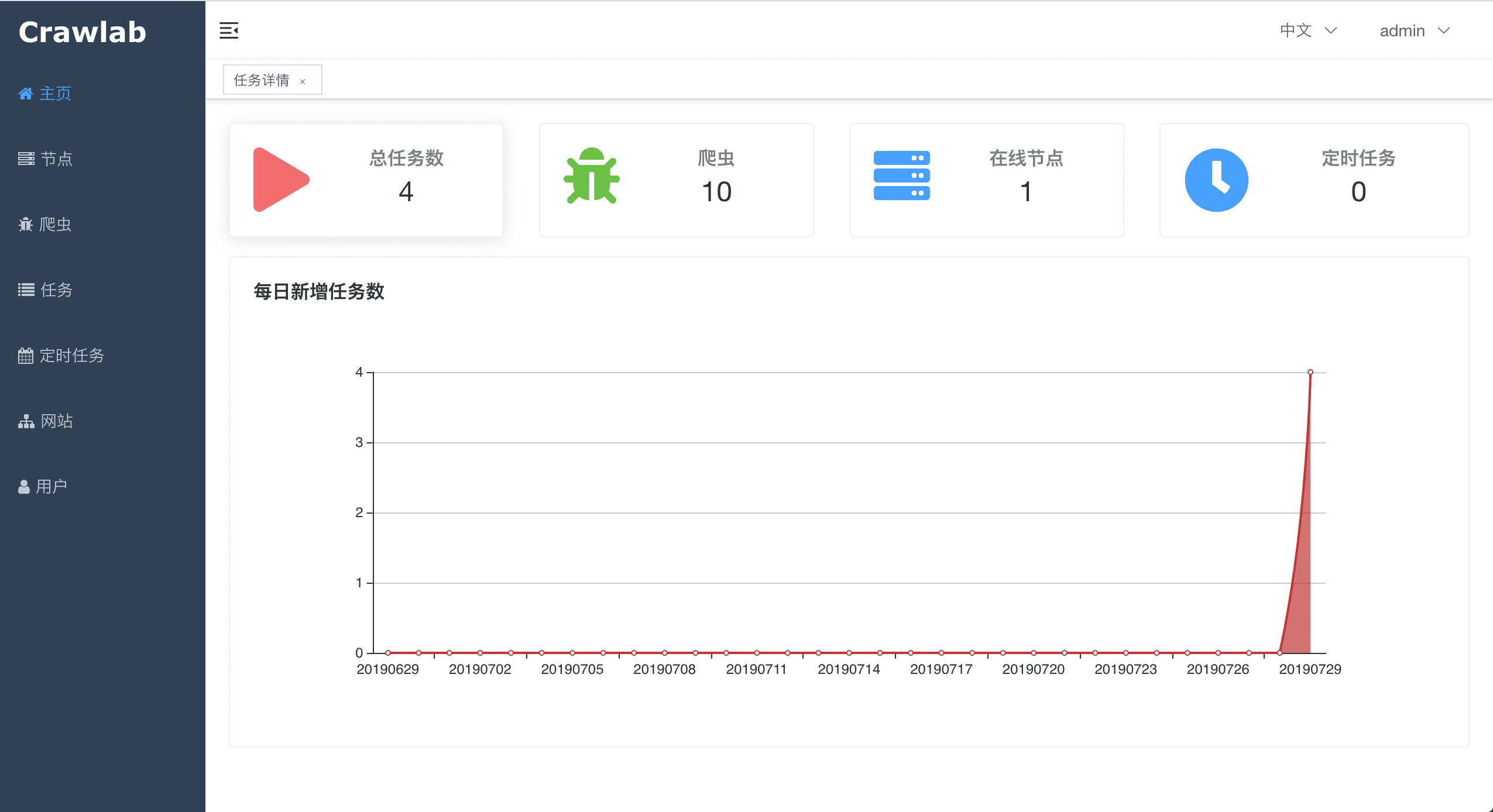

Home Page

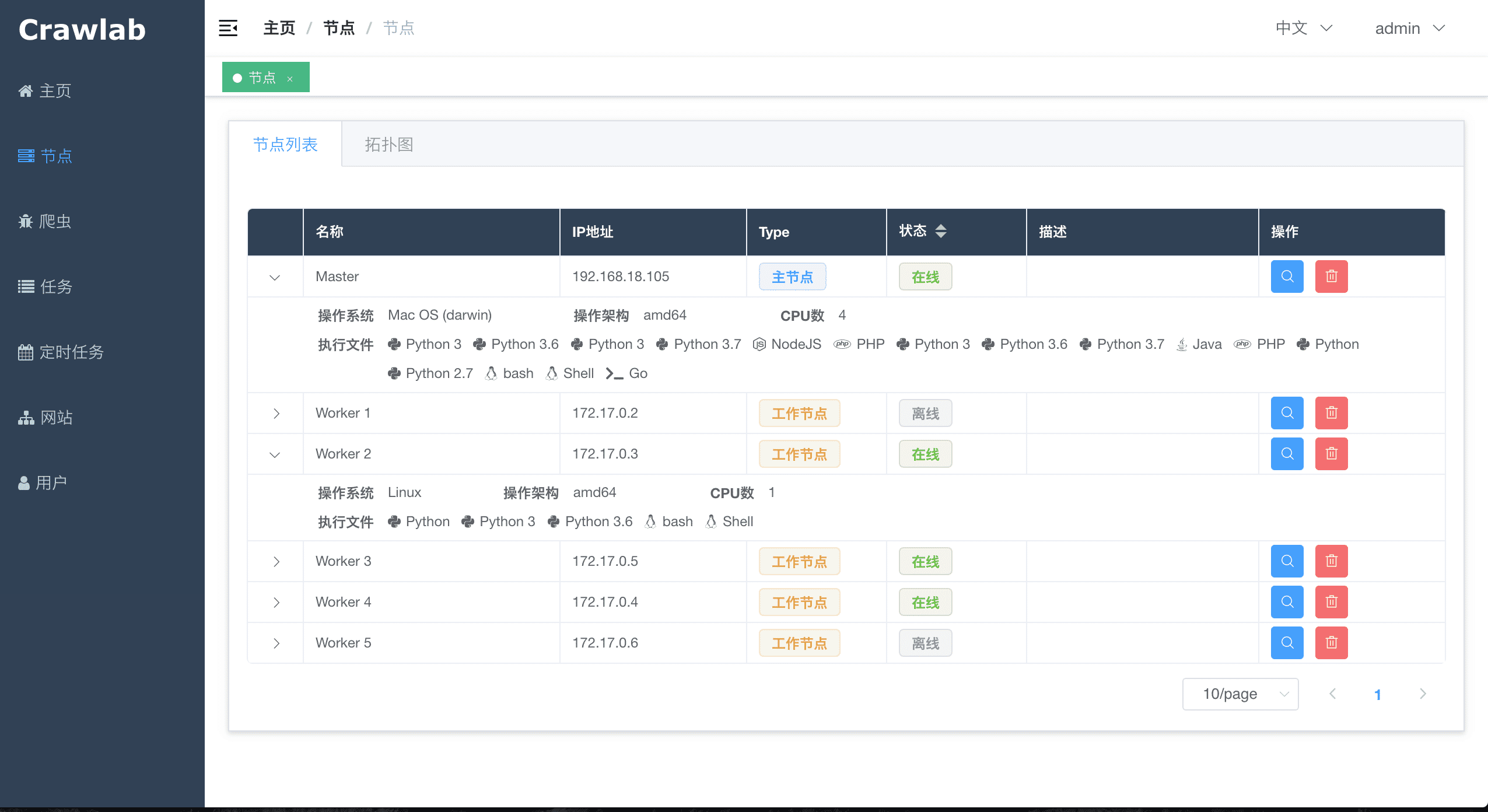

Node List

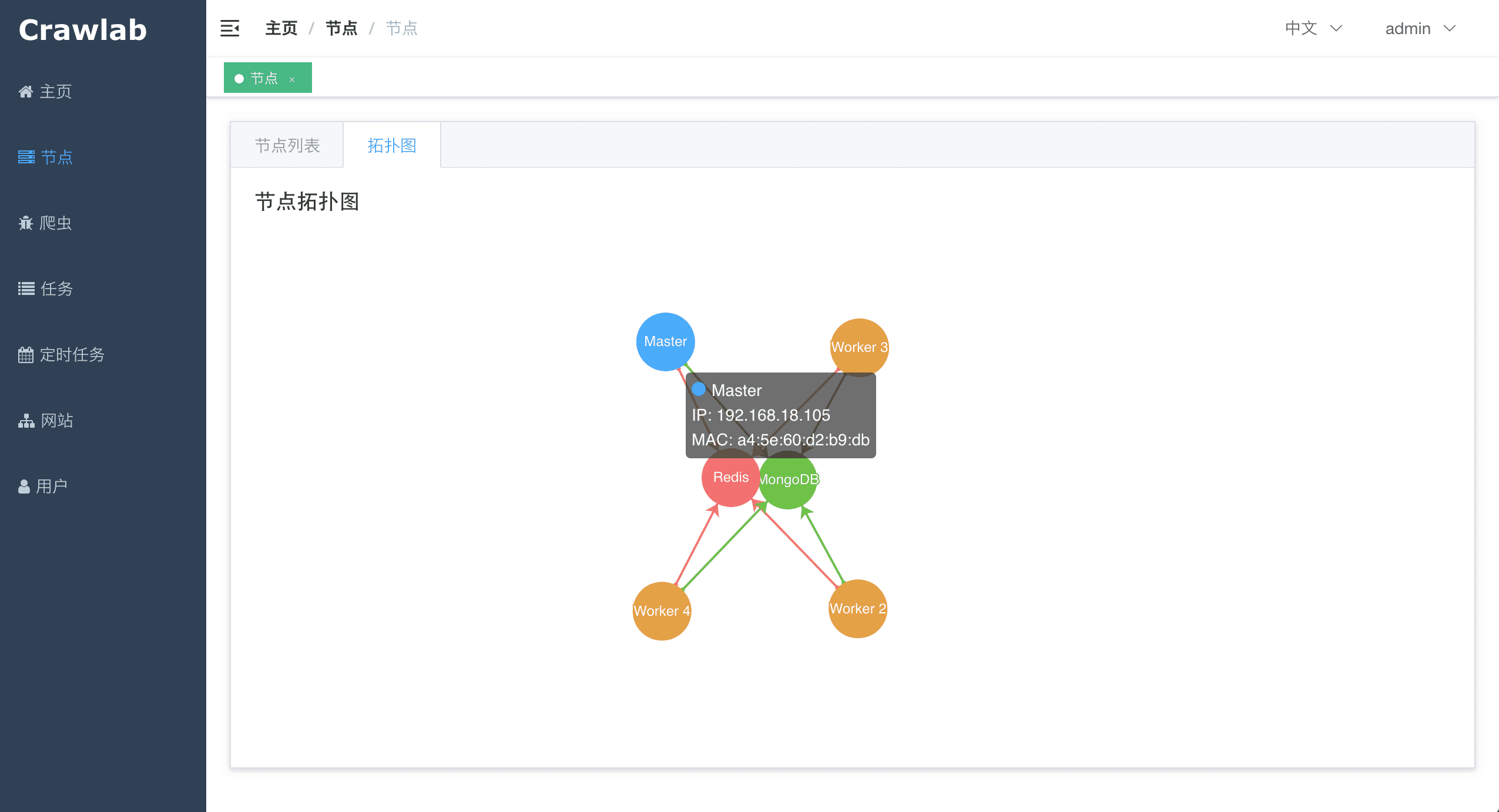

Node Network

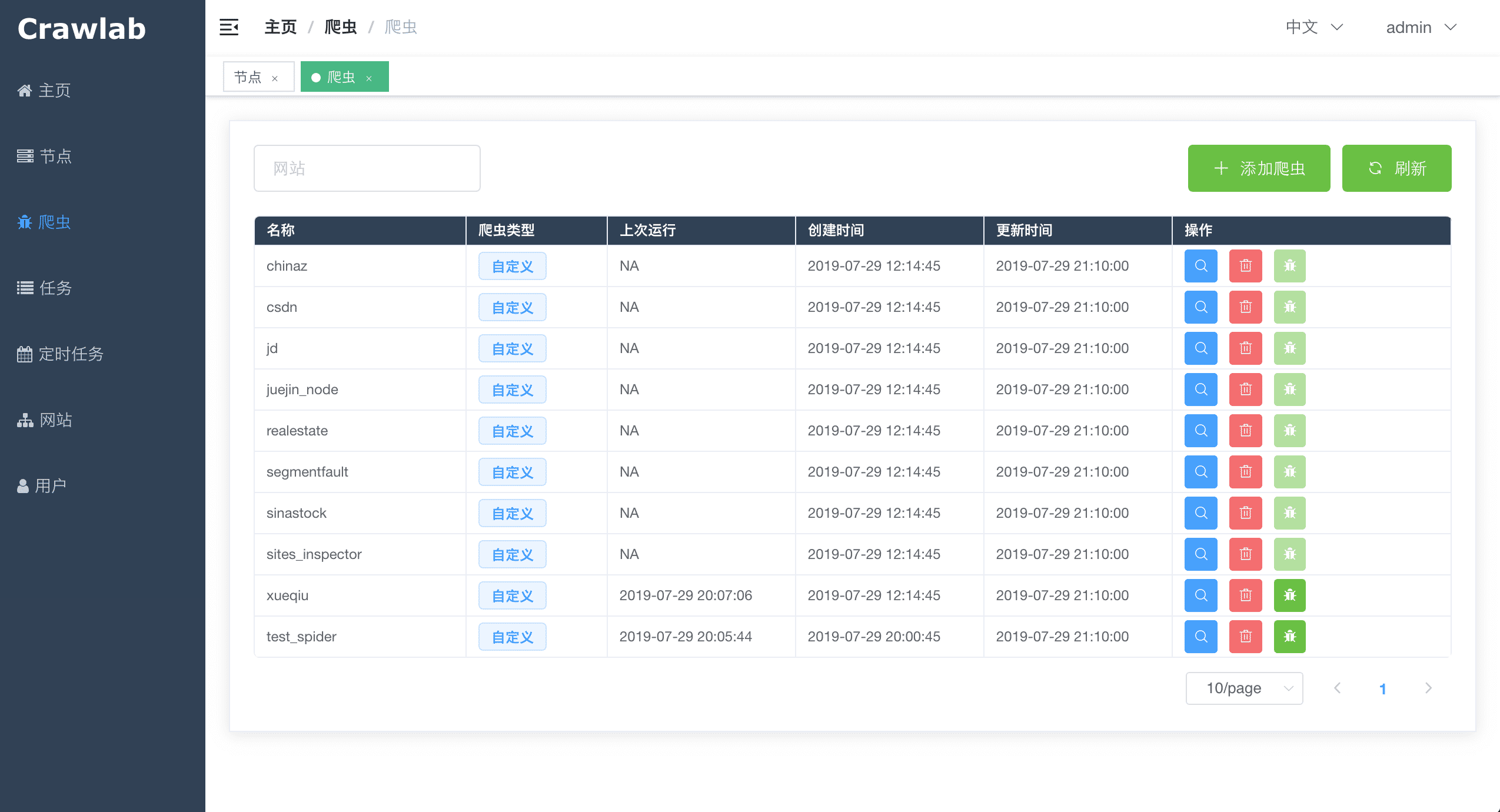

Spider List

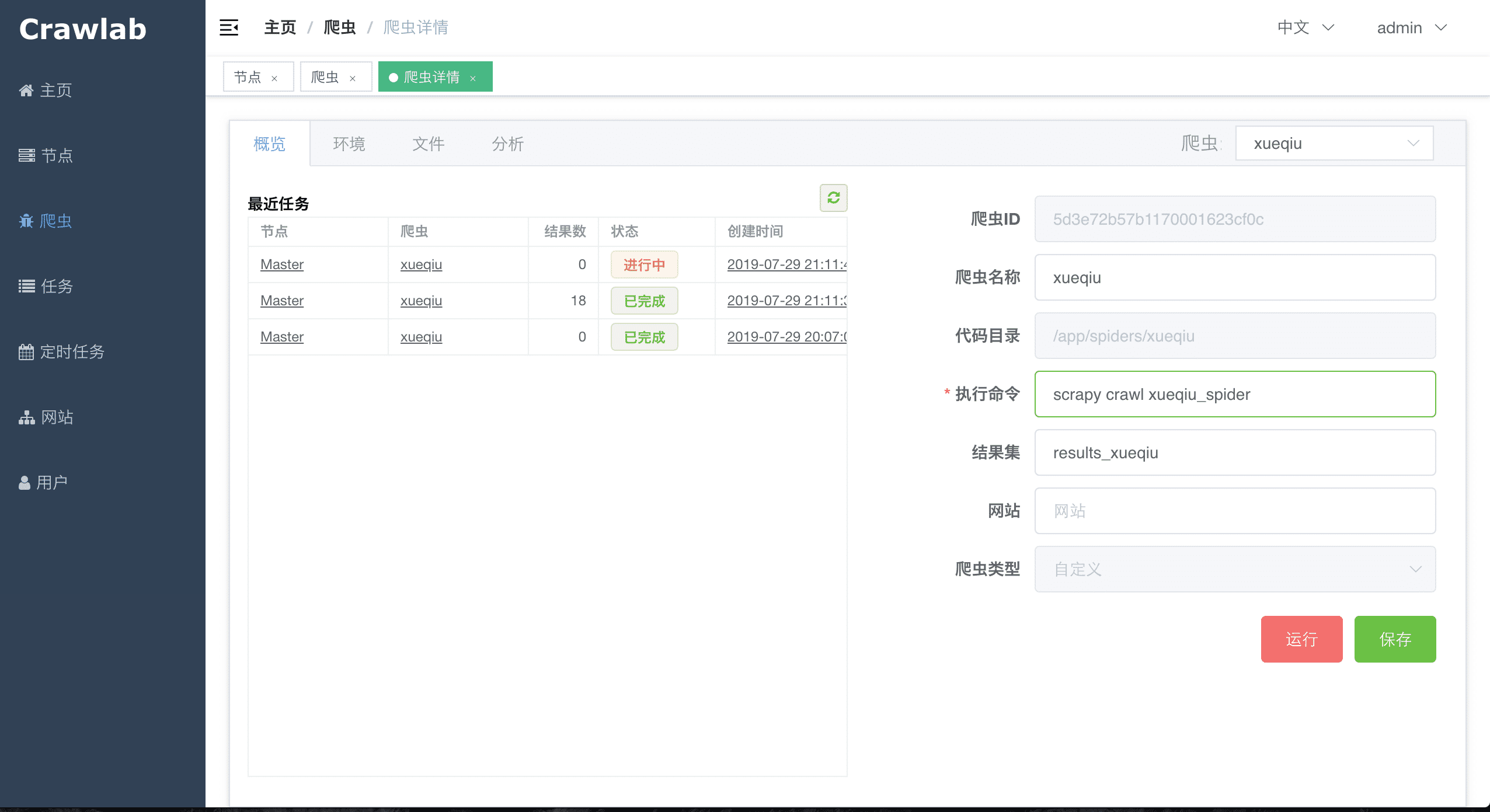

Spider Overview

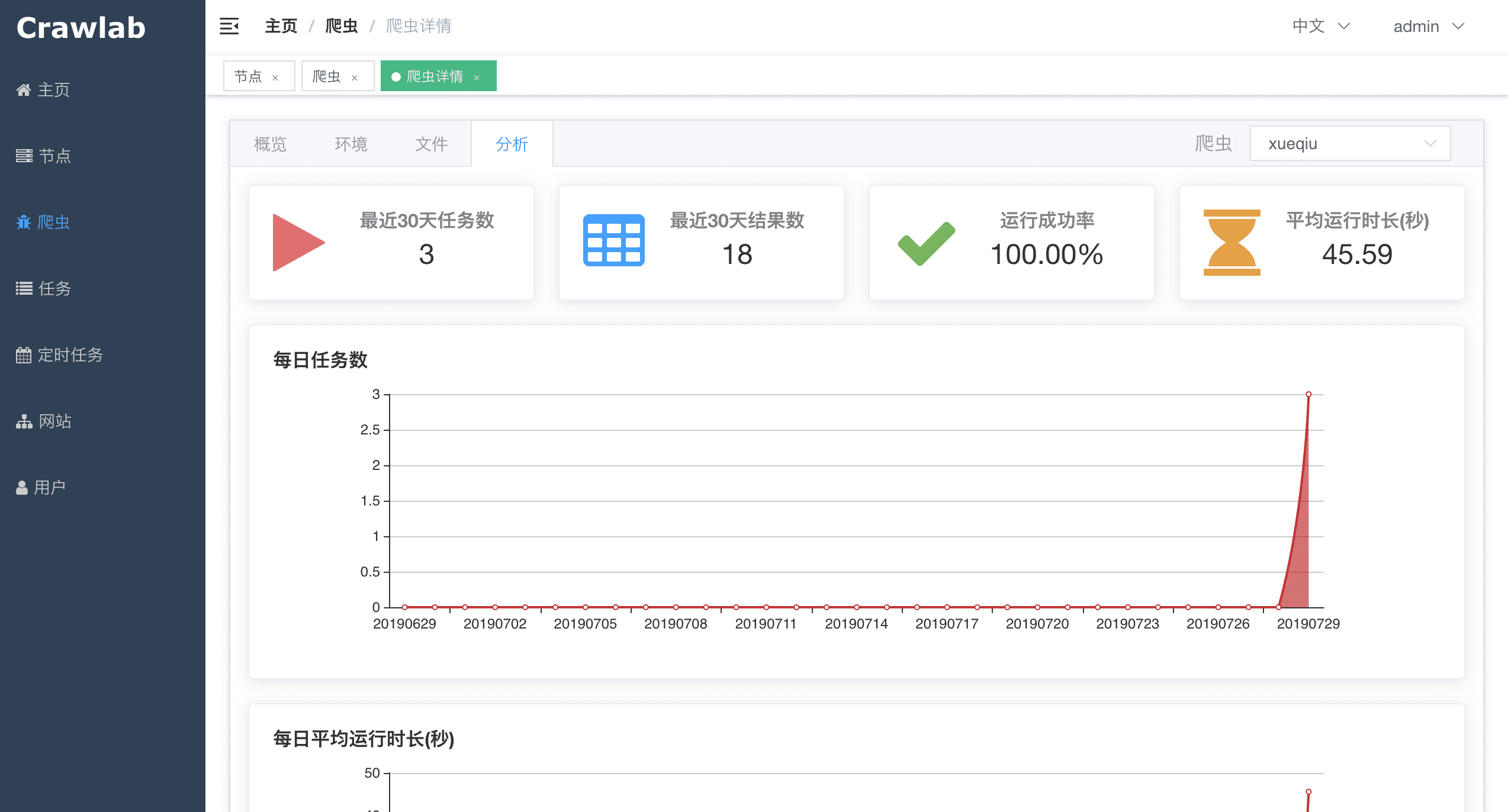

Spider Analytics

Spider File Edit

Task Log

Task Results

Cron Job

Language Installation

Dependency Installation

Notifications

Architecture

The Crawlab architecture consists of a Master Node, multiple Worker Nodes, and the Redis and MongoDB databases, which are mainly used for node communication and data storage.

The frontend app makes requests to the Master Node, which assigns tasks and deploys spiders through MongoDB and Redis. When a Worker Node receives a task, it executes the crawling task and stores the results in MongoDB. The architecture is much more concise than in versions before v0.3.0: the unnecessary Flower module, which provided node monitoring, has been removed, and node monitoring is now handled by Redis.
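The task flow above can be pictured with a toy, in-memory model: the Master Node pushes task descriptions onto a shared queue (the role Redis plays), and each Worker Node pops a task, runs it and writes a result record (the role MongoDB plays). This is an illustrative sketch only; `ToyCluster`, its fields and the record layout are hypothetical and do not reflect Crawlab's actual Redis keys or MongoDB schemas.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class ToyCluster:
    """In-memory stand-ins: `queue` plays the Redis task queue, `results` plays MongoDB."""
    queue: deque = field(default_factory=deque)
    results: dict = field(default_factory=dict)

    def assign(self, task_id: str, spider: str) -> None:
        # Master Node: push a task description onto the shared queue
        self.queue.append({"task_id": task_id, "spider": spider})

    def work_once(self, node: str) -> bool:
        # Worker Node: pop one task if available, "run" it, and store a result record
        if not self.queue:
            return False
        task = self.queue.popleft()
        self.results[task["task_id"]] = {"node": node, "spider": task["spider"], "status": "finished"}
        return True
```

Because every worker pulls from the same queue, adding workers lets more tasks run in parallel, which is the horizontal scaling described under Worker Node.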

Master Node

The Master Node is the core of the Crawlab architecture and serves as its central control system.

The Master Node provides the following services:

- Crawling Task Coordination;

- Worker Node Management and Communication;

- Spider Deployment;

- Frontend and API Services;

- Task Execution (the Master Node can also act as a Worker Node)

The Master Node communicates with the frontend app and sends crawling tasks to Worker Nodes. Meanwhile, it synchronizes (deploys) spiders to Worker Nodes via Redis and MongoDB GridFS.

Worker Node

The main job of the Worker Nodes is to execute crawling tasks, store results and logs, and communicate with the Master Node through Redis PubSub. By adding more Worker Nodes, Crawlab can scale horizontally, and different crawling tasks can be assigned to different nodes.
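Master/worker messaging over Redis PubSub could look roughly like the sketch below: the master publishes a JSON command on a per-node channel, and the worker routes each received message to a handler. The channel name `nodes:<node_id>`, the JSON message shape and the helper names are assumptions for illustration; only `publish` and the message dicts returned by redis-py's `PubSub.get_message()` (with `type`, `channel` and `data` keys) come from redis-py itself.

```python
import json


def send_command(client, node_id: str, command: str, **payload) -> None:
    """Master side: publish a JSON command on the hypothetical channel `nodes:<node_id>`."""
    message = json.dumps({"command": command, "payload": payload})
    client.publish(f"nodes:{node_id}", message)


def dispatch(message, handlers: dict):
    """Worker side: route one message dict (as returned by PubSub.get_message()) to a handler."""
    if message is None or message.get("type") != "message":
        return None  # ignore empty polls and subscribe confirmations
    data = message["data"]
    if isinstance(data, bytes):  # redis-py returns bytes unless decode_responses=True
        data = data.decode("utf-8")
    body = json.loads(data)
    handler = handlers.get(body["command"])
    return handler(**body["payload"]) if handler else None
```

With a real server, `client` would be a `redis.Redis()` instance, and the worker would call `p = client.pubsub()`, then `p.subscribe("nodes:worker-1")`, and pass each `p.get_message()` result to `dispatch`.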

MongoDB

MongoDB is the operational database of Crawlab. It stores data about nodes, spiders, tasks, schedules, etc. The MongoDB GridFS file system is the medium through which the Master Node stores spider files and synchronizes them to the Worker Nodes.
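One way to picture the GridFS-based synchronization: the master zips a spider directory, stores the archive in GridFS, and a worker later fetches it by its file id and extracts it. This is a hedged sketch, not Crawlab's implementation; the zip packaging and the helper names are assumptions. `fs` is expected to behave like pymongo's `gridfs.GridFS(db)`, whose `put(data, filename=...)` returns a file id and whose `get(file_id)` returns a readable file.

```python
import io
import zipfile
from pathlib import Path


def pack_spider(spider_dir: str) -> bytes:
    """Zip every file under spider_dir into an in-memory archive."""
    buf = io.BytesIO()
    root = Path(spider_dir)
    with zipfile.ZipFile(buf, "w", zipfile.ZIP_DEFLATED) as zf:
        for path in sorted(root.rglob("*")):
            if path.is_file():
                # store paths relative to the spider root, with forward slashes
                zf.write(path, path.relative_to(root).as_posix())
    return buf.getvalue()


def upload_spider(fs, name: str, spider_dir: str):
    """Master side: store the zipped spider in GridFS and return its file id."""
    return fs.put(pack_spider(spider_dir), filename=f"{name}.zip")


def download_spider(fs, file_id, target_dir: str) -> list:
    """Worker side: fetch the archive by id, extract it, and return the extracted names."""
    data = fs.get(file_id).read()
    with zipfile.ZipFile(io.BytesIO(data)) as zf:
        zf.extractall(target_dir)
        return zf.namelist()
```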

Redis

Redis is a popular key-value database. In Crawlab, it provides node communication services. For example, each node executes HSET to write its info into a hash named nodes in Redis, and the Master Node identifies online nodes from that hash.
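The HSET-based node registry can be sketched as follows: each node periodically writes a JSON record with a heartbeat timestamp into the `nodes` hash, and the master treats nodes with a recent heartbeat as online. Only the hash name `nodes` comes from the description above; the JSON fields, the `updated_at` heartbeat and the 30-second timeout are assumptions. `client` is expected to be a redis-py `redis.Redis` instance (or anything with the same `hset`/`hgetall` methods).

```python
import json
import time


def report_node(client, node_id: str, info: dict, now=None) -> None:
    """Node side: write this node's info plus a heartbeat timestamp into the `nodes` hash."""
    record = dict(info, updated_at=time.time() if now is None else now)
    client.hset("nodes", node_id, json.dumps(record))


def online_nodes(client, timeout: float = 30.0, now=None) -> list:
    """Master side: nodes whose last heartbeat is at most `timeout` seconds old count as online."""
    now = time.time() if now is None else now
    online = []
    for key, value in client.hgetall("nodes").items():
        # redis-py returns bytes unless the client was created with decode_responses=True
        key = key.decode() if isinstance(key, bytes) else key
        value = value.decode() if isinstance(value, bytes) else value
        if now - json.loads(value)["updated_at"] <= timeout:
            online.append(key)
    return sorted(online)
```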

Frontend

The frontend is a single-page application (SPA) based on Vue-Element-Admin. It reuses many Element-UI components for its display.

Integration with Other Frameworks

Crawlab SDK provides some helper methods to make it easier for you to integrate your spiders into Crawlab, e.g. saving results.

Note: make sure you have installed crawlab-sdk using pip.

Scrapy

In settings.py of your Scrapy project, find the variable named ITEM_PIPELINES (a dict) and add the entry below.

```python
ITEM_PIPELINES = {
    'crawlab.pipelines.CrawlabMongoPipeline': 888,
}
```
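An ITEM_PIPELINES entry points to a class implementing Scrapy's item pipeline hooks (`open_spider`, `process_item`, `close_spider`), and the number (888 here) sets its order relative to other pipelines, with lower values running first. The sketch below is a hypothetical pipeline in that spirit: it tags each item with the `CRAWLAB_TASK_ID` environment variable and inserts it into the collection named by `CRAWLAB_COLLECTION`. It is not the source of `crawlab.pipelines.CrawlabMongoPipeline`; the `task_id` field name and the `MONGO_URI`/`MONGO_DB` settings are assumptions.

```python
import os


class TaskTaggingMongoPipeline:
    """Hypothetical Scrapy pipeline: tag items with the Crawlab task ID and store them in MongoDB.

    `collection` may be injected (e.g. in tests); otherwise open_spider connects with pymongo
    using the assumed MONGO_URI / MONGO_DB settings.
    """

    def __init__(self, collection=None):
        self.collection = collection
        self.client = None

    def open_spider(self, spider):
        if self.collection is None:
            import pymongo  # imported lazily so the class works without pymongo when injected
            settings = spider.settings
            self.client = pymongo.MongoClient(settings.get("MONGO_URI", "mongodb://localhost:27017"))
            db = self.client[settings.get("MONGO_DB", "crawlab_test")]
            self.collection = db[os.environ.get("CRAWLAB_COLLECTION", "results")]

    def close_spider(self, spider):
        if self.client is not None:
            self.client.close()

    def process_item(self, item, spider):
        record = dict(item)  # copy, so insert_one's added _id does not leak into the item
        record["task_id"] = os.environ.get("CRAWLAB_TASK_ID")
        self.collection.insert_one(record)
        return item  # pass the item on to later pipelines
```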

Then start the Scrapy spider. When it finishes, you should see the scraped results in Task Detail -> Result.

General Python Spider

Add the following code to your spider files to save results.

```python
# import result saving method
from crawlab import save_item

# this is a result record, must be dict type
result = {'name': 'crawlab'}

# call result saving method
save_item(result)
```

Then start the spider. When it finishes, you should see the scraped results in Task Detail -> Result.

Other Frameworks / Languages

A crawling task is actually executed as a shell command. The Task ID is passed to the crawling process as an environment variable named CRAWLAB_TASK_ID, so that the scraped data can be associated with the task. Crawlab also passes another environment variable, CRAWLAB_COLLECTION, which holds the name of the collection in which to store the results.
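Both sides of that contract fit in a few lines. The sketch below plays the launcher role with `subprocess.run`, passing CRAWLAB_TASK_ID and CRAWLAB_COLLECTION in the child's environment, and includes a tiny spider script that reads them and tags its record. `run_task` and `SPIDER_SNIPPET` are hypothetical names; Crawlab's actual task runner is not shown here, and for portability the sketch launches an argument list rather than going through a shell. A spider in Node.js, Go, Java or PHP would read the same variables through its own environment API.

```python
import os
import subprocess
import sys


def run_task(command: list, task_id: str, collection: str) -> subprocess.CompletedProcess:
    """Launcher side: run a spider command with the two Crawlab variables in its environment."""
    env = dict(os.environ, CRAWLAB_TASK_ID=task_id, CRAWLAB_COLLECTION=collection)
    return subprocess.run(command, env=env, capture_output=True, text=True, check=True)


# Spider side: read both variables, tag the record with the task ID, and report the target collection.
SPIDER_SNIPPET = (
    "import json, os\n"
    "record = {'name': 'crawlab', 'task_id': os.environ['CRAWLAB_TASK_ID']}\n"
    "print(os.environ['CRAWLAB_COLLECTION'], json.dumps(record))\n"
)
```

For example, `run_task([sys.executable, "-c", SPIDER_SNIPPET], "task-42", "results_demo")` runs the snippet as a child process, whose output names the collection and shows the record tagged with task-42.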

Comparison with Other Frameworks

Several spider management frameworks already exist. So why use Crawlab?

The reason is that most existing platforms depend on Scrapyd, which limits the choice to Python and Scrapy. Scrapy is certainly a great web crawling framework, but it cannot do everything.

Crawlab is easy to use and general enough to accommodate spiders written in any language and with any framework. It also has a beautiful frontend interface that makes managing spiders much easier.

| Framework | Technology | Pros | Cons |
|---|---|---|---|
| Crawlab | Golang + Vue | Not limited to Scrapy; works with all programming languages and frameworks. Beautiful UI. Natively supports distributed spiders. Supports spider management, task management, cron jobs, result export, analytics, notifications, configurable spiders, an online code editor, etc. | Does not yet support spider versioning. |
| ScrapydWeb | Python Flask + Vue | Beautiful UI, built-in Scrapy log parser, stats and graphs for task execution; supports node management, cron jobs, mail notifications and mobile. A full-featured spider management platform. | Does not support spiders other than Scrapy. Limited performance due to the Python Flask backend. |
| Gerapy | Python Django + Vue | Built by web crawler guru Germey Cui. Simple installation and deployment. Beautiful UI. Supports node management, code editing, configurable crawl rules, etc. | Again, does not support spiders other than Scrapy. Many bugs reported by users in v1.0; improvements are expected in v2.0. |
| SpiderKeeper | Python Flask | Open-source alternative to Scrapinghub. Concise and simple UI. Supports cron jobs. | Perhaps too simple: no pagination, no node management, no support for spiders other than Scrapy. |

Contributors

Community & Sponsorship

If you feel Crawlab could benefit your daily work or your company, please add the author's WeChat account with the note "Crawlab" to join the discussion group. Or you can scan the Alipay QR code below to donate toward upgrading our teamwork software or buying us a coffee.