What happened:

Background:

Microsoft recently added systemd support to the Windows Subsystem for Linux (WSL2). Docker, virtualization in general, and Kubernetes distributions such as kind, minikube and microk8s now work with it. Out of curiosity, I therefore tested whether KubeEdge now works out of the box on WSL2 with systemd enabled.

Situation:

I can successfully join WSL2 machines to my K8s cluster as edge nodes, and they are in Ready state.

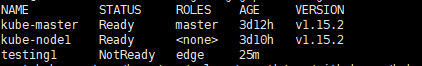

    $ kubectl get nodes
    NAME     STATUS   ROLES                  AGE    VERSION
    bagel    Ready    control-plane,master   57d    v1.22.0
    mammut   Ready    agent,edge             114m   v1.22.6-kubeedge-v1.11.1

However, after scheduling, the pods get stuck in ContainerCannotRun status. Every pod shows almost the same error message, with the following structure:

    Reason:  ContainerCannotRun
    Message: xyz is mounted on xyz but it is not a shared or slave mount

Here is an example description of a pod stuck in ContainerCannotRun state:


    $ kubectl describe pod nginx-main-575658f585-9n2wz
    Name:             nginx-main-575658f585-9n2wz
    Namespace:        default
    Priority:         0
    Service Account:  default
    Node:             mammut/172.19.86.74
    Start Time:       Tue, 03 Jan 2023 17:22:03 +0100
    Labels:           app=nginx-main
                      pod-template-hash=575658f585
    Annotations:      <none>
    Status:           Running
    IP:               172.17.0.7
    IPs:
      IP:           172.17.0.7
    Controlled By:  ReplicaSet/nginx-main-575658f585
    Containers:
      nginx:
        Container ID:   docker://2053e6a2203c95f057169908e1cadcb536f176e1f68d327eafd4b3e7aef460c2
        Image:          nginx
        Image ID:       docker-pullable://nginx@sha256:0047b729188a15da49380d9506d65959cce6d40291ccfb4e039f5dc7efd33286
        Port:           <none>
        Host Port:      <none>
        State:          Terminated
          Reason:       ContainerCannotRun
          Message:      path /var/lib/edged/pods/5e3a126c-b834-48cb-90d9-77d9fdd0c0e1/volumes/kubernetes.io~projected/kube-api-access-jzfvz is mounted on /var/lib/edged/pods/5e3a126c-b834-48cb-90d9-77d9fdd0c0e1/volumes/kubernetes.io~projected/kube-api-access-jzfvz but it is not a shared or slave mount
          Exit Code:    128
          Started:      Tue, 03 Jan 2023 17:57:54 +0100
          Finished:     Tue, 03 Jan 2023 17:57:54 +0100
        Last State:     Terminated
          Reason:       ContainerCannotRun
          Message:      path /var/lib/edged/pods/5e3a126c-b834-48cb-90d9-77d9fdd0c0e1/volumes/kubernetes.io~projected/kube-api-access-jzfvz is mounted on /var/lib/edged/pods/5e3a126c-b834-48cb-90d9-77d9fdd0c0e1/volumes/kubernetes.io~projected/kube-api-access-jzfvz but it is not a shared or slave mount
          Exit Code:    128
          Started:      Tue, 03 Jan 2023 17:57:54 +0100
          Finished:     Tue, 03 Jan 2023 17:57:54 +0100
        Ready:          False
        Restart Count:  11
        Environment:    <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-jzfvz (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             False
      PodScheduled      True
    Volumes:
      kube-api-access-jzfvz:
        Type:                    Projected (a volume that contains injected data from multiple sources)
        TokenExpirationSeconds:  3607
        ConfigMapName:           kube-root-ca.crt
        ConfigMapOptional:       <nil>
        DownwardAPI:             true
    QoS Class:                   BestEffort
    Node-Selectors:              ip=172.19.86.74
    Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                                 node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
    Events:
      Type    Reason     Age   From               Message
      ----    ------     ----  ----               -------
      Normal  Scheduled  39m   default-scheduler  Successfully assigned default/nginx-main-575658f585-9n2wz to mammut
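
The Message above says the projected volume path lives on a mount that is neither shared nor slave, i.e. its mount propagation appears to be private. As a diagnostic on the edge node, the following Python sketch finds the mount containing a given path in /proc/self/mountinfo and reports its propagation from the optional `shared:N` / `master:N` tags. It assumes Docker's check is equivalent to looking for those tags; the script name `check_propagation.py` and all helper names are hypothetical, and octal escapes in mount points are not decoded.

```python
import os
import sys


def parse_mountinfo_line(line):
    """Return (mount_point, optional_fields) for one mountinfo line."""
    fields = line.split()
    # Field 5 (index 4) is the mount point; optional fields such as
    # "shared:1" or "master:3" start at index 6 and end at the "-" separator.
    sep = fields.index("-", 6)
    return fields[4], fields[6:sep]


def propagation_for(path, mountinfo_text):
    """Classify the mount containing `path` as "shared", "slave" or "private".

    A mount that is both shared and a slave is reported as "shared".
    """
    best, best_opts = None, []
    for line in mountinfo_text.splitlines():
        if not line.strip():
            continue
        mount_point, opts = parse_mountinfo_line(line)
        # Octal escapes in mount points (e.g. \040 for a space) are not decoded.
        prefix = mount_point.rstrip("/") + "/"
        if path == mount_point or path.startswith(prefix):
            # Longest mount point wins; on a tie, the later (stacked) entry wins.
            if best is None or len(mount_point) >= len(best):
                best, best_opts = mount_point, opts
    if any(opt.startswith("shared:") for opt in best_opts):
        return "shared"
    if any(opt.startswith("master:") for opt in best_opts):
        return "slave"
    return "private"


def is_shared_or_slave(path, mountinfo_text):
    """Condition named in the error message (assumed equivalent to Docker's)."""
    return propagation_for(path, mountinfo_text) in ("shared", "slave")


if __name__ == "__main__":
    target = os.path.realpath(sys.argv[1] if len(sys.argv) > 1 else "/")
    if os.path.exists("/proc/self/mountinfo"):
        with open("/proc/self/mountinfo") as f:
            print(target, "->", propagation_for(target, f.read()))
```

For example, run `python3 check_propagation.py /var/lib/edged/pods` on the WSL2 edge node. If it reports `private`, making the root mount shared (for example with `sudo mount --make-rshared /`) may be worth trying; this is an untested assumption, not a confirmed fix.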

What you expected to happen:

I expect the pods to reach the Running state.

How to reproduce it (as minimally and precisely as possible):

Setup:

- Windows 11 machine

- Enable WSL and Hyper-V via Windows Features ("Turn Windows features on or off" -> tick Hyper-V / Hyper-V Platform and Windows Subsystem for Linux)

- Install WSL, either from the Microsoft Store or manually, and update to the newest WSL version to get systemd support

- Edit /etc/wsl.conf so that it contains:

      [boot]
      systemd=true

- Restart WSL completely: run wsl --shutdown from PowerShell, then start WSL again

- Install the required tools (Docker, kubectl, keadm, etc.)

- Set up the K8s cluster on another machine
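
To confirm that the systemd setting actually took effect after restarting WSL, a small check like the following can help. It is a sketch that assumes /etc/wsl.conf is parseable as INI by Python's `configparser` and that the init process reports its name as `systemd` in /proc/1/comm; the helper names are hypothetical.

```python
import configparser
from pathlib import Path


def wsl_conf_enables_systemd(text):
    """True if the [boot] section of a wsl.conf text sets systemd=true."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    # fallback=False covers both a missing [boot] section and a missing key.
    return parser.getboolean("boot", "systemd", fallback=False)


def pid1_name(proc_root="/proc"):
    """Name of the init process (PID 1), or None if it cannot be read."""
    try:
        return Path(proc_root, "1", "comm").read_text().strip()
    except OSError:
        return None


if __name__ == "__main__":
    conf = Path("/etc/wsl.conf")
    if conf.exists():
        print("wsl.conf enables systemd:", wsl_conf_enables_systemd(conf.read_text()))
    # Once systemd is active inside WSL, this should print "systemd".
    print("PID 1 is:", pid1_name())
```

Run it inside the WSL distribution after `wsl --shutdown` and a fresh start; if PID 1 is not `systemd`, the edgecore service will not be managed by systemd either.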

Anything else we need to know?:

I run Kubernetes 1.22.0 with KubeEdge 1.11.1.

I suspect there is a fairly easy fix, since Kubernetes in general now works on WSL2. I deployed KubeEdge CloudCore with the Helm chart. I'm not sure whether KubeEdge itself needs changes or whether this is purely a networking issue. I'm willing to work on this issue if the fix turns out to be simple and someone can point to likely causes of this error. In any case, it would be great to discuss this in detail.

Note that, by default, the IP address of the WSL machine differs from the IP address of the Windows host!

journalctl logs:

    ion: Sync:true MessageType:} Router:{Source:edged Destination: Group:meta Operation:query Resource:longhorn-system/secret/longhorn-grpc-tls} Content:<nil>}], resp[{Header:{ID: ParentID: Timestamp:0 ResourceVersion: Sync:false MessageType:} Router:{Source: Destination: Group: Operation: Resource:} Content:<nil>}], err[timeout to get response for message 4265af07-91e4-4a1c-a3d2-2593fe9076b6]
    Jan 03 18:53:57 mammut edgecore[47191]: E0103 18:53:57.559320 47191 process.go:298] remote query failed: timeout to get response for message 4265af07-91e4-4a1c-a3d2-2593fe9076b6
    Jan 03 18:53:57 mammut edgecore[47191]: W0103 18:53:57.559365 47191 context_channel.go:159] Get bad anonName: when sendresp message, do nothing
    Jan 03 18:53:57 mammut edgecore[47191]: E0103 18:53:57.559416 47191 metaclient.go:112] send sync message longhorn-system/secret/longhorn-grpc-tls failed, error:timeout to get response for message 85a58ef0-34c0-49e3-b090-45c94c0ba189, retries: 2
    Jan 03 18:53:57 mammut edgecore[47191]: E0103 18:53:57.559480 47191 secret.go:195] Couldn't get secret longhorn-system/longhorn-grpc-tls: get secret from metaManager failed, err: timed out waiting for the condition
    Jan 03 18:53:57 mammut edgecore[47191]: I0103 18:53:57.559527 47191 record.go:24] Warning FailedMount MountVolume.SetUp failed for volume "longhorn-grpc-tls" : get secret from metaManager failed, err: timed out waiting for the condition
    Jan 03 18:53:57 mammut edgecore[47191]: E0103 18:53:57.559598 47191 nestedpendingoperations.go:335] Operation for "{volumeName:kubernetes.io/secret/c7ee4d3d-85c6-4cd4-bbbf-5c05b1b0a554-longhorn-grpc-tls podName:c7ee4d3d-85c6-4cd4-bbbf-5c05b1b0a554 nodeName:}" failed. No retries permitted until 2023-01-03 18:55:59.559556778 +0100 CET m=+6423.745431357 (durationBeforeRetry 2m2s). Error: MountVolume.SetUp failed for volume "longhorn-grpc-tls" (UniqueName: "kubernetes.io/secret/c7ee4d3d-85c6-4cd4-bbbf-5c05b1b0a554-longhorn-grpc-tls") pod "longhorn-manager-tvhj8" (UID: "c7ee4d3d-85c6-4cd4-bbbf-5c05b1b0a554") : get secret from metaManager failed, err: timed out waiting for the condition
    Jan 03 18:53:57 mammut edgecore[47191]: I0103 18:53:57.603567 47191 edged.go:992] worker [3] get pod addition item [edgemesh-agent-ht6mq]
    Jan 03 18:53:57 mammut edgecore[47191]: E0103 18:53:57.603593 47191 edged.go:995] consume pod addition backoff: Back-off consume pod [edgemesh-agent-ht6mq] addition error, backoff: [5m0s]
    Jan 03 18:53:57 mammut edgecore[47191]: I0103 18:53:57.603605 47191 edged.go:997] worker [3] backoff pod addition item [edgemesh-agent-ht6mq] failed, re-add to queue
    Jan 03 18:53:57 mammut edgecore[47191]: I0103 18:53:57.605996 47191 edged.go:992] worker [2] get pod addition item [nginx-main-575658f585-9n2wz]
    Jan 03 18:53:57 mammut edgecore[47191]: E0103 18:53:57.606017 47191 edged.go:995] consume pod addition backoff: Back-off consume pod [nginx-main-575658f585-9n2wz] addition error, backoff: [5m0s]
    Jan 03 18:53:57 mammut edgecore[47191]: I0103 18:53:57.606036 47191 edged.go:997] worker [2] backoff pod addition item [nginx-main-575658f585-9n2wz] failed, re-add to queue
    Jan 03 18:53:57 mammut edgecore[47191]: I0103 18:53:57.638294 47191 edged.go:992] worker [4] get pod addition item [edgemesh-agent-ht6mq]
    Jan 03 18:53:57 mammut edgecore[47191]: E0103 18:53:57.638341 47191 edged.go:995] consume pod addition backoff: Back-off consume pod [edgemesh-agent-ht6mq] addition error, backoff: [5m0s]
    Jan 03 18:53:57 mammut edgecore[47191]: I0103 18:53:57.638354 47191 edged.go:997] worker [4] backoff pod addition item [edgemesh-agent-ht6mq] failed, re-add to queue
    Jan 03 18:53:57 mammut edgecore[47191]: I0103 18:53:57.967442 47191 edged.go:992] worker [0] get pod addition item [edgemesh-agent-ht6mq]
    Jan 03 18:53:57 mammut edgecore[47191]: E0103 18:53:57.967552 47191 edged.go:995] consume pod addition backoff: Back-off consume pod [edgemesh-agent-ht6mq] addition error, backoff: [5m0s]
    Jan 03 18:53:57 mammut edgecore[47191]: I0103 18:53:57.967642 47191 edged.go:997] worker [0] backoff pod addition item [edgemesh-agent-ht6mq] failed, re-add to queue
    Jan 03 18:53:57 mammut edgecore[47191]: I0103 18:53:57.973585 47191 edged.go:992] worker [1] get pod addition item [nginx-main-575658f585-9n2wz]
    Jan 03 18:53:57 mammut edgecore[47191]: E0103 18:53:57.973699 47191 edged.go:995] consume pod addition backoff: Back-off consume pod [nginx-main-575658f585-9n2wz] addition error, backoff: [5m0s]
    Jan 03 18:53:57 mammut edgecore[47191]: I0103 18:53:57.973797 47191 edged.go:997] worker [1] backoff pod addition item [nginx-main-575658f585-9n2wz] failed, re-add to queue
    Jan 03 18:53:58 mammut edgecore[47191]: I0103 18:53:58.037770 47191 edged.go:992] worker [3] get pod addition item [edgemesh-agent-ht6mq]
    Jan 03 18:53:58 mammut edgecore[47191]: E0103 18:53:58.037794 47191 edged.go:995] consume pod addition backoff: Back-off consume pod [edgemesh-agent-ht6mq] addition error, backoff: [5m0s]
    Jan 03 18:53:58 mammut edgecore[47191]: I0103 18:53:58.037805 47191 edged.go:997] worker [3] backoff pod addition item [edgemesh-agent-ht6mq] failed, re-add to queue
    Jan 03 18:53:58 mammut edgecore[47191]: W0103 18:53:58.127940 47191 context_channel.go:159] Get bad anonName:3568dcf8-c100-46b7-b502-527e64c73954 when sendresp message, do nothing
    Jan 03 18:53:58 mammut edgecore[47191]: I0103 18:53:58.344926 47191 edged.go:992] worker [2] get pod addition item [edgemesh-agent-ht6mq]
    Jan 03 18:53:58 mammut edgecore[47191]: E0103 18:53:58.344960 47191 edged.go:995] consume pod addition backoff: Back-off consume pod [edgemesh-agent-ht6mq] addition error, backoff: [5m0s]
    Jan 03 18:53:58 mammut edgecore[47191]: I0103 18:53:58.344980 47191 edged.go:997] worker [2] backoff pod addition item [edgemesh-agent-ht6mq] failed, re-add to queue
    Jan 03 18:53:58 mammut edgecore[47191]: I0103 18:53:58.349313 47191 edged.go:992] worker [4] get pod addition item [nginx-main-575658f585-9n2wz]
    Jan 03 18:53:58 mammut edgecore[47191]: E0103 18:53:58.349333 47191 edged.go:995] consume pod addition backoff: Back-off consume pod [nginx-main-575658f585-9n2wz] addition error, backoff: [5m0s]
    Jan 03 18:53:58 mammut edgecore[47191]: I0103 18:53:58.349341 47191 edged.go:997] worker [4] backoff pod addition item [nginx-main-575658f585-9n2wz] failed, re-add to queue
    Jan 03 18:53:58 mammut edgecore[47191]: I0103 18:53:58.379335 47191 edged.go:992] worker [0] get pod addition item [edgemesh-agent-ht6mq]
    Jan 03 18:53:58 mammut edgecore[47191]: E0103 18:53:58.379360 47191 edged.go:995] consume pod addition backoff: Back-off consume pod [edgemesh-agent-ht6mq] addition error, backoff: [5m0s]
    Jan 03 18:53:58 mammut edgecore[47191]: I0103 18:53:58.379370 47191 edged.go:997] worker [0] backoff pod addition item [edgemesh-agent-ht6mq] failed, re-add to queue
    Jan 03 18:53:58 mammut edgecore[47191]: I0103 18:53:58.569273 47191 edged.go:992] worker [1] get pod addition item [nginx-main-575658f585-9n2wz]
    Jan 03 18:53:58 mammut edgecore[47191]: E0103 18:53:58.569324 47191 edged.go:995] consume pod addition backoff: Back-off consume pod [nginx-main-575658f585-9n2wz] addition error, backoff: [5m0s]
    Jan 03 18:53:58 mammut edgecore[47191]: I0103 18:53:58.569338 47191 edged.go:997] worker [1] backoff pod addition item [nginx-main-575658f585-9n2wz] failed, re-add to queue
    Jan 03 18:53:58 mammut edgecore[47191]: I0103 18:53:58.768206 47191 edged.go:992] worker [3] get pod addition item [edgemesh-agent-ht6mq]
    Jan 03 18:53:58 mammut edgecore[47191]: E0103 18:53:58.768249 47191 edged.go:995] consume pod addition backoff: Back-off consume pod [edgemesh-agent-ht6mq] addition error, backoff: [5m0s]
    Jan 03 18:53:58 mammut edgecore[47191]: I0103 18:53:58.768283 47191 edged.go:997] worker [3] backoff pod addition item [edgemesh-agent-ht6mq] failed, re-add to queue
    Jan 03 18:53:58 mammut edgecore[47191]: I0103 18:53:58.788259 47191 edged.go:992] worker [2] get pod addition item [edgemesh-agent-ht6mq]
    Jan 03 18:53:58 mammut edgecore[47191]: E0103 18:53:58.788287 47191 edged.go:995] consume pod addition backoff: Back-off consume pod [edgemesh-agent-ht6mq] addition error, backoff: [5m0s]
    Jan 03 18:53:58 mammut edgecore[47191]: I0103 18:53:58.788301 47191 edged.go:997] worker [2] backoff pod addition item [edgemesh-agent-ht6mq] failed, re-add to queue
    Jan 03 18:53:58 mammut edgecore[47191]: I0103 18:53:58.794728 47191 edged.go:992] worker [4] get pod addition item [nginx-main-575658f585-9n2wz]
    Jan 03 18:53:58 mammut edgecore[47191]: E0103 18:53:58.794752 47191 edged.go:995] consume pod addition backoff: Back-off consume pod [nginx-main-575658f585-9n2wz] addition error, backoff: [5m0s]
    Jan 03 18:53:58 mammut edgecore[47191]: I0103 18:53:58.794779 47191 edged.go:997] worker [4] backoff pod addition item [nginx-main-575658f585-9n2wz] failed, re-add to queue
    Jan 03 18:53:58 mammut edgecore[47191]: I0103 18:53:58.972275 47191 edged.go:992] worker [0] get pod addition item [nginx-main-575658f585-9n2wz]
    Jan 03 18:53:58 mammut edgecore[47191]: E0103 18:53:58.972313 47191 edged.go:995] consume pod addition backoff: Back-off consume pod [nginx-main-575658f585-9n2wz] addition error, backoff: [5m0s]
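
The journal excerpt is dominated by repeated back-off lines, which hide the secret lookup timeouts near the top. To condense such output, the following Python sketch counts "Back-off consume pod [...]" messages per pod and collects the secrets that edgecore reported it couldn't get. The regular expressions are assumptions derived only from the lines quoted above, and the script name `summarize_edgecore.py` is hypothetical.

```python
import re
import sys
from collections import Counter

# Patterns derived only from the edgecore lines quoted above (assumed format).
BACKOFF = re.compile(r"Back-off consume pod \[([^\]]+)\]")
SECRET = re.compile(r"Couldn't get secret (\S+?):")


def summarize(lines):
    """Return (Counter of back-off messages per pod, sorted list of secrets)."""
    backoffs = Counter()
    secrets = set()
    for line in lines:
        match = BACKOFF.search(line)
        if match:
            backoffs[match.group(1)] += 1
        match = SECRET.search(line)
        if match:
            secrets.add(match.group(1))
    return backoffs, sorted(secrets)


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1]) as f:
        counts, missing = summarize(f)
    for pod, n in counts.most_common():
        print(f"{pod}: {n} back-off messages")
    for name in missing:
        print(f"could not get secret: {name}")
```

Assuming the systemd unit is named edgecore, the input could be produced with `journalctl -u edgecore > edgecore.txt` and then passed as `python3 summarize_edgecore.py edgecore.txt`.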

Environment:

- OS (e.g. cat /etc/os-release):

      NAME="Ubuntu"
      VERSION="20.04.3 LTS (Focal Fossa)"
      ID=ubuntu
      ID_LIKE=debian
      PRETTY_NAME="Ubuntu 20.04.3 LTS"
      VERSION_ID="20.04"
      HOME_URL="https://www.ubuntu.com/"
      SUPPORT_URL="https://help.ubuntu.com/"
      BUG_REPORT_URL="https://bugs.launchpad.net/ubuntu/"
      PRIVACY_POLICY_URL="https://www.ubuntu.com/legal/terms-and-policies/privacy-policy"
      VERSION_CODENAME=focal
      UBUNTU_CODENAME=focal

- Kernel (e.g. uname -a):

      Linux mammut 5.15.79.1-microsoft-standard-WSL2 #1 SMP Wed Nov 23 01:01:46 UTC 2022 x86_64 x86_64 x86_64 GNU/Linux

- Go version (e.g. go version):

- Others (wsl --version):

      WSL version: 1.0.3.0
      Kernel version: 5.15.79.1
      WSLg version: 1.0.47
      MSRDC version: 1.2.3575
      Direct3D version: 1.606.4
      DXCore version: 10.0.25131.1002-220531-1700.rs-onecore-base2-hyp
      Windows version: 10.0.22621.963

Grafana, Prometheus, metrics-server, kube-state-metrics scripts:

https://github.com/DanWahlin/DockerAndKubernetesCourseCode/tree/master/samples/prometheus

edgecore.log:

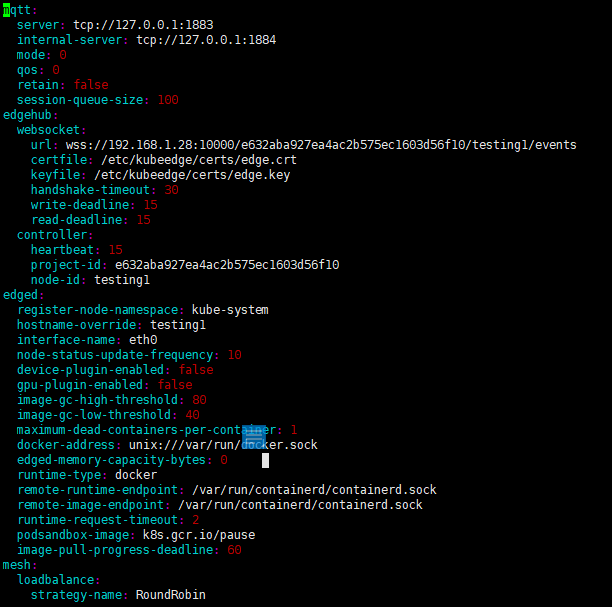

and my edge config file: