Environment

- NATS version: 2.2.6 with JetStream enabled

- Number of nodes in the cluster: 3

- Deployed on OKD 3.11 with the NATS Helm chart 0.8.0

Event description

- Getting JetStream stream info with natscli succeeds, but getting JetStream consumer info fails.

- [Pub] OK, [Sub] failed. Since 7/7 00:18, the NATS subscriber client cannot connect to the NATS cluster.

- The cluster had been running for more than a month with no errors until 7/7. We confirmed that there were no network or hardware problems.

- The logs and the actions we tried are attached below. Please suggest other repair actions. Thanks.

NATS server logs

nats instance 0

[1] 2021/07/07 00:18:44.650787 [WRN] JetStream cluster stream '$G > MY-STREAM2' has NO quorum, stalled.

[1] 2021/07/07 00:18:44.651098 [WRN] JetStream cluster consumer '$G > MY-STREAM2 > consumer5' has NO quorum, stalled.

[1] 2021/07/07 00:18:47.433327 [INF] JetStream cluster new metadata leader

[1] 2021/07/07 00:18:47.930284 [INF] JetStream cluster new consumer leader for '$G > MY-STREAM2 > consumer5'

[1] 2021/07/07 00:18:51.306199 [WRN] JetStream cluster stream '$G > MY-STREAM' has NO quorum, stalled.

[1] 2021/07/07 00:18:51.652389 [WRN] JetStream cluster consumer '$G > MY-STREAM > consumer3' has NO quorum, stalled.

[1] 2021/07/07 00:18:56.555042 [INF] JetStream cluster new consumer leader for '$G > MY-STREAM > consumer2'

[1] 2021/07/07 00:19:00.462077 [INF] JetStream cluster new consumer leader for '$G > MY-STREAM > consumer3'

[1] 2021/07/07 00:19:00.870001 [WRN] Got stream sequence mismatch for '$G > MY-STREAM'

[1] 2021/07/07 00:19:01.024537 [WRN] Resetting stream '$G > MY-STREAM'

[1] 2021/07/07 00:19:01.292724 [INF] JetStream cluster new stream leader for '$G > MY-STREAM'

nats instance 1

[1] 2021/07/07 00:18:48.190309 [INF] JetStream cluster new stream leader for '$G > MY-STREAM2'

[1] 2021/07/07 00:18:53.343597 [INF] JetStream cluster new metadata leader

[1] 2021/07/07 00:18:56.820943 [INF] JetStream cluster new consumer leader for '$G > MY-STREAM2 > consumer5'

[1] 2021/07/07 00:18:57.098682 [INF] JetStream cluster new consumer leader for '$G > MY-STREAM > consumer1'

[1] 2021/07/07 00:18:57.572857 [INF] JetStream cluster new stream leader for '$G > MY-STREAM2'

[1] 2021/07/07 00:18:57.679975 [INF] JetStream cluster new stream leader for '$G > MY-STREAM'

[1] 2021/07/07 00:19:00.710121 [WRN] Got stream sequence mismatch for '$G > MY-STREAM'

[1] 2021/07/07 00:19:00.909870 [WRN] Resetting stream '$G > MY-STREAM'

[1] 2021/07/08 03:30:19.175389 [WRN] Did not receive all stream info results for "$G"

nats instance 2

[1] 2021/07/07 00:18:57.508614 [INF] JetStream cluster new consumer leader for '$G > MY-STREAM > consumer4'

[1] 2021/07/07 00:19:00.710399 [WRN] Got stream sequence mismatch for '$G > MY-STREAM'

[1] 2021/07/07 00:19:00.907675 [WRN] Resetting stream '$G > MY-STREAM'

Tried Actions

- Tried to run "nats consumer cluster step-down" [Failed]

nats consumer list MY-STREAM

# Consumers for Stream MY-STREAM:

# consumer1

# consumer2

# consumer3

# consumer4

nats consumer cluster step-down --trace

# 13:11:04 >>> $JS.API.STREAM.NAMES

# {"offset":0}

# 13:11:05 <<< $JS.API.STREAM.NAMES

# {"type":"io.nats.jetstream.api.v1.stream_names_response","total":2,"offset":0,"limit":1024,"streams":["MY-STREAM","MY-STREAM2"]}

# ? Select a Stream MY-STREAM

# 13:11:13 >>> $JS.API.CONSUMER.NAMES.MY-STREAM

# {"offset":0}

# 13:11:13 <<< $JS.API.CONSUMER.NAMES.MY-STREAM

# {"type":"io.nats.jetstream.api.v1.consumer_names_response","total":4,"offset":0,"limit":1024,"consumers":["consumer1","consumer2","consumer3","consumer4"]}

# ? Select a Consumer consumer2

# 13:11:16 >>> $JS.API.CONSUMER.INFO.MY-STREAM.consumer2

# 13:11:21 <<< $JS.API.CONSUMER.INFO.MY-STREAM.consumer2: context deadline exceeded

# nats.exe: error: context deadline exceeded, try --help

- Tried to request the CONSUMER STEPDOWN API directly [Failed]

nats req '$JS.API.CONSUMER.LEADER.STEPDOWN.MY-STREAM.consumer3' "" --trace

# 05:20:43 Sending request on "$JS.API.CONSUMER.LEADER.STEPDOWN.MY-STREAM.consumer3"

# nats: error: nats: timeout, try --help

- Tried to restart the NATS servers [Still failed to get consumer info]

kubectl rollout restart statefulset nats -n mynamespace

nats con info --trace

# 05:43:02 >>> $JS.API.STREAM.NAMES

# {"offset":0}

# 05:43:02 <<< $JS.API.STREAM.NAMES

# {"type":"io.nats.jetstream.api.v1.stream_names_response","total":2,"offset":0,"limit":1024,"streams":["MY-STREAM","MY-STREAM2"]}

# ? Select a Stream MY-STREAM

# 05:43:03 >>> $JS.API.CONSUMER.NAMES.MY-STREAM

# {"offset":0}

# 05:43:03 <<< $JS.API.CONSUMER.NAMES.MY-STREAM

# {"type":"io.nats.jetstream.api.v1.consumer_names_response","total":4,"offset":0,"limit":1024,"consumers":["consumer1","consumer2","consumer3","consumer4"]}

# ? Select a Consumer consumer1

# 05:43:05 >>> $JS.API.CONSUMER.INFO.MY-STREAM.consumer1

# 05:43:05 <<< $JS.API.CONSUMER.INFO.MY-STREAM.consumer1

# {"type":"io.nats.jetstream.api.v1.consumer_info_response","error":{"code":503,"description":"JetStream system temporarily unavailable"}}

# nats: error: could not load Consumer MY-STREAM > consumer1: JetStream system temporarily unavailable
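Unlike the earlier step-down attempts, which got no reply before the client timed out, this 503 arrives as an ordinary JSON reply carrying an `error` object. When scripting these API calls, a small helper can make that distinction explicit. This is a sketch only: `check_api_response` is a hypothetical name, and the sole assumption about the payload is the `type` / `error.code` / `error.description` shape visible in the traces above.

```python
import json


def check_api_response(raw):
    """Decode a JetStream API JSON reply.

    Returns the decoded dict when there is no "error" key; otherwise raises
    RuntimeError with the response type, error code, and description.
    """
    resp = json.loads(raw)
    err = resp.get("error")
    if err is not None:
        raise RuntimeError(
            f"{resp.get('type', 'unknown response')}: "
            f"{err.get('code')} {err.get('description')}"
        )
    return resp


if __name__ == "__main__":
    # The consumer info reply from the trace above.
    reply = (
        '{"type":"io.nats.jetstream.api.v1.consumer_info_response",'
        '"error":{"code":503,"description":"JetStream system temporarily unavailable"}}'
    )
    try:
        check_api_response(reply)
    except RuntimeError as exc:
        print("API error:", exc)
```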

The nats-0 server logs many JetStream warnings (WRN):

[1] 2021/07/08 05:40:33.345825 [WRN] JetStream cluster consumer '$G > MY-STREAM > consumer3' has NO quorum, stalled.

[1] 2021/07/08 05:40:34.027116 [WRN] JetStream cluster consumer '$G > MY-STREAM > consumer2' has NO quorum, stalled.

[1] 2021/07/08 05:40:34.542920 [WRN] JetStream cluster consumer '$G > MY-STREAM > consumer1' has NO quorum, stalled.

[1] 2021/07/08 05:40:35.494354 [WRN] JetStream cluster consumer '$G > MY-STREAM > consumer4' has NO quorum, stalled.

[1] 2021/07/08 05:40:55.586260 [WRN] JetStream cluster consumer '$G > MY-STREAM > consumer4' has NO quorum, stalled.

[1] 2021/07/08 05:40:57.300211 [WRN] JetStream cluster consumer '$G > MY-STREAM > consumer1' has NO quorum, stalled.

[1] 2021/07/08 05:40:58.005908 [WRN] JetStream cluster consumer '$G > MY-STREAM > consumer3' has NO quorum, stalled.

[1] 2021/07/08 05:40:58.324828 [WRN] JetStream cluster consumer '$G > MY-STREAM > consumer2' has NO quorum, stalled.

[1] 2021/07/08 05:41:16.664240 [WRN] JetStream cluster consumer '$G > MY-STREAM > consumer4' has NO quorum, stalled.

[1] 2021/07/08 05:41:17.659280 [WRN] JetStream cluster consumer '$G > MY-STREAM > consumer1' has NO quorum, stalled.

[1] 2021/07/08 05:41:20.245055 [WRN] JetStream cluster consumer '$G > MY-STREAM > consumer3' has NO quorum, stalled.
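To see how regularly each consumer re-reports the stall, the warnings can be grouped per consumer and the gaps between consecutive warnings measured. This is a minimal sketch that assumes exactly the warning format shown above; `stall_intervals` is a hypothetical helper, not a NATS tool.

```python
import re
from collections import defaultdict
from datetime import datetime

# Matches the consumer stall warning format seen in the nats-0 log, e.g.
# [1] 2021/07/08 05:40:33.345825 [WRN] JetStream cluster consumer '$G > MY-STREAM > consumer3' has NO quorum, stalled.
STALL = re.compile(
    r"^\[\d+\] (?P<ts>\S+ \S+) \[WRN\] JetStream cluster consumer "
    r"'(?P<account>\S+) > (?P<stream>\S+) > (?P<consumer>[^'\s]+)' "
    r"has NO quorum, stalled\.$"
)


def stall_intervals(log_text):
    """Map 'stream > consumer' to the seconds between consecutive stall warnings."""
    seen = defaultdict(list)
    for line in log_text.splitlines():
        m = STALL.match(line.strip())
        if m:
            ts = datetime.strptime(m["ts"], "%Y/%m/%d %H:%M:%S.%f")
            seen[f"{m['stream']} > {m['consumer']}"].append(ts)
    intervals = {}
    for key, stamps in seen.items():
        stamps.sort()
        # Gap between each warning and the next one, rounded to 0.1 s.
        intervals[key] = [
            round((b - a).total_seconds(), 1) for a, b in zip(stamps, stamps[1:])
        ]
    return intervals
```

On the excerpt above, the gaps for every consumer fall between roughly 20 and 25 seconds.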

The NATS stream report shows a failed status for the nats-0 replica of MY-STREAM:

nats stream report

Obtaining Stream stats

+--------------------------------------------------------------------------------------------------------------------+
|                                                   Stream Report                                                    |
+-----------------------------+---------+-----------+----------+---------+------+---------+--------------------------+
| Stream                      | Storage | Consumers | Messages | Bytes   | Lost | Deleted | Replicas                 |
+-----------------------------+---------+-----------+----------+---------+------+---------+--------------------------+
| MY-STREAM2                  | File    | 1         | 0        | 0 B     | 0    | 0       | nats-0, nats-1, nats-2*  |
| MY-STREAM                   | File    | 0         | 500      | 3.9 MiB | 0    | 0       | nats-0!, nats-1, nats-2* |
+-----------------------------+---------+-----------+----------+---------+------+---------+--------------------------+
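The Replicas column packs leadership and replica health into name suffixes. A sketch of splitting one cell, assuming `*` marks the stream leader and `!` marks a replica the CLI considers unhealthy (for example offline); this reading of the markers is an assumption, not taken from natscli documentation, and `parse_replicas` is a hypothetical helper.

```python
def parse_replicas(cell):
    """Split a Replicas cell such as 'nats-0!, nats-1, nats-2*'.

    Assumption: a trailing '*' marks the leader and a trailing '!' marks a
    replica flagged as unhealthy. Other names are returned unchanged.
    """
    leader = None
    flagged = []
    peers = []
    for raw in cell.split(","):
        name = raw.strip()
        if not name:
            continue
        if name.endswith("*"):
            name = name[:-1]
            leader = name
        elif name.endswith("!"):
            name = name[:-1]
            flagged.append(name)
        peers.append(name)
    return {"peers": peers, "leader": leader, "flagged": flagged}


if __name__ == "__main__":
    for stream, cell in [
        ("MY-STREAM2", "nats-0, nats-1, nats-2*"),
        ("MY-STREAM", "nats-0!, nats-1, nats-2*"),
    ]:
        print(stream, parse_replicas(cell))
```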

- Tried to remove the nats-0 peer from MY-STREAM [Failed]

nats stream cluster peer-remove

# ? Select a Stream MY-STREAM

# ? Select a Peer nats-0

# 06:16:31 Removing peer "nats-0"

# nats: error: peer remap failed, try --help

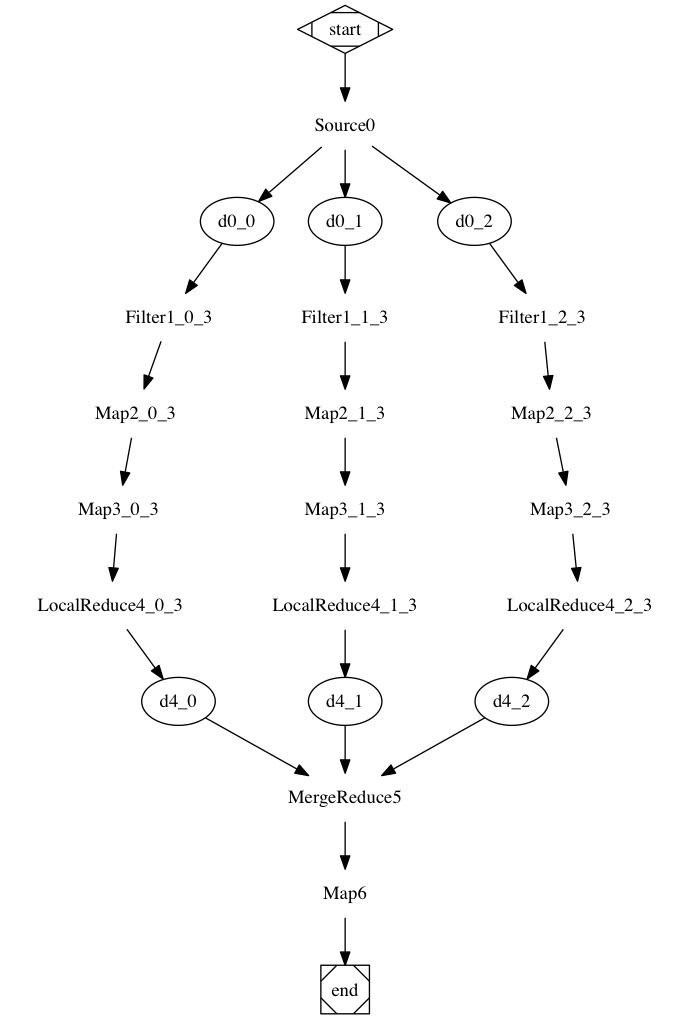

Sync blocking graph: