What steps did you take and what happened:

Hey!

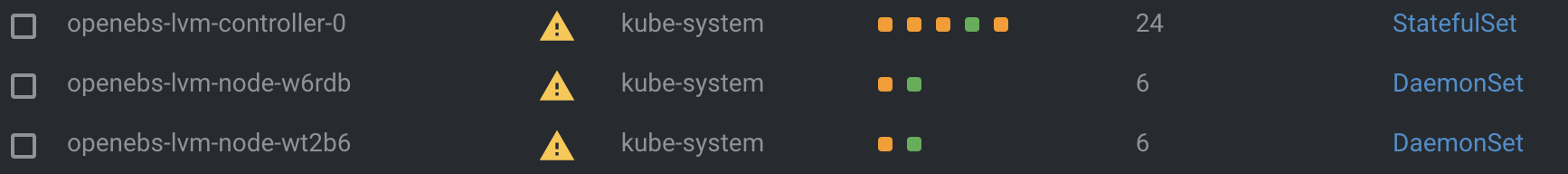

I successfully installed the OpenEBS Operator on my Kubernetes cluster, which was recently provisioned with Kubespray.

I also successfully created two StorageClasses, one for the ext4 file system and one for xfs.

I'm using the xfs file system for MongoDB, since it is the recommended one, and the default ext4 for other PVCs.

The ext4 PVC was created and mounted to the desired pod without problems, but the xfs PVC fails to mount.

What did you expect to happen:

The PVC with the xfs file system is created successfully and mounted to the desired MongoDB pod.

The output of the following commands will help us better understand what's going on:

(Pasting long output into a GitHub gist or other Pastebin is fine.)

`kubectl logs -f openebs-lvm-node- -n lvm -c openebs-lvm-plugin`

I1121 18:59:58.749533 1 main.go:136] LVM Driver Version :- develop-098a38e:07-06-2022 - commit :- 098a38ea83d2f554ce060c5ba449c345e5a46c8c

I1121 18:59:58.749603 1 main.go:137] DriverName: local.csi.openebs.io Plugin: agent EndPoint: unix:///plugin/csi.sock NodeID: mic101-06 SetIOLimits: false ContainerRuntime: containerd RIopsPerGB: [] WIopsPerGB: [] RBpsPerGB: [] WBpsPerGB: []

I1121 18:59:58.749625 1 driver.go:48] enabling volume access mode: SINGLE_NODE_WRITER
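For anyone scanning this output: the driver logs in the klog header format (a severity letter, month and day, time with microseconds, a numeric ID, then `file:line]` and the message). The following is only a minimal sketch for filtering the paste, not part of the driver; the regular expression and the `parse_klog` helper are my own assumptions about how to split a line.

```python
import re

# Assumed pattern for a klog header: "Lmmdd hh:mm:ss.uuuuuu id file:line] message".
KLOG = re.compile(
    r"^(?P<severity>[IWEF])(?P<month>\d{2})(?P<day>\d{2}) "
    r"(?P<time>\d{2}:\d{2}:\d{2}\.\d{6})\s+(?P<ident>\d+) "
    r"(?P<source>[^\s:]+:\d+)\] (?P<message>.*)$"
)

def parse_klog(line):
    """Return the header fields as a dict, or None for continuation lines."""
    match = KLOG.match(line)
    return match.groupdict() if match else None

# Sample copied from the log above.
sample = "I1121 18:59:58.749625 1 driver.go:48] enabling volume access mode: SINGLE_NODE_WRITER"
entry = parse_klog(sample)
print(entry["severity"], entry["source"], entry["message"])
```

Lines that do not start with a header, such as the `Mounting command:` continuation lines further down, return `None`, which makes it easy to keep only the `E` entries.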

I1121 18:59:58.750463 1 grpc.go:190] Listening for connections on address: &net.UnixAddr{Name:"//plugin/csi.sock", Net:"unix"}

I1121 18:59:58.751744 1 builder.go:83] Creating event broadcaster

I1121 18:59:58.751842 1 builder.go:89] Creating lvm volume controller object

I1121 18:59:58.751875 1 builder.go:99] Adding Event handler functions for lvm volume controller

I1121 18:59:58.751909 1 start.go:70] Starting informer for lvm volume controller

I1121 18:59:58.751916 1 start.go:72] Starting Lvm volume controller

I1121 18:59:58.751922 1 volume.go:294] Starting Vol controller

I1121 18:59:58.751925 1 volume.go:297] Waiting for informer caches to sync

I1121 18:59:58.752506 1 builder.go:83] Creating event broadcaster

I1121 18:59:58.752572 1 builder.go:89] Creating lvm snapshot controller object

I1121 18:59:58.752589 1 builder.go:98] Adding Event handler functions for lvm snapshot controller

I1121 18:59:58.752855 1 start.go:70] Starting informer for lvm snapshot controller

I1121 18:59:58.752870 1 start.go:72] Starting Lvm snapshot controller

I1121 18:59:58.752876 1 snapshot.go:194] Starting Snap controller

I1121 18:59:58.752880 1 snapshot.go:197] Waiting for informer caches to sync

I1121 18:59:58.764412 1 builder.go:93] Creating lvm node controller object

I1121 18:59:58.764448 1 builder.go:105] Adding Event handler functions for lvm node controller

I1121 18:59:58.764469 1 start.go:95] Starting informer for lvm node controller

I1121 18:59:58.764484 1 start.go:98] Starting Lvm node controller

I1121 18:59:58.764493 1 lvmnode.go:222] Starting Node controller

I1121 18:59:58.764498 1 lvmnode.go:225] Waiting for informer caches to sync

I1121 18:59:58.767065 1 lvmnode.go:152] Got add event for lvm node lvm/mic101-06

I1121 18:59:58.852084 1 volume.go:301] Starting Vol workers

I1121 18:59:58.852140 1 volume.go:308] Started Vol workers

I1121 18:59:58.854714 1 snapshot.go:201] Starting Snap workers

I1121 18:59:58.854743 1 snapshot.go:208] Started Snap workers

I1121 18:59:58.864631 1 lvmnode.go:230] Starting Node workers

I1121 18:59:58.864653 1 lvmnode.go:237] Started Node workers

I1121 18:59:58.900721 1 lvmnode.go:305] Successfully synced 'lvm/mic101-06'

I1121 18:59:59.346070 1 grpc.go:72] GRPC call: /csi.v1.Identity/GetPluginInfo requests {}

I1121 18:59:59.347186 1 grpc.go:81] GRPC response: {"name":"local.csi.openebs.io","vendor_version":"develop-098a38e:07-06-2022"}

I1121 19:00:00.318319 1 grpc.go:72] GRPC call: /csi.v1.Node/NodeGetInfo requests {}

I1121 19:00:00.322318 1 grpc.go:81] GRPC response: {"accessible_topology":{"segments":{"kubernetes.io/hostname":"mic101-06","openebs.io/nodename":"mic101-06"}},"node_id":"mic101-06"}

I1121 19:00:58.888204 1 lvmnode.go:305] Successfully synced 'lvm/mic101-06'

I1121 19:01:19.070229 1 volume.go:84] Got add event for Vol pvc-b4e5b534-300f-460c-ba01-92e312ce6b40

I1121 19:01:19.070245 1 volume.go:85] lvmvolume object to be enqueued by Add handler: &{{LVMVolume local.openebs.io/v1alpha1} {pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 lvm f95e7f84-ce74-484d-b4d5-d81352d885d3 171987511 1 2022-11-21 19:01:19 +0000 UTC <nil> <nil> map[] map[] [] [] [{lvm-driver Update local.openebs.io/v1alpha1 2022-11-21 19:01:19 +0000 UTC FieldsV1 {"f:spec":{".":{},"f:capacity":{},"f:ownerNodeID":{},"f:shared":{},"f:thinProvision":{},"f:vgPattern":{},"f:volGroup":{}},"f:status":{".":{},"f:state":{}}}}]} {mic101-06 ^lvmvg$ 21474836480 no no} {Pending <nil>}}

I1121 19:01:19.070363 1 volume.go:54] Getting lvmvol object name:pvc-b4e5b534-300f-460c-ba01-92e312ce6b40, ns:lvm from cache

I1121 19:01:19.145099 1 lvm_util.go:287] lvm: created volume lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40

I1121 19:01:19.154097 1 volume.go:366] Successfully synced 'lvm/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40'

I1121 19:01:20.428406 1 grpc.go:72] GRPC call: /csi.v1.Node/NodePublishVolume requests {"target_path":"/var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount","volume_capability":{"AccessType":{"Mount":{"fs_type":"xfs"}},"access_mode":{"mode":1}},"volume_context":{"csi.storage.k8s.io/ephemeral":"false","csi.storage.k8s.io/pod.name":"mongodb-0","csi.storage.k8s.io/pod.namespace":"mongodb","csi.storage.k8s.io/pod.uid":"d47a5fc6-1bd9-45a8-a61c-c97473092a9d","csi.storage.k8s.io/serviceAccount.name":"mongodb","openebs.io/cas-type":"localpv-lvm","openebs.io/volgroup":"lvmvg","storage.kubernetes.io/csiProvisionerIdentity":"1669057199601-8081-local.csi.openebs.io"},"volume_id":"pvc-b4e5b534-300f-460c-ba01-92e312ce6b40"}
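To make the request above easier to read, here is a small sketch that pulls out the fields relevant to this report. The JSON is a trimmed copy of the logged request (fields not needed here are omitted), and `summarize` is a hypothetical helper, not something the plugin provides. The `/dev/<volgroup>/<volume_id>` device path is inferred from the mount lines in this log.

```python
import json

# Trimmed copy of the NodePublishVolume request logged above.
request = json.loads("""
{
  "volume_capability": {"AccessType": {"Mount": {"fs_type": "xfs"}}, "access_mode": {"mode": 1}},
  "volume_context": {
    "csi.storage.k8s.io/pod.name": "mongodb-0",
    "csi.storage.k8s.io/pod.namespace": "mongodb",
    "openebs.io/volgroup": "lvmvg"
  },
  "volume_id": "pvc-b4e5b534-300f-460c-ba01-92e312ce6b40"
}
""")

def summarize(req):
    """Collect the file system type, device path and target pod from a request."""
    ctx = req["volume_context"]
    return {
        "fs_type": req["volume_capability"]["AccessType"]["Mount"]["fs_type"],
        # Assumption: the device path follows the /dev/<vg>/<lv> pattern seen in this log.
        "device": f"/dev/{ctx['openebs.io/volgroup']}/{req['volume_id']}",
        "pod": f"{ctx['csi.storage.k8s.io/pod.namespace']}/{ctx['csi.storage.k8s.io/pod.name']}",
    }

print(summarize(request))
```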

I1121 19:01:20.448698 1 mount_linux.go:366] Disk "/dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40" appears to be unformatted, attempting to format as type: "xfs" with options: [/dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40]

I1121 19:01:20.545574 1 mount_linux.go:376] Disk successfully formatted (mkfs): xfs - /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

E1121 19:01:20.549099 1 mount_linux.go:150] Mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.
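Note that the mount arguments name the device as `/dev/lvmvg/pvc-...` while the error names `/dev/mapper/lvmvg-pvc--...`. These refer to the same logical volume: device-mapper joins the volume group and logical volume names with a single hyphen and doubles any hyphen inside either name. A minimal sketch of that mapping (`dm_name` is a hypothetical helper written for illustration):

```python
def dm_name(vg, lv):
    """Build the device-mapper name for a VG/LV pair.

    Hyphens inside either name are doubled so that the single hyphen
    separating the two parts stays unambiguous.
    """
    return f"{vg.replace('-', '--')}-{lv.replace('-', '--')}"

lv = "pvc-b4e5b534-300f-460c-ba01-92e312ce6b40"
print("/dev/mapper/" + dm_name("lvmvg", lv))
```

So the error is about the volume that was just formatted, not about some other device.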

E1121 19:01:20.549167 1 mount.go:72] lvm: failed to mount volume /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 [xfs] to /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount, error mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:01:20.549231 1 grpc.go:79] GRPC error: rpc error: code = Internal desc = failed to format and mount the volume error: mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

I1121 19:01:21.130985 1 grpc.go:72] GRPC call: /csi.v1.Node/NodePublishVolume requests {"target_path":"/var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount","volume_capability":{"AccessType":{"Mount":{"fs_type":"xfs"}},"access_mode":{"mode":1}},"volume_context":{"csi.storage.k8s.io/ephemeral":"false","csi.storage.k8s.io/pod.name":"mongodb-0","csi.storage.k8s.io/pod.namespace":"mongodb","csi.storage.k8s.io/pod.uid":"d47a5fc6-1bd9-45a8-a61c-c97473092a9d","csi.storage.k8s.io/serviceAccount.name":"mongodb","openebs.io/cas-type":"localpv-lvm","openebs.io/volgroup":"lvmvg","storage.kubernetes.io/csiProvisionerIdentity":"1669057199601-8081-local.csi.openebs.io"},"volume_id":"pvc-b4e5b534-300f-460c-ba01-92e312ce6b40"}

E1121 19:01:21.173108 1 mount_linux.go:150] Mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:01:21.173140 1 mount.go:72] lvm: failed to mount volume /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 [xfs] to /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount, error mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:01:21.173163 1 grpc.go:79] GRPC error: rpc error: code = Internal desc = failed to format and mount the volume error: mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

I1121 19:01:22.238272 1 grpc.go:72] GRPC call: /csi.v1.Node/NodePublishVolume requests {"target_path":"/var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount","volume_capability":{"AccessType":{"Mount":{"fs_type":"xfs"}},"access_mode":{"mode":1}},"volume_context":{"csi.storage.k8s.io/ephemeral":"false","csi.storage.k8s.io/pod.name":"mongodb-0","csi.storage.k8s.io/pod.namespace":"mongodb","csi.storage.k8s.io/pod.uid":"d47a5fc6-1bd9-45a8-a61c-c97473092a9d","csi.storage.k8s.io/serviceAccount.name":"mongodb","openebs.io/cas-type":"localpv-lvm","openebs.io/volgroup":"lvmvg","storage.kubernetes.io/csiProvisionerIdentity":"1669057199601-8081-local.csi.openebs.io"},"volume_id":"pvc-b4e5b534-300f-460c-ba01-92e312ce6b40"}

E1121 19:01:22.279818 1 mount_linux.go:150] Mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:01:22.279848 1 mount.go:72] lvm: failed to mount volume /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 [xfs] to /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount, error mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:01:22.279891 1 grpc.go:79] GRPC error: rpc error: code = Internal desc = failed to format and mount the volume error: mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

I1121 19:01:24.358032 1 grpc.go:72] GRPC call: /csi.v1.Node/NodePublishVolume requests {"target_path":"/var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount","volume_capability":{"AccessType":{"Mount":{"fs_type":"xfs"}},"access_mode":{"mode":1}},"volume_context":{"csi.storage.k8s.io/ephemeral":"false","csi.storage.k8s.io/pod.name":"mongodb-0","csi.storage.k8s.io/pod.namespace":"mongodb","csi.storage.k8s.io/pod.uid":"d47a5fc6-1bd9-45a8-a61c-c97473092a9d","csi.storage.k8s.io/serviceAccount.name":"mongodb","openebs.io/cas-type":"localpv-lvm","openebs.io/volgroup":"lvmvg","storage.kubernetes.io/csiProvisionerIdentity":"1669057199601-8081-local.csi.openebs.io"},"volume_id":"pvc-b4e5b534-300f-460c-ba01-92e312ce6b40"}

E1121 19:01:24.399898 1 mount_linux.go:150] Mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:01:24.399936 1 mount.go:72] lvm: failed to mount volume /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 [xfs] to /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount, error mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:01:24.399960 1 grpc.go:79] GRPC error: rpc error: code = Internal desc = failed to format and mount the volume error: mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

I1121 19:01:28.482745 1 grpc.go:72] GRPC call: /csi.v1.Node/NodePublishVolume requests {"target_path":"/var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount","volume_capability":{"AccessType":{"Mount":{"fs_type":"xfs"}},"access_mode":{"mode":1}},"volume_context":{"csi.storage.k8s.io/ephemeral":"false","csi.storage.k8s.io/pod.name":"mongodb-0","csi.storage.k8s.io/pod.namespace":"mongodb","csi.storage.k8s.io/pod.uid":"d47a5fc6-1bd9-45a8-a61c-c97473092a9d","csi.storage.k8s.io/serviceAccount.name":"mongodb","openebs.io/cas-type":"localpv-lvm","openebs.io/volgroup":"lvmvg","storage.kubernetes.io/csiProvisionerIdentity":"1669057199601-8081-local.csi.openebs.io"},"volume_id":"pvc-b4e5b534-300f-460c-ba01-92e312ce6b40"}

E1121 19:01:28.524674 1 mount_linux.go:150] Mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:01:28.524711 1 mount.go:72] lvm: failed to mount volume /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 [xfs] to /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount, error mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:01:28.524734 1 grpc.go:79] GRPC error: rpc error: code = Internal desc = failed to format and mount the volume error: mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

I1121 19:01:36.536941 1 grpc.go:72] GRPC call: /csi.v1.Node/NodePublishVolume requests {"target_path":"/var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount","volume_capability":{"AccessType":{"Mount":{"fs_type":"xfs"}},"access_mode":{"mode":1}},"volume_context":{"csi.storage.k8s.io/ephemeral":"false","csi.storage.k8s.io/pod.name":"mongodb-0","csi.storage.k8s.io/pod.namespace":"mongodb","csi.storage.k8s.io/pod.uid":"d47a5fc6-1bd9-45a8-a61c-c97473092a9d","csi.storage.k8s.io/serviceAccount.name":"mongodb","openebs.io/cas-type":"localpv-lvm","openebs.io/volgroup":"lvmvg","storage.kubernetes.io/csiProvisionerIdentity":"1669057199601-8081-local.csi.openebs.io"},"volume_id":"pvc-b4e5b534-300f-460c-ba01-92e312ce6b40"}

E1121 19:01:36.577764 1 mount_linux.go:150] Mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:01:36.577790 1 mount.go:72] lvm: failed to mount volume /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 [xfs] to /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount, error mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:01:36.577813 1 grpc.go:79] GRPC error: rpc error: code = Internal desc = failed to format and mount the volume error: mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

I1121 19:01:52.648665 1 grpc.go:72] GRPC call: /csi.v1.Node/NodePublishVolume requests {"target_path":"/var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount","volume_capability":{"AccessType":{"Mount":{"fs_type":"xfs"}},"access_mode":{"mode":1}},"volume_context":{"csi.storage.k8s.io/ephemeral":"false","csi.storage.k8s.io/pod.name":"mongodb-0","csi.storage.k8s.io/pod.namespace":"mongodb","csi.storage.k8s.io/pod.uid":"d47a5fc6-1bd9-45a8-a61c-c97473092a9d","csi.storage.k8s.io/serviceAccount.name":"mongodb","openebs.io/cas-type":"localpv-lvm","openebs.io/volgroup":"lvmvg","storage.kubernetes.io/csiProvisionerIdentity":"1669057199601-8081-local.csi.openebs.io"},"volume_id":"pvc-b4e5b534-300f-460c-ba01-92e312ce6b40"}

E1121 19:01:52.689956 1 mount_linux.go:150] Mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:01:52.689990 1 mount.go:72] lvm: failed to mount volume /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 [xfs] to /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount, error mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:01:52.690011 1 grpc.go:79] GRPC error: rpc error: code = Internal desc = failed to format and mount the volume error: mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.
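The same failure repeats on every `NodePublishVolume` call, and the calls arrive at growing intervals. As a rough check (a sketch only, using the call timestamps copied from the log up to this point), the gaps can be computed like this:

```python
from datetime import datetime

# Timestamps of the NodePublishVolume calls, copied from the log above.
calls = [
    "19:01:20.428406",
    "19:01:21.130985",
    "19:01:22.238272",
    "19:01:24.358032",
    "19:01:28.482745",
    "19:01:36.536941",
    "19:01:52.648665",
]

def retry_gaps(stamps):
    """Return the number of seconds between consecutive timestamps."""
    times = [datetime.strptime(s, "%H:%M:%S.%f") for s in stamps]
    return [(later - earlier).total_seconds() for earlier, later in zip(times, times[1:])]

gaps = retry_gaps(calls)
for gap in gaps:
    print(f"{gap:.3f}s")
```

After the first retry the gaps roughly double each time, which looks like the caller retrying with an exponential backoff. The mount itself fails identically on each attempt, so the retries are not adding new information.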

I1121 19:01:58.902968 1 lvmnode.go:109] lvm node controller: node volume groups updated current=[{Name:appvg UUID:5gc1Kp-y6uY-d5Ks-XToJ-c0c1-ie3K-Vuwo5G Size:{i:{value:214744170496 scale:0} d:{Dec:<nil>} s:204796Mi Format:BinarySI} Free:{i:{value:32208060416 scale:0} d:{Dec:<nil>} s:30716Mi Format:BinarySI} LVCount:2 PVCount:1 MaxLV:0 MaxPV:0 SnapCount:0 MissingPVCount:0 MetadataCount:1 MetadataUsedCount:1 MetadataFree:{i:{value:520192 scale:0} d:{Dec:<nil>} s:508Ki Format:BinarySI} MetadataSize:{i:{value:1044480 scale:0} d:{Dec:<nil>} s:1020Ki Format:BinarySI} Permission:0 AllocationPolicy:0} {Name:centos UUID:ftZc0y-rq2X-AUi6-R6P7-Dp98-tb2Z-g5c9Vg Size:{i:{value:61845012480 scale:0} d:{Dec:<nil>} s:58980Mi Format:BinarySI} Free:{i:{value:4194304 scale:0} d:{Dec:<nil>} s:4Mi Format:BinarySI} LVCount:3 PVCount:1 MaxLV:0 MaxPV:0 SnapCount:0 MissingPVCount:0 MetadataCount:1 MetadataUsedCount:1 MetadataFree:{i:{value:519680 scale:0} d:{Dec:<nil>} s:519680 Format:DecimalSI} MetadataSize:{i:{value:1044480 scale:0} d:{Dec:<nil>} s:1020Ki Format:BinarySI} Permission:0 AllocationPolicy:0} {Name:lvmvg UUID:uJlqG9-SzzU-6O5j-MMlt-uVjq-AHFi-BX3hoe Size:{i:{value:1073737629696 scale:0} d:{Dec:<nil>} s:1023996Mi Format:BinarySI} Free:{i:{value:1073737629696 scale:0} d:{Dec:<nil>} s:1023996Mi Format:BinarySI} LVCount:0 PVCount:1 MaxLV:0 MaxPV:0 SnapCount:0 MissingPVCount:0 MetadataCount:1 MetadataUsedCount:1 MetadataFree:{i:{value:520704 scale:0} d:{Dec:<nil>} s:520704 Format:DecimalSI} MetadataSize:{i:{value:1044480 scale:0} d:{Dec:<nil>} s:1020Ki Format:BinarySI} Permission:0 AllocationPolicy:0}], required=[{Name:appvg UUID:5gc1Kp-y6uY-d5Ks-XToJ-c0c1-ie3K-Vuwo5G Size:{i:{value:214744170496 scale:0} d:{Dec:<nil>} s: Format:BinarySI} Free:{i:{value:32208060416 scale:0} d:{Dec:<nil>} s: Format:BinarySI} LVCount:2 PVCount:1 MaxLV:0 MaxPV:0 SnapCount:0 MissingPVCount:0 MetadataCount:1 MetadataUsedCount:1 MetadataFree:{i:{value:520192 scale:0} d:{Dec:<nil>} s: Format:BinarySI} 
MetadataSize:{i:{value:1044480 scale:0} d:{Dec:<nil>} s: Format:BinarySI} Permission:0 AllocationPolicy:0} {Name:centos UUID:ftZc0y-rq2X-AUi6-R6P7-Dp98-tb2Z-g5c9Vg Size:{i:{value:61845012480 scale:0} d:{Dec:<nil>} s: Format:BinarySI} Free:{i:{value:4194304 scale:0} d:{Dec:<nil>} s: Format:BinarySI} LVCount:3 PVCount:1 MaxLV:0 MaxPV:0 SnapCount:0 MissingPVCount:0 MetadataCount:1 MetadataUsedCount:1 MetadataFree:{i:{value:519680 scale:0} d:{Dec:<nil>} s: Format:BinarySI} MetadataSize:{i:{value:1044480 scale:0} d:{Dec:<nil>} s: Format:BinarySI} Permission:0 AllocationPolicy:0} {Name:lvmvg UUID:uJlqG9-SzzU-6O5j-MMlt-uVjq-AHFi-BX3hoe Size:{i:{value:1073737629696 scale:0} d:{Dec:<nil>} s: Format:BinarySI} Free:{i:{value:1052262793216 scale:0} d:{Dec:<nil>} s: Format:BinarySI} LVCount:1 PVCount:1 MaxLV:0 MaxPV:0 SnapCount:0 MissingPVCount:0 MetadataCount:1 MetadataUsedCount:1 MetadataFree:{i:{value:520192 scale:0} d:{Dec:<nil>} s: Format:BinarySI} MetadataSize:{i:{value:1044480 scale:0} d:{Dec:<nil>} s: Format:BinarySI} Permission:0 AllocationPolicy:0}]

I1121 19:01:58.903087 1 lvmnode.go:119] lvm node controller: updating node object with &{TypeMeta:{Kind:LVMNode APIVersion:local.openebs.io/v1alpha1} ObjectMeta:{Name:mic101-06 GenerateName: Namespace:lvm SelfLink: UID:be51d8cb-b50b-45e1-84e8-4d0779af27d7 ResourceVersion:171986177 Generation:3 CreationTimestamp:2022-11-21 18:11:49 +0000 UTC DeletionTimestamp:<nil> DeletionGracePeriodSeconds:<nil> Labels:map[] Annotations:map[] OwnerReferences:[{APIVersion:v1 Kind:Node Name:mic101-06 UID:a6d194c4-db2a-44ac-95c5-a4a2bd457659 Controller:0xc000134c08 BlockOwnerDeletion:<nil>}] Finalizers:[] ClusterName: ManagedFields:[{Manager:lvm-driver Operation:Update APIVersion:local.openebs.io/v1alpha1 Time:2022-11-21 18:57:49 +0000 UTC FieldsType:FieldsV1 FieldsV1:{"f:metadata":{"f:ownerReferences":{".":{},"k:{\"uid\":\"a6d194c4-db2a-44ac-95c5-a4a2bd457659\"}":{}}},"f:volumeGroups":{}}}]} VolumeGroups:[{Name:appvg UUID:5gc1Kp-y6uY-d5Ks-XToJ-c0c1-ie3K-Vuwo5G Size:{i:{value:214744170496 scale:0} d:{Dec:<nil>} s: Format:BinarySI} Free:{i:{value:32208060416 scale:0} d:{Dec:<nil>} s: Format:BinarySI} LVCount:2 PVCount:1 MaxLV:0 MaxPV:0 SnapCount:0 MissingPVCount:0 MetadataCount:1 MetadataUsedCount:1 MetadataFree:{i:{value:520192 scale:0} d:{Dec:<nil>} s: Format:BinarySI} MetadataSize:{i:{value:1044480 scale:0} d:{Dec:<nil>} s: Format:BinarySI} Permission:0 AllocationPolicy:0} {Name:centos UUID:ftZc0y-rq2X-AUi6-R6P7-Dp98-tb2Z-g5c9Vg Size:{i:{value:61845012480 scale:0} d:{Dec:<nil>} s: Format:BinarySI} Free:{i:{value:4194304 scale:0} d:{Dec:<nil>} s: Format:BinarySI} LVCount:3 PVCount:1 MaxLV:0 MaxPV:0 SnapCount:0 MissingPVCount:0 MetadataCount:1 MetadataUsedCount:1 MetadataFree:{i:{value:519680 scale:0} d:{Dec:<nil>} s: Format:BinarySI} MetadataSize:{i:{value:1044480 scale:0} d:{Dec:<nil>} s: Format:BinarySI} Permission:0 AllocationPolicy:0} {Name:lvmvg UUID:uJlqG9-SzzU-6O5j-MMlt-uVjq-AHFi-BX3hoe Size:{i:{value:1073737629696 scale:0} d:{Dec:<nil>} s: Format:BinarySI} 
Free:{i:{value:1052262793216 scale:0} d:{Dec:<nil>} s: Format:BinarySI} LVCount:1 PVCount:1 MaxLV:0 MaxPV:0 SnapCount:0 MissingPVCount:0 MetadataCount:1 MetadataUsedCount:1 MetadataFree:{i:{value:520192 scale:0} d:{Dec:<nil>} s: Format:BinarySI} MetadataSize:{i:{value:1044480 scale:0} d:{Dec:<nil>} s: Format:BinarySI} Permission:0 AllocationPolicy:0}]}
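The volume group numbers in the two entries above are consistent with the logical volume having been created at the requested size, so the failure appears to be limited to the mount step. A quick arithmetic check, with all three values copied from the log (the variable names are mine):

```python
GIB = 1024 ** 3

requested = 21474836480      # capacity in the LVMVolume add event
free_before = 1073737629696  # lvmvg Free in the "current" list (LVCount:0)
free_after = 1052262793216   # lvmvg Free in the "required" list (LVCount:1)

consumed = free_before - free_after
print(f"requested: {requested / GIB:.0f} GiB")
print(f"consumed:  {consumed / GIB:.0f} GiB")
```

Both values come out to 20 GiB, matching the single new LV reported for `lvmvg`.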

I1121 19:01:58.911452 1 lvmnode.go:164] Got update event for lvm node lvm/mic101-06

I1121 19:01:58.911482 1 lvmnode.go:123] lvm node controller: updated node object lvm/mic101-06

I1121 19:01:58.911513 1 lvmnode.go:305] Successfully synced 'lvm/mic101-06'

I1121 19:01:58.935029 1 lvmnode.go:305] Successfully synced 'lvm/mic101-06'

I1121 19:02:24.754761 1 grpc.go:72] GRPC call: /csi.v1.Node/NodePublishVolume requests {"target_path":"/var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount","volume_capability":{"AccessType":{"Mount":{"fs_type":"xfs"}},"access_mode":{"mode":1}},"volume_context":{"csi.storage.k8s.io/ephemeral":"false","csi.storage.k8s.io/pod.name":"mongodb-0","csi.storage.k8s.io/pod.namespace":"mongodb","csi.storage.k8s.io/pod.uid":"d47a5fc6-1bd9-45a8-a61c-c97473092a9d","csi.storage.k8s.io/serviceAccount.name":"mongodb","openebs.io/cas-type":"localpv-lvm","openebs.io/volgroup":"lvmvg","storage.kubernetes.io/csiProvisionerIdentity":"1669057199601-8081-local.csi.openebs.io"},"volume_id":"pvc-b4e5b534-300f-460c-ba01-92e312ce6b40"}

E1121 19:02:24.794281 1 mount_linux.go:150] Mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:02:24.794312 1 mount.go:72] lvm: failed to mount volume /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 [xfs] to /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount, error mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:02:24.794331 1 grpc.go:79] GRPC error: rpc error: code = Internal desc = failed to format and mount the volume error: mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

I1121 19:02:58.887829 1 lvmnode.go:305] Successfully synced 'lvm/mic101-06'

I1121 19:03:28.847816 1 grpc.go:72] GRPC call: /csi.v1.Node/NodePublishVolume requests {"target_path":"/var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount","volume_capability":{"AccessType":{"Mount":{"fs_type":"xfs"}},"access_mode":{"mode":1}},"volume_context":{"csi.storage.k8s.io/ephemeral":"false","csi.storage.k8s.io/pod.name":"mongodb-0","csi.storage.k8s.io/pod.namespace":"mongodb","csi.storage.k8s.io/pod.uid":"d47a5fc6-1bd9-45a8-a61c-c97473092a9d","csi.storage.k8s.io/serviceAccount.name":"mongodb","openebs.io/cas-type":"localpv-lvm","openebs.io/volgroup":"lvmvg","storage.kubernetes.io/csiProvisionerIdentity":"1669057199601-8081-local.csi.openebs.io"},"volume_id":"pvc-b4e5b534-300f-460c-ba01-92e312ce6b40"}

E1121 19:03:28.890064 1 mount_linux.go:150] Mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:03:28.890100 1 mount.go:72] lvm: failed to mount volume /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 [xfs] to /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount, error mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:03:28.890126 1 grpc.go:79] GRPC error: rpc error: code = Internal desc = failed to format and mount the volume error: mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

I1121 19:03:58.886290 1 lvmnode.go:305] Successfully synced 'lvm/mic101-06'

I1121 19:04:58.766200 1 lvmnode.go:164] Got update event for lvm node lvm/mic101-06

I1121 19:04:58.790195 1 lvmnode.go:305] Successfully synced 'lvm/mic101-06'

I1121 19:04:58.886993 1 lvmnode.go:305] Successfully synced 'lvm/mic101-06'

I1121 19:05:30.897259 1 grpc.go:72] GRPC call: /csi.v1.Node/NodePublishVolume requests {"target_path":"/var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount","volume_capability":{"AccessType":{"Mount":{"fs_type":"xfs"}},"access_mode":{"mode":1}},"volume_context":{"csi.storage.k8s.io/ephemeral":"false","csi.storage.k8s.io/pod.name":"mongodb-0","csi.storage.k8s.io/pod.namespace":"mongodb","csi.storage.k8s.io/pod.uid":"d47a5fc6-1bd9-45a8-a61c-c97473092a9d","csi.storage.k8s.io/serviceAccount.name":"mongodb","openebs.io/cas-type":"localpv-lvm","openebs.io/volgroup":"lvmvg","storage.kubernetes.io/csiProvisionerIdentity":"1669057199601-8081-local.csi.openebs.io"},"volume_id":"pvc-b4e5b534-300f-460c-ba01-92e312ce6b40"}

E1121 19:05:30.938835 1 mount_linux.go:150] Mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:05:30.938865 1 mount.go:72] lvm: failed to mount volume /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 [xfs] to /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount, error mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:05:30.938892 1 grpc.go:79] GRPC error: rpc error: code = Internal desc = failed to format and mount the volume error: mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

I1121 19:05:58.884792 1 lvmnode.go:305] Successfully synced 'lvm/mic101-06'

I1121 19:06:58.891057 1 lvmnode.go:305] Successfully synced 'lvm/mic101-06'

I1121 19:07:32.953618 1 grpc.go:72] GRPC call: /csi.v1.Node/NodePublishVolume requests {"target_path":"/var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount","volume_capability":{"AccessType":{"Mount":{"fs_type":"xfs"}},"access_mode":{"mode":1}},"volume_context":{"csi.storage.k8s.io/ephemeral":"false","csi.storage.k8s.io/pod.name":"mongodb-0","csi.storage.k8s.io/pod.namespace":"mongodb","csi.storage.k8s.io/pod.uid":"d47a5fc6-1bd9-45a8-a61c-c97473092a9d","csi.storage.k8s.io/serviceAccount.name":"mongodb","openebs.io/cas-type":"localpv-lvm","openebs.io/volgroup":"lvmvg","storage.kubernetes.io/csiProvisionerIdentity":"1669057199601-8081-local.csi.openebs.io"},"volume_id":"pvc-b4e5b534-300f-460c-ba01-92e312ce6b40"}

E1121 19:07:32.994708 1 mount_linux.go:150] Mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:07:32.994744 1 mount.go:72] lvm: failed to mount volume /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 [xfs] to /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount, error mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:07:32.994765 1 grpc.go:79] GRPC error: rpc error: code = Internal desc = failed to format and mount the volume error: mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

I1121 19:07:58.889021 1 lvmnode.go:305] Successfully synced 'lvm/mic101-06'

I1121 19:08:58.887854 1 lvmnode.go:305] Successfully synced 'lvm/mic101-06'

I1121 19:09:35.000722 1 grpc.go:72] GRPC call: /csi.v1.Node/NodePublishVolume requests {"target_path":"/var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount","volume_capability":{"AccessType":{"Mount":{"fs_type":"xfs"}},"access_mode":{"mode":1}},"volume_context":{"csi.storage.k8s.io/ephemeral":"false","csi.storage.k8s.io/pod.name":"mongodb-0","csi.storage.k8s.io/pod.namespace":"mongodb","csi.storage.k8s.io/pod.uid":"d47a5fc6-1bd9-45a8-a61c-c97473092a9d","csi.storage.k8s.io/serviceAccount.name":"mongodb","openebs.io/cas-type":"localpv-lvm","openebs.io/volgroup":"lvmvg","storage.kubernetes.io/csiProvisionerIdentity":"1669057199601-8081-local.csi.openebs.io"},"volume_id":"pvc-b4e5b534-300f-460c-ba01-92e312ce6b40"}

E1121 19:09:35.040308 1 mount_linux.go:150] Mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:09:35.040336 1 mount.go:72] lvm: failed to mount volume /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 [xfs] to /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount, error mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:09:35.040354 1 grpc.go:79] GRPC error: rpc error: code = Internal desc = failed to format and mount the volume error: mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

I1121 19:09:58.766142 1 lvmnode.go:164] Got update event for lvm node lvm/mic101-06

I1121 19:09:58.790145 1 lvmnode.go:305] Successfully synced 'lvm/mic101-06'

I1121 19:09:58.888949 1 lvmnode.go:305] Successfully synced 'lvm/mic101-06'

I1121 19:10:58.891155 1 lvmnode.go:305] Successfully synced 'lvm/mic101-06'

I1121 19:11:37.128692 1 grpc.go:72] GRPC call: /csi.v1.Node/NodePublishVolume requests {"target_path":"/var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount","volume_capability":{"AccessType":{"Mount":{"fs_type":"xfs"}},"access_mode":{"mode":1}},"volume_context":{"csi.storage.k8s.io/ephemeral":"false","csi.storage.k8s.io/pod.name":"mongodb-0","csi.storage.k8s.io/pod.namespace":"mongodb","csi.storage.k8s.io/pod.uid":"d47a5fc6-1bd9-45a8-a61c-c97473092a9d","csi.storage.k8s.io/serviceAccount.name":"mongodb","openebs.io/cas-type":"localpv-lvm","openebs.io/volgroup":"lvmvg","storage.kubernetes.io/csiProvisionerIdentity":"1669057199601-8081-local.csi.openebs.io"},"volume_id":"pvc-b4e5b534-300f-460c-ba01-92e312ce6b40"}

E1121 19:11:37.170337 1 mount_linux.go:150] Mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:11:37.170404 1 mount.go:72] lvm: failed to mount volume /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 [xfs] to /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount, error mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

E1121 19:11:37.170425 1 grpc.go:79] GRPC error: rpc error: code = Internal desc = failed to format and mount the volume error: mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40 /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount

Output: mount: /var/lib/kubelet/pods/d47a5fc6-1bd9-45a8-a61c-c97473092a9d/volumes/kubernetes.io~csi/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40, missing codepage or helper program, or other error.

NAME READY STATUS RESTARTS AGE

openebs-lvm-controller-0 5/5 Running 0 15m

openebs-lvm-node-cc4lh 2/2 Running 0 15m

openebs-lvm-node-grj6p 2/2 Running 0 15m

openebs-lvm-node-mp9f6 2/2 Running 0 15m

openebs-lvm-node-p8nmh 2/2 Running 0 15m

openebs-lvm-node-t7nvj 2/2 Running 0 15m

openebs-lvm-node-xfg26 2/2 Running 0 15m

openebs-lvm-node-z5l7d 2/2 Running 0 15m

openebs-lvm-node-zcsvh 2/2 Running 0 15m

kubectl get lvmvol -A -o yaml

apiVersion: v1
items:
- apiVersion: local.openebs.io/v1alpha1
  kind: LVMVolume
  metadata:
    creationTimestamp: "2022-11-21T19:01:19Z"
    finalizers:
    - lvm.openebs.io/finalizer
    generation: 3
    labels:
      kubernetes.io/nodename: mic101-06
    name: pvc-b4e5b534-300f-460c-ba01-92e312ce6b40
    namespace: lvm
    resourceVersion: "171987513"
    uid: f95e7f84-ce74-484d-b4d5-d81352d885d3
  spec:
    capacity: "21474836480"
    ownerNodeID: mic101-06
    shared: "no"
    thinProvision: "no"
    vgPattern: ^lvmvg$
    volGroup: lvmvg
  status:
    state: Ready
- apiVersion: local.openebs.io/v1alpha1
  kind: LVMVolume
  metadata:
    creationTimestamp: "2022-11-21T18:11:56Z"
    finalizers:
    - lvm.openebs.io/finalizer
    generation: 3
    labels:
      kubernetes.io/nodename: mic101-00
    name: pvc-f0e890f7-ce1f-4b8f-a55b-a92ba03cacef
    namespace: lvm
    resourceVersion: "171974076"
    uid: a1aad737-100f-41d7-bdb6-96d5d3672ab9
  spec:
    capacity: "214748364800"
    ownerNodeID: mic101-00
    shared: "no"
    thinProvision: "no"
    vgPattern: ^lvmvg$
    volGroup: lvmvg
  status:
    state: Ready
kind: List
metadata:
  resourceVersion: ""

Anything else you would like to add:

I saw that the volume was being mounted on node mic101-06, so I did some debugging there.

If I manually format the new volume as xfs, it mounts successfully in the pod.

NAME READY STATUS RESTARTS AGE

mongodb-0 0/2 Init:0/1 0 30m

mongodb-arbiter-0 1/1 Running 1 (92s ago) 4m34s

From host node:

[root@mic101-06 mnt]# mount /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40 /mnt/log/

mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40,

missing codepage or helper program, or other error

In some cases useful info is found in syslog - try

dmesg | tail or so.
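
For anyone comparing paths: the `/dev/mapper/...` device used above is the same logical volume that the driver logs as `/dev/lvmvg/pvc-b4e5b534-300f-460c-ba01-92e312ce6b40`. Device-mapper joins the VG and LV names with a single hyphen and doubles every hyphen inside either name. A minimal sketch of that mapping (the function name `dm_mapper_path` is made up for illustration and is not part of the driver):

```python
def dm_mapper_path(vg: str, lv: str) -> str:
    """Return the /dev/mapper path for a volume group / logical volume pair.

    Device-mapper escapes each hyphen inside the VG or LV name by doubling it,
    then joins the two escaped names with a single hyphen.
    """
    def escape(name: str) -> str:
        return name.replace("-", "--")

    return f"/dev/mapper/{escape(vg)}-{escape(lv)}"


# The VG and LV names are taken from the driver log in this report.
print(dm_mapper_path("lvmvg", "pvc-b4e5b534-300f-460c-ba01-92e312ce6b40"))
```

The printed path should match the device passed to `mount` above.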

[root@mic101-06 mnt]# mkfs.xfs /dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40 -f

meta-data=/dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40 isize=512 agcount=4, agsize=1310720 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=5242880, imaxpct=25

= sunit=0 swidth=0 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=0 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0
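
Before forcing a reformat like the one above, it may be useful to record what filesystem signature, if any, the device already carries. That would help tell a device with no recognisable filesystem apart from one holding an XFS filesystem that the host cannot mount; the output in this report does not establish which of these applies. A rough sketch, assuming `blkid` from util-linux is available on the node and the script runs as root (the helper name `detect_fs_type` and its `run` parameter are hypothetical, added only so the function can be exercised without a real device):

```python
import subprocess
from typing import Callable, Optional


def detect_fs_type(device: str, run: Callable = subprocess.run) -> Optional[str]:
    """Return the filesystem type blkid reports for `device`, or None if it finds none."""
    result = run(
        # -p probes the device directly instead of relying on the blkid cache.
        ["blkid", "-p", "-o", "value", "-s", "TYPE", device],
        capture_output=True,
        text=True,
    )
    fs_type = result.stdout.strip()
    # blkid exits non-zero when it cannot identify a signature on the device.
    if result.returncode != 0 or not fs_type:
        return None
    return fs_type
```

On the node, `detect_fs_type("/dev/mapper/lvmvg-pvc--b4e5b534--300f--460c--ba01--92e312ce6b40")` returning `None` would suggest no recognisable filesystem signature on the volume, while `"xfs"` would point toward a compatibility problem between the filesystem and the host instead. Either result is worth pairing with the `dmesg | tail` output that `mount` recommends.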

And back to kubectl:

NAME READY STATUS RESTARTS AGE

mongodb-0 2/2 Running 0 41m

mongodb-1 0/2 Init:0/1 0 24m

mongodb-arbiter-0 1/1 Running 4 (28m ago) 41m

--------------

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 24m default-scheduler 0/8 nodes are available: 8 pod has unbound immediate PersistentVolumeClaims. preemption: 0/8 nodes are available: 8 Preemption is not helpful for scheduling.

Normal Scheduled 24m default-scheduler Successfully assigned mongodb/mongodb-1 to mic101-01

Warning FailedMount 15m (x2 over 18m) kubelet Unable to attach or mount volumes: unmounted volumes=[datadir], unattached volumes=[scripts kube-api-access-2vrfj datadir common-scripts]: timed out waiting for the condition

Warning FailedMount 4m40s (x6 over 22m) kubelet Unable to attach or mount volumes: unmounted volumes=[datadir], unattached volumes=[kube-api-access-2vrfj datadir common-scripts scripts]: timed out waiting for the condition

Warning FailedMount 2m26s (x2 over 9m13s) kubelet Unable to attach or mount volumes: unmounted volumes=[datadir], unattached volumes=[common-scripts scripts kube-api-access-2vrfj datadir]: timed out waiting for the condition

Warning FailedMount 2m17s (x19 over 24m) kubelet MountVolume.SetUp failed for volume "pvc-01b6005c-3728-40ae-be05-88dcc8144c9b" : rpc error: code = Internal desc = failed to format and mount the volume error: mount failed: exit status 32

Mounting command: mount

Mounting arguments: -t xfs -o defaults /dev/lvmvg/pvc-01b6005c-3728-40ae-be05-88dcc8144c9b /var/lib/kubelet/pods/c38073a8-aafc-440f-9dcd-39530bb2ebbc/volumes/kubernetes.io~csi/pvc-01b6005c-3728-40ae-be05-88dcc8144c9b/mount

Output: mount: /var/lib/kubelet/pods/c38073a8-aafc-440f-9dcd-39530bb2ebbc/volumes/kubernetes.io~csi/pvc-01b6005c-3728-40ae-be05-88dcc8144c9b/mount: wrong fs type, bad option, bad superblock on /dev/mapper/lvmvg-pvc--01b6005c--3728--40ae--be05--88dcc8144c9b, missing codepage or helper program, or other error.

--------------

Environment:

- LVM Driver version:

image: openebs/lvm-driver:ci

- Kubernetes version (use `kubectl version`):

$ kubectl version

WARNING: This version information is deprecated and will be replaced with the output from kubectl version --short. Use --output=yaml|json to get the full version.

Client Version: version.Info{Major:"1", Minor:"25", GitVersion:"v1.25.3", GitCommit:"434bfd82814af038ad94d62ebe59b133fcb50506", GitTreeState:"clean", BuildDate:"2022-10-12T10:47:25Z", GoVersion:"go1.19.2", Compiler:"gc", Platform:"darwin/amd64"}

Kustomize Version: v4.5.7

Server Version: version.Info{Major:"1", Minor:"25", GitVersion:"v1.25.4", GitCommit:"872a965c6c6526caa949f0c6ac028ef7aff3fb78", GitTreeState:"clean", BuildDate:"2022-11-09T13:29:58Z", GoVersion:"go1.19.3", Compiler:"gc", Platform:"linux/amd64"}

- Kubernetes installer & version: [Kubespray](https://github.com/kubernetes-sigs/kubespray) master branch

- Cloud provider or hardware configuration:

Bare-metal K8S. VMWare ESXi. CentOS 7.

- OS (e.g. from `/etc/os-release`):

NAME="CentOS Linux"

VERSION="7 (Core)"

ID="centos"

ID_LIKE="rhel fedora"

VERSION_ID="7"

PRETTY_NAME="CentOS Linux 7 (Core)"

ANSI_COLOR="0;31"

CPE_NAME="cpe:/o:centos:centos:7"

HOME_URL="https://www.centos.org/"

BUG_REPORT_URL="https://bugs.centos.org/"

CENTOS_MANTISBT_PROJECT="CentOS-7"

CENTOS_MANTISBT_PROJECT_VERSION="7"

REDHAT_SUPPORT_PRODUCT="centos"

REDHAT_SUPPORT_PRODUCT_VERSION="7"

- Host LVM Version:

LVM version: 2.02.187(2)-RHEL7 (2020-03-24)