Ⅰ. Issue Description

I checked out the repo and ran `make`, and it seems to fail out of the box. Am I missing something?

Ⅱ. Describe what happened

lee@ubuntu:~/workspace/picloud/open-local$ make

go test -v ./...

? github.com/alibaba/open-local/cmd [no test files]

? github.com/alibaba/open-local/cmd/agent [no test files]

? github.com/alibaba/open-local/cmd/controller [no test files]

? github.com/alibaba/open-local/cmd/csi [no test files]

? github.com/alibaba/open-local/cmd/doc [no test files]

time="2022-06-08T12:15:26+02:00" level=info msg="test noResyncPeriodFunc"

time="2022-06-08T12:15:26+02:00" level=info msg="test noResyncPeriodFunc"

time="2022-06-08T12:15:26+02:00" level=info msg="test noResyncPeriodFunc"

time="2022-06-08T12:15:26+02:00" level=info msg="Waiting for informer caches to sync"

time="2022-06-08T12:15:26+02:00" level=info msg="starting http server on port 23000"

time="2022-06-08T12:15:26+02:00" level=info msg="all informer caches are synced"

=== RUN TestVGWithName

time="2022-06-08T12:15:26+02:00" level=info msg="predicating pod testpod with nodes [[node-192.168.0.1 node-192.168.0.2 node-192.168.0.3 node-192.168.0.4]]"

time="2022-06-08T12:15:26+02:00" level=info msg="predicating pod default/testpod with node node-192.168.0.1"

time="2022-06-08T12:15:26+02:00" level=info msg="got pvc default/pvc-vg as lvm pvc"

time="2022-06-08T12:15:26+02:00" level=info msg="allocating lvm volume for pod default/testpod"

time="2022-06-08T12:15:26+02:00" level=error msg="Insufficient LVM storage on node node-192.168.0.1, vg is ssd, pvc requested 150Gi, vg used 0, vg capacity 100Gi"

time="2022-06-08T12:15:26+02:00" level=info msg="fits: false,failReasons: [Insufficient LVM storage on node node-192.168.0.1, vg is ssd, pvc requested 150Gi, vg used 0, vg capacity 100Gi], err: Insufficient LVM storage on node node-192.168.0.1, vg is ssd, pvc requested 150Gi, vg used 0, vg capacity 100Gi"

time="2022-06-08T12:15:26+02:00" level=info msg="pod=default/testpod, node=node-192.168.0.1,fits: false,failReasons: [Insufficient LVM storage on node node-192.168.0.1, vg is ssd, pvc requested 150Gi, vg used 0, vg capacity 100Gi], err: <nil>"

time="2022-06-08T12:15:26+02:00" level=info msg="node node-192.168.0.1 is not suitable for pod default/testpod, reason: [Insufficient LVM storage on node node-192.168.0.1, vg is ssd, pvc requested 150Gi, vg used 0, vg capacity 100Gi] "

time="2022-06-08T12:15:26+02:00" level=info msg="predicating pod default/testpod with node node-192.168.0.2"

time="2022-06-08T12:15:26+02:00" level=info msg="got pvc default/pvc-vg as lvm pvc"

time="2022-06-08T12:15:26+02:00" level=info msg="allocating lvm volume for pod default/testpod"

time="2022-06-08T12:15:26+02:00" level=info msg="node node-192.168.0.2 is capable of lvm 1 pvcs"

time="2022-06-08T12:15:26+02:00" level=info msg="got pvc default/pvc-vg as lvm pvc"

time="2022-06-08T12:15:26+02:00" level=info msg="fits: true,failReasons: [], err: <nil>"

time="2022-06-08T12:15:26+02:00" level=info msg="pod=default/testpod, node=node-192.168.0.2,fits: true,failReasons: [], err: <nil>"

time="2022-06-08T12:15:26+02:00" level=info msg="predicating pod default/testpod with node node-192.168.0.3"

time="2022-06-08T12:15:26+02:00" level=info msg="got pvc default/pvc-vg as lvm pvc"

time="2022-06-08T12:15:26+02:00" level=info msg="allocating lvm volume for pod default/testpod"

time="2022-06-08T12:15:26+02:00" level=info msg="node node-192.168.0.3 is capable of lvm 1 pvcs"

time="2022-06-08T12:15:26+02:00" level=info msg="got pvc default/pvc-vg as lvm pvc"

time="2022-06-08T12:15:26+02:00" level=info msg="fits: true,failReasons: [], err: <nil>"

time="2022-06-08T12:15:26+02:00" level=info msg="pod=default/testpod, node=node-192.168.0.3,fits: true,failReasons: [], err: <nil>"

time="2022-06-08T12:15:26+02:00" level=info msg="predicating pod default/testpod with node node-192.168.0.4"

time="2022-06-08T12:15:26+02:00" level=info msg="got pvc default/pvc-vg as lvm pvc"

time="2022-06-08T12:15:26+02:00" level=info msg="allocating lvm volume for pod default/testpod"

time="2022-06-08T12:15:26+02:00" level=error msg="no vg(LVM) named ssd in node node-192.168.0.4"

time="2022-06-08T12:15:26+02:00" level=info msg="fits: false,failReasons: [no vg(LVM) named ssd in node node-192.168.0.4], err: no vg(LVM) named ssd in node node-192.168.0.4"

time="2022-06-08T12:15:26+02:00" level=info msg="pod=default/testpod, node=node-192.168.0.4,fits: false,failReasons: [no vg(LVM) named ssd in node node-192.168.0.4], err: <nil>"

time="2022-06-08T12:15:26+02:00" level=info msg="node node-192.168.0.4 is not suitable for pod default/testpod, reason: [no vg(LVM) named ssd in node node-192.168.0.4] "

unexpected fault address 0x0

fatal error: fault

[signal SIGSEGV: segmentation violation code=0x80 addr=0x0 pc=0x46845f]

goroutine 91 [running]:

runtime.throw({0x178205e?, 0x18?})

/usr/local/go/src/runtime/panic.go:992 +0x71 fp=0xc0004d71e8 sp=0xc0004d71b8 pc=0x4380b1

runtime.sigpanic()

/usr/local/go/src/runtime/signal_unix.go:825 +0x305 fp=0xc0004d7238 sp=0xc0004d71e8 pc=0x44e485

aeshashbody()

/usr/local/go/src/runtime/asm_amd64.s:1343 +0x39f fp=0xc0004d7240 sp=0xc0004d7238 pc=0x46845f

runtime.mapiternext(0xc000788780)

/usr/local/go/src/runtime/map.go:934 +0x2cb fp=0xc0004d72b0 sp=0xc0004d7240 pc=0x411beb

runtime.mapiterinit(0x0?, 0x8?, 0x1?)

/usr/local/go/src/runtime/map.go:861 +0x228 fp=0xc0004d72d0 sp=0xc0004d72b0 pc=0x4118c8

reflect.mapiterinit(0xc000039cf8?, 0xc0004d7358?, 0x461365?)

/usr/local/go/src/runtime/map.go:1373 +0x19 fp=0xc0004d72f8 sp=0xc0004d72d0 pc=0x464b79

github.com/modern-go/reflect2.(*UnsafeMapType).UnsafeIterate(...)

/home/lee/workspace/picloud/open-local/vendor/github.com/modern-go/reflect2/unsafe_map.go:112

github.com/json-iterator/go.(*sortKeysMapEncoder).Encode(0xc00058f230, 0xc000497f00, 0xc000039ce0)

/home/lee/workspace/picloud/open-local/vendor/github.com/json-iterator/go/reflect_map.go:291 +0x225 fp=0xc0004d7468 sp=0xc0004d72f8 pc=0x8553e5

github.com/json-iterator/go.(*structFieldEncoder).Encode(0xc00058f350, 0x1436da0?, 0xc000039ce0)

/home/lee/workspace/picloud/open-local/vendor/github.com/json-iterator/go/reflect_struct_encoder.go:110 +0x56 fp=0xc0004d74e0 sp=0xc0004d7468 pc=0x862b36

github.com/json-iterator/go.(*structEncoder).Encode(0xc00058f3e0, 0x0?, 0xc000039ce0)

/home/lee/workspace/picloud/open-local/vendor/github.com/json-iterator/go/reflect_struct_encoder.go:158 +0x765 fp=0xc0004d75c8 sp=0xc0004d74e0 pc=0x863545

github.com/json-iterator/go.(*OptionalEncoder).Encode(0xc00013bb80?, 0x0?, 0x0?)

/home/lee/workspace/picloud/open-local/vendor/github.com/json-iterator/go/reflect_optional.go:70 +0xa4 fp=0xc0004d7618 sp=0xc0004d75c8 pc=0x85a744

github.com/json-iterator/go.(*onePtrEncoder).Encode(0xc0004b3210, 0xc000497ef0, 0xc000497f50?)

/home/lee/workspace/picloud/open-local/vendor/github.com/json-iterator/go/reflect.go:219 +0x82 fp=0xc0004d7650 sp=0xc0004d7618 pc=0x84d7c2

github.com/json-iterator/go.(*Stream).WriteVal(0xc000039ce0, {0x158a3e0, 0xc000497ef0})

/home/lee/workspace/picloud/open-local/vendor/github.com/json-iterator/go/reflect.go:98 +0x158 fp=0xc0004d76c0 sp=0xc0004d7650 pc=0x84cad8

github.com/json-iterator/go.(*frozenConfig).Marshal(0xc00013bb80, {0x158a3e0, 0xc000497ef0})

/home/lee/workspace/picloud/open-local/vendor/github.com/json-iterator/go/config.go:299 +0xc9 fp=0xc0004d7758 sp=0xc0004d76c0 pc=0x843d89

github.com/alibaba/open-local/pkg/scheduler/server.PredicateRoute.func1({0x19bfee0, 0xc00019c080}, 0xc000318000, {0x203000?, 0xc00062b928?, 0xc00062b84d?})

/home/lee/workspace/picloud/open-local/pkg/scheduler/server/routes.go:83 +0x326 fp=0xc0004d7878 sp=0xc0004d7758 pc=0x132d5e6

github.com/alibaba/open-local/pkg/scheduler/server.DebugLogging.func1({0x19cafb0?, 0xc0005a80e0}, 0xc000056150?, {0x0, 0x0, 0x0})

/home/lee/workspace/picloud/open-local/pkg/scheduler/server/routes.go:217 +0x267 fp=0xc0004d7988 sp=0xc0004d7878 pc=0x132e4a7

github.com/julienschmidt/httprouter.(*Router).ServeHTTP(0xc0000b0de0, {0x19cafb0, 0xc0005a80e0}, 0xc000318000)

/home/lee/workspace/picloud/open-local/vendor/github.com/julienschmidt/httprouter/router.go:387 +0x82b fp=0xc0004d7a98 sp=0xc0004d7988 pc=0x12d61ab

net/http.serverHandler.ServeHTTP({0x19bc700?}, {0x19cafb0, 0xc0005a80e0}, 0xc000318000)

/usr/local/go/src/net/http/server.go:2916 +0x43b fp=0xc0004d7b58 sp=0xc0004d7a98 pc=0x7e87fb

net/http.(*conn).serve(0xc0001da3c0, {0x19cbab0, 0xc0001b68a0})

/usr/local/go/src/net/http/server.go:1966 +0x5d7 fp=0xc0004d7fb8 sp=0xc0004d7b58 pc=0x7e3cb7

net/http.(*Server).Serve.func3()

/usr/local/go/src/net/http/server.go:3071 +0x2e fp=0xc0004d7fe0 sp=0xc0004d7fb8 pc=0x7e914e

runtime.goexit()

/usr/local/go/src/runtime/asm_amd64.s:1571 +0x1 fp=0xc0004d7fe8 sp=0xc0004d7fe0 pc=0x46b061

created by net/http.(*Server).Serve

/usr/local/go/src/net/http/server.go:3071 +0x4db

goroutine 1 [chan receive]:

testing.(*T).Run(0xc000103ba0, {0x178cc75?, 0x516ac5?}, 0x18541b0)

/usr/local/go/src/testing/testing.go:1487 +0x37a

testing.runTests.func1(0xc0001b69c0?)

/usr/local/go/src/testing/testing.go:1839 +0x6e

testing.tRunner(0xc000103ba0, 0xc00064bcd8)

/usr/local/go/src/testing/testing.go:1439 +0x102

testing.runTests(0xc00050a0a0?, {0x2540700, 0x7, 0x7}, {0x7fa22c405a68?, 0x40?, 0x2557740?})

/usr/local/go/src/testing/testing.go:1837 +0x457

testing.(*M).Run(0xc00050a0a0)

/usr/local/go/src/testing/testing.go:1719 +0x5d9

main.main()

_testmain.go:59 +0x1aa

goroutine 19 [chan receive]:

k8s.io/klog/v2.(*loggingT).flushDaemon(0x0?)

/home/lee/workspace/picloud/open-local/vendor/k8s.io/klog/v2/klog.go:1169 +0x6a

created by k8s.io/klog/v2.init.0

/home/lee/workspace/picloud/open-local/vendor/k8s.io/klog/v2/klog.go:417 +0xf6

goroutine 92 [IO wait]:

internal/poll.runtime_pollWait(0x7fa204607b38, 0x72)

/usr/local/go/src/runtime/netpoll.go:302 +0x89

internal/poll.(*pollDesc).wait(0xc0003c6100?, 0xc00050c2e1?, 0x0)

/usr/local/go/src/internal/poll/fd_poll_runtime.go:83 +0x32

internal/poll.(*pollDesc).waitRead(...)

/usr/local/go/src/internal/poll/fd_poll_runtime.go:88

internal/poll.(*FD).Read(0xc0003c6100, {0xc00050c2e1, 0x1, 0x1})

/usr/local/go/src/internal/poll/fd_unix.go:167 +0x25a

net.(*netFD).Read(0xc0003c6100, {0xc00050c2e1?, 0xc000613628?, 0xc00061e000?})

/usr/local/go/src/net/fd_posix.go:55 +0x29

net.(*conn).Read(0xc000612180, {0xc00050c2e1?, 0xc0005147a0?, 0x985846?})

/usr/local/go/src/net/net.go:183 +0x45

net/http.(*connReader).backgroundRead(0xc00050c2d0)

/usr/local/go/src/net/http/server.go:672 +0x3f

created by net/http.(*connReader).startBackgroundRead

/usr/local/go/src/net/http/server.go:668 +0xca

goroutine 43 [select]:

net/http.(*persistConn).roundTrip(0xc00056a360, 0xc0006420c0)

/usr/local/go/src/net/http/transport.go:2620 +0x974

net/http.(*Transport).roundTrip(0x25410e0, 0xc0004c6600)

/usr/local/go/src/net/http/transport.go:594 +0x7c9

net/http.(*Transport).RoundTrip(0x40f405?, 0x19b3900?)

/usr/local/go/src/net/http/roundtrip.go:17 +0x19

net/http.send(0xc0004c6600, {0x19b3900, 0x25410e0}, {0x172b2a0?, 0x178c601?, 0x0?})

/usr/local/go/src/net/http/client.go:252 +0x5d8

net/http.(*Client).send(0x2556ec0, 0xc0004c6600, {0xd?, 0x1788f4f?, 0x0?})

/usr/local/go/src/net/http/client.go:176 +0x9b

net/http.(*Client).do(0x2556ec0, 0xc0004c6600)

/usr/local/go/src/net/http/client.go:725 +0x8f5

net/http.(*Client).Do(...)

/usr/local/go/src/net/http/client.go:593

net/http.(*Client).Post(0x17b1437?, {0xc000492480?, 0xc00054bdc8?}, {0x178f761, 0x10}, {0x19b0fe0?, 0xc0001b6a20?})

/usr/local/go/src/net/http/client.go:858 +0x148

net/http.Post(...)

/usr/local/go/src/net/http/client.go:835

github.com/alibaba/open-local/cmd/scheduler.predicateFunc(0xc0000f9800, {0x253ebe0, 0x4, 0x4})

/home/lee/workspace/picloud/open-local/cmd/scheduler/extender_test.go:348 +0x1e8

github.com/alibaba/open-local/cmd/scheduler.TestVGWithName(0x4082b9?)

/home/lee/workspace/picloud/open-local/cmd/scheduler/extender_test.go:135 +0x17e

testing.tRunner(0xc000103d40, 0x18541b0)

/usr/local/go/src/testing/testing.go:1439 +0x102

created by testing.(*T).Run

/usr/local/go/src/testing/testing.go:1486 +0x35f

goroutine 87 [IO wait]:

internal/poll.runtime_pollWait(0x7fa204607d18, 0x72)

/usr/local/go/src/runtime/netpoll.go:302 +0x89

internal/poll.(*pollDesc).wait(0xc00003a580?, 0xc000064000?, 0x0)

/usr/local/go/src/internal/poll/fd_poll_runtime.go:83 +0x32

internal/poll.(*pollDesc).waitRead(...)

/usr/local/go/src/internal/poll/fd_poll_runtime.go:88

internal/poll.(*FD).Accept(0xc00003a580)

/usr/local/go/src/internal/poll/fd_unix.go:614 +0x22c

net.(*netFD).accept(0xc00003a580)

/usr/local/go/src/net/fd_unix.go:172 +0x35

net.(*TCPListener).accept(0xc0001301e0)

/usr/local/go/src/net/tcpsock_posix.go:139 +0x28

net.(*TCPListener).Accept(0xc0001301e0)

/usr/local/go/src/net/tcpsock.go:288 +0x3d

net/http.(*Server).Serve(0xc0000dc2a0, {0x19cada0, 0xc0001301e0})

/usr/local/go/src/net/http/server.go:3039 +0x385

net/http.(*Server).ListenAndServe(0xc0000dc2a0)

/usr/local/go/src/net/http/server.go:2968 +0x7d

net/http.ListenAndServe(...)

/usr/local/go/src/net/http/server.go:3222

github.com/alibaba/open-local/pkg/scheduler/server.(*ExtenderServer).InitRouter.func1()

/home/lee/workspace/picloud/open-local/pkg/scheduler/server/web.go:185 +0x157

created by github.com/alibaba/open-local/pkg/scheduler/server.(*ExtenderServer).InitRouter

/home/lee/workspace/picloud/open-local/pkg/scheduler/server/web.go:182 +0x478

goroutine 49 [IO wait]:

internal/poll.runtime_pollWait(0x7fa204607c28, 0x72)

/usr/local/go/src/runtime/netpoll.go:302 +0x89

internal/poll.(*pollDesc).wait(0xc00003a800?, 0xc000639000?, 0x0)

/usr/local/go/src/internal/poll/fd_poll_runtime.go:83 +0x32

internal/poll.(*pollDesc).waitRead(...)

/usr/local/go/src/internal/poll/fd_poll_runtime.go:88

internal/poll.(*FD).Read(0xc00003a800, {0xc000639000, 0x1000, 0x1000})

/usr/local/go/src/internal/poll/fd_unix.go:167 +0x25a

net.(*netFD).Read(0xc00003a800, {0xc000639000?, 0x17814b4?, 0x0?})

/usr/local/go/src/net/fd_posix.go:55 +0x29

net.(*conn).Read(0xc000495a38, {0xc000639000?, 0x19ce530?, 0xc000370ea0?})

/usr/local/go/src/net/net.go:183 +0x45

net/http.(*persistConn).Read(0xc00056a360, {0xc000639000?, 0x40757d?, 0x60?})

/usr/local/go/src/net/http/transport.go:1929 +0x4e

bufio.(*Reader).fill(0xc000522a80)

/usr/local/go/src/bufio/bufio.go:106 +0x103

bufio.(*Reader).Peek(0xc000522a80, 0x1)

/usr/local/go/src/bufio/bufio.go:144 +0x5d

net/http.(*persistConn).readLoop(0xc00056a360)

/usr/local/go/src/net/http/transport.go:2093 +0x1ac

created by net/http.(*Transport).dialConn

/usr/local/go/src/net/http/transport.go:1750 +0x173e

goroutine 178 [select]:

net/http.(*persistConn).writeLoop(0xc00056a360)

/usr/local/go/src/net/http/transport.go:2392 +0xf5

created by net/http.(*Transport).dialConn

/usr/local/go/src/net/http/transport.go:1751 +0x1791

FAIL github.com/alibaba/open-local/cmd/scheduler 0.177s

? github.com/alibaba/open-local/cmd/version [no test files]

? github.com/alibaba/open-local/pkg [no test files]

? github.com/alibaba/open-local/pkg/agent/common [no test files]

=== RUN TestNewAgent

Ⅲ. Describe what you expected to happen

`make` should run through without errors.

Ⅳ. How to reproduce it (as minimally and precisely as possible)

- git clone https://github.com/alibaba/open-local.git

- cd open-local

- make

- `make` fails with the log shown above
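
The faulting frames run from json-iterator's `sortKeysMapEncoder` through `reflect2.(*UnsafeMapType).UnsafeIterate` into `reflect.mapiterinit`, all from the copies under `vendor/`. Older `github.com/modern-go/reflect2` releases have been reported to fault in exactly this way when built with Go 1.18. They link directly to runtime map-iterator internals whose signature changed in that release, and reflect2 v1.0.2 is reportedly the first version with Go 1.18 support. Treat this as a hypothesis for this report, not a confirmed cause. A minimal sketch for checking which versions are vendored follows. It assumes the `vendor/modules.txt` file written by `go mod vendor`, whose module lines have the form `# <path> <version>`, and it must be run from the repository root. The helper `vendoredVersion` is hypothetical.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"strings"
)

// vendoredVersion scans the contents of vendor/modules.txt and returns the
// version recorded for modulePath. Module lines look like
// "# github.com/modern-go/reflect2 v1.2.3"; lines starting with "##" carry
// annotations (e.g. "## explicit") and are skipped.
func vendoredVersion(modulesTxt, modulePath string) (string, bool) {
	sc := bufio.NewScanner(strings.NewReader(modulesTxt))
	for sc.Scan() {
		line := sc.Text()
		if !strings.HasPrefix(line, "# ") {
			continue
		}
		fields := strings.Fields(line)
		// fields[0] is "#", fields[1] the module path, fields[2] its version
		// (or "=>" for a versionless local replacement, which we skip).
		if len(fields) >= 3 && fields[1] == modulePath && fields[2] != "=>" {
			return fields[2], true
		}
	}
	return "", false
}

func main() {
	data, err := os.ReadFile("vendor/modules.txt")
	if err != nil {
		fmt.Fprintln(os.Stderr, "read vendor/modules.txt:", err)
		os.Exit(1)
	}
	for _, mod := range []string{"github.com/modern-go/reflect2", "github.com/json-iterator/go"} {
		if v, ok := vendoredVersion(string(data), mod); ok {
			fmt.Printf("%s %s\n", mod, v)
		} else {
			fmt.Printf("%s not vendored\n", mod)
		}
	}
}
```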

Ⅴ. Anything else we need to know?

Ⅵ. Environment:

- Open-Local version: main branch

- OS (e.g. from /etc/os-release): Ubuntu 22.04

- Kernel (e.g. `uname -a`): 5.15.0-33

- Install tools:

- Others:

2. scheduler-policy-config.json

3. driver-registrar Logs

4. Agent Logs

5. scheduler-extender Logs

6. NodeLocalStorageInitConfig

7. Raw Device Of Worker

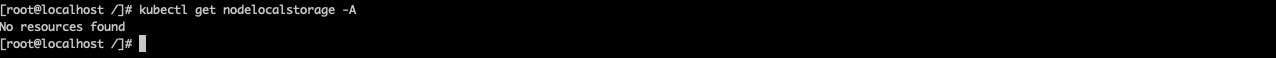

At this point, the scheduler-extender cache is as shown in Figures 3 and 4.