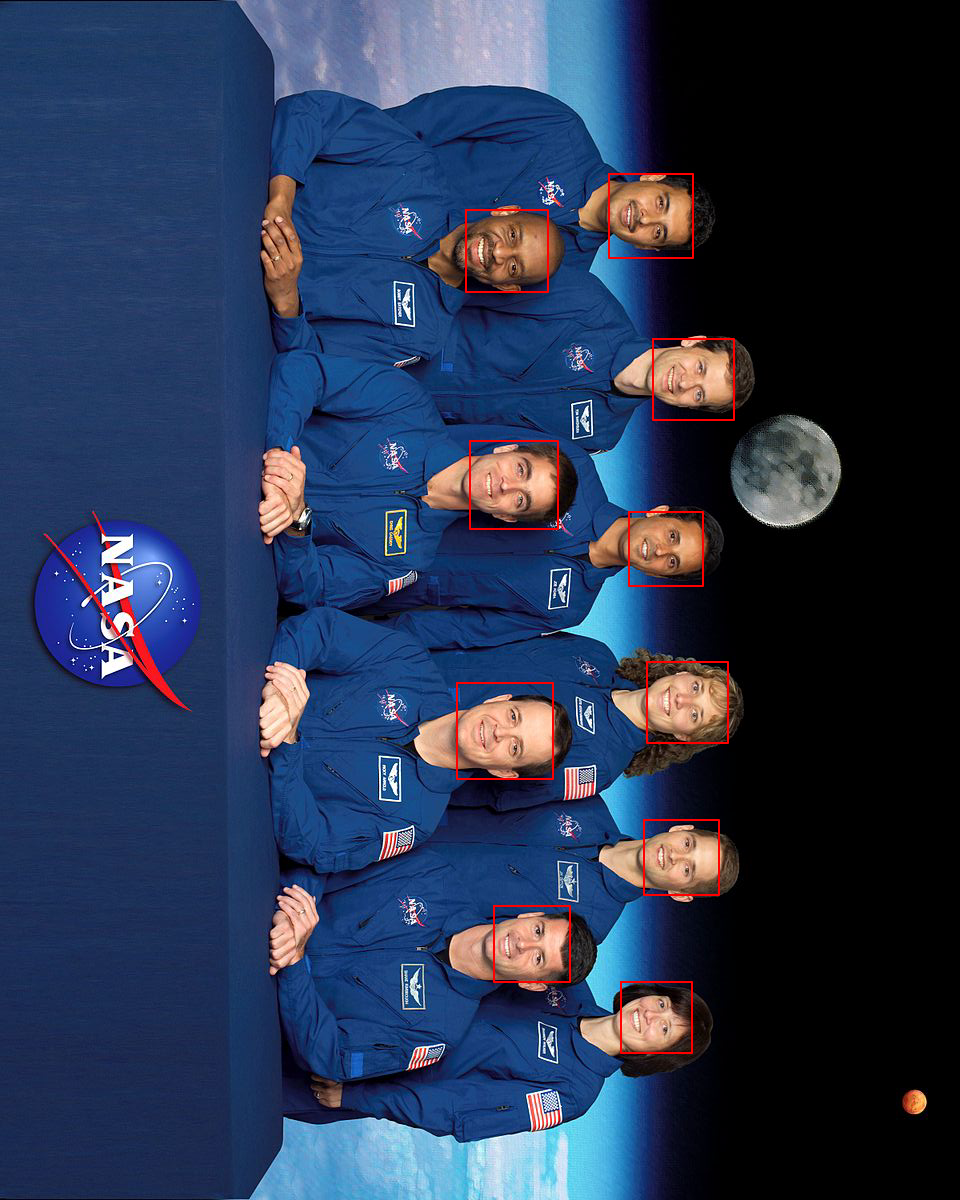

Pigo is a pure Go library for face detection, pupil/eye localization and facial landmark point detection, based on the Pixel Intensity Comparison-based Object detection paper (https://arxiv.org/pdf/1305.4537.pdf).

| Rectangle face marker | Circle face marker |
|---|---|
| | |

Motivation

Pigo was developed because almost all existing face detection solutions in the Go ecosystem are bindings to C/C++ libraries such as OpenCV or dlib. Calling a C program through cgo introduces significant latency and a noticeable performance trade-off. In many cases, installing OpenCV on various platforms is also cumbersome.

The Pigo library does not require any additional modules or third-party applications to be installed. However, if you wish to run the library in a real-time, webcam-based desktop application, you might need to have Python and OpenCV installed. See the "Real time face detection (running as a shared object)" section for more explanation.

Key features

- Does not require OpenCV or any 3rd party modules to be installed

- High processing speed

- There is no need for image preprocessing prior to detection

- There is no need for the computation of integral images, image pyramid, HOG pyramid or any other similar data structure

- The face detection is based on pixel intensity comparison encoded in the binary file tree structure

- Fast detection of in-plane rotated faces

- The library can detect faces even with eyeglasses

- Pupils/eyes localization

- Facial landmark points detection

- WebAssembly support 🎉

Todo

- Object detection and description

The library can also detect in-plane rotated faces. For this purpose, an -angle parameter has been added to the command line utility. The command below generates the following result (see the Usage section for all supported options).

$ pigo -in input.jpg -out output.jpg -cf cascade/facefinder -angle=0.8 -iou=0.01

| Input file | Output file |
|---|---|
| | |

Note: for in-plane rotated faces, the angle value should be adapted to the provided image.

Pupils / eyes localization

Starting from v1.2.0, Pigo offers pupil/eye localization capabilities. The implementation is based on the paper Eye pupil localization with an ensemble of randomized trees.

Check out this example for a realtime demo: https://github.com/esimov/pigo/tree/master/examples/puploc

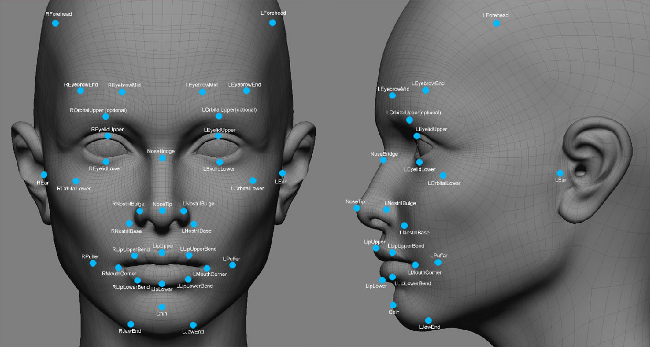

Facial landmark points detection

v1.3.0 marks a new milestone in the library's evolution: Pigo is now capable of facial landmark point detection. The implementation is based on the paper Fast Localization of Facial Landmark Points.

Check out this example for a realtime demo: https://github.com/esimov/pigo/tree/master/examples/facial_landmark

Install

Install Go, set your GOPATH, and make sure $GOPATH/bin is on your PATH.

$ export GOPATH="$HOME/go"

$ export PATH="$PATH:$GOPATH/bin"

Next download the project and build the binary file.

$ go get -u -f github.com/esimov/pigo/cmd/pigo

$ go install

Binary releases

If you do not have Go installed, or do not wish to install it, you can obtain the binary file from the releases folder.

The library is also available through snapcraft.

API

Below is a minimal example of using the face detection API.

First, load and parse the binary classifier, then convert the image to grayscale, and finally run the cascade function, which returns a slice containing the row, column, scale and detection score.

cascadeFile, err := ioutil.ReadFile("/path/to/cascade/file")
if err != nil {
	log.Fatalf("Error reading the cascade file: %v", err)
}

src, err := pigo.GetImage("/path/to/image")
if err != nil {
	log.Fatalf("Cannot open the image file: %v", err)
}

pixels := pigo.RgbToGrayscale(src)
cols, rows := src.Bounds().Max.X, src.Bounds().Max.Y

cParams := pigo.CascadeParams{
	MinSize:     20,
	MaxSize:     1000,
	ShiftFactor: 0.1,
	ScaleFactor: 1.1,

	ImageParams: pigo.ImageParams{
		Pixels: pixels,
		Rows:   rows,
		Cols:   cols,
		Dim:    cols,
	},
}

// Named p so the variable does not shadow the pigo package.
p := pigo.NewPigo()
// Unpack the binary file. This will return the number of cascade trees,
// the tree depth, the threshold and the prediction from tree's leaf nodes.
classifier, err := p.Unpack(cascadeFile)
if err != nil {
	log.Fatalf("Error unpacking the cascade file: %s", err)
}

angle := 0.0 // cascade rotation angle. 0.0 is 0 radians and 1.0 is 2*pi radians

// Run the classifier over the obtained leaf nodes and return the detection results.
// The result contains quadruplets representing the row, column, scale and detection score.
dets := classifier.RunCascade(cParams, angle)

// Cluster overlapping detections using an intersection over union (IoU) threshold of 0.2.
dets = classifier.ClusterDetections(dets, 0.2)

A note about imports: in order to decode the provided image you have to import image/jpeg or image/png (depending on the image type) as in the following example, otherwise you will get an "image: unknown format" error.

import (
	_ "image/jpeg"

	pigo "github.com/esimov/pigo/core"
)

Usage

A command line utility is bundled into the library.

$ pigo -in input.jpg -out out.jpg -cf cascade/facefinder

Supported flags:

$ pigo --help

┌─┐┬┌─┐┌─┐
├─┘││ ┬│ │
┴  ┴└─┘└─┘

Go (Golang) Face detection library.
    Version: 1.4.2

  -angle float
        0.0 is 0 radians and 1.0 is 2*pi radians
  -cf string
        Cascade binary file
  -flpc string
        Facial landmark points cascade directory
  -in string
        Source image (default "-")
  -iou float
        Intersection over union (IoU) threshold (default 0.2)
  -json string
        Output the detection points into a json file
  -mark
        Mark detected eyes (default true)
  -marker string
        Detection marker: rect|circle|ellipse (default "rect")
  -max int
        Maximum size of face (default 1000)
  -min int
        Minimum size of face (default 20)
  -out string
        Destination image (default "-")
  -plc string
        Pupils/eyes localization cascade file
  -scale float
        Scale detection window by percentage (default 1.1)
  -shift float
        Shift detection window by percentage (default 0.1)

Important notice: if you also wish to run the pupil/eye localization, use the -plc flag and provide a valid path to the pupil localization cascade file. The same applies to facial landmark point detection, except that the -flpc flag expects a directory containing the facial landmark point cascade files, found under cascade/lps.

CLI command examples

You can also read the input from stdin and write the output to stdout using pipes:

$ cat input/source.jpg | pigo -in - -out - -cf=/path/to/cascade > out.jpg

-in and -out default to -, so you can also use:

$ cat input/source.jpg | pigo >out.jpg -cf=/path/to/cascade

$ pigo -out out.jpg < input/source.jpg -cf=/path/to/cascade

Using an empty string as the value of the -out flag skips the image generation step. Combined with the -json flag, this writes the detection results to the specified JSON file. You can also pass - to the -json flag to write the detection coordinates to standard output (stdout).

Real time face detection (running as a shared object)

If you wish to test the library's real-time face detection capabilities, the examples folder contains a few demos written in Python.

But why Python you might ask? Because the Go ecosystem is (still) missing a cross platform and system independent library for accessing the webcam.

The Python program accesses the webcam and transfers the pixel data as a byte array through cgo, as a shared object, to the Go program where the core face detection happens. As you can imagine, this operation is not cost effective and results in lower frame rates than the library is capable of.

These demos were created before the WebAssembly port and are kept to show how the Pigo library can be integrated into other programming languages.

WASM (WebAssembly) support 🎉

Important note: at least Go 1.13 is required to run the WebAssembly demos!

Starting from v1.4.0, the library has been ported to WASM. This gives the library a huge performance gain in terms of real-time face detection capabilities.

WASM demo

To run the WASM demo, change to the wasm folder and type make.

For more details check the subpage description: https://github.com/esimov/pigo/tree/master/wasm.

Benchmark results

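Below are the benchmark results obtained by running Pigo against GoCV under the same conditions. In this run, Pigo took about 174 ms per operation versus about 414 ms for GoCV, roughly 2.4 times faster, and made no allocations.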

BenchmarkGoCV-4            3     414122553 ns/op         704 B/op          1 allocs/op
BenchmarkPIGO-4           10     173664832 ns/op           0 B/op          0 allocs/op
PASS
ok      github.com/esimov/gocv-test     4.530s

The code used for the above test can be found under the following link: https://github.com/esimov/pigo-gocv-benchmark

Author

- Endre Simo (@simo_endre)

License

Copyright © 2019 Endre Simo

This software is distributed under the MIT license. See the LICENSE file for the full license text.