crawlergo

A powerful browser crawler for web vulnerability scanners

English Document | 中文文档

crawlergo is a browser crawler that uses Chrome in headless mode to collect URLs. It hooks key points of the page during the DOM rendering stage, automatically fills in and submits forms, intelligently triggers JS events, and collects as many of the entry points exposed by the website as possible. The built-in URL de-duplication module filters out large numbers of pseudo-static URLs while keeping parsing and crawling fast on large websites, producing a high-quality collection of requests.

crawlergo currently supports the following features:

- chrome browser environment rendering

- Intelligent form filling, automated submission

- Full DOM event collection with automated triggering

- Smart URL de-duplication to remove most duplicate requests

- Intelligent page analysis and URL collection from JavaScript file content, page comments and robots.txt files, plus automatic fuzzing of common paths

- Host binding, with the Referer header fixed and added automatically

- Browser request proxy support

- Pushing results to passive web vulnerability scanners

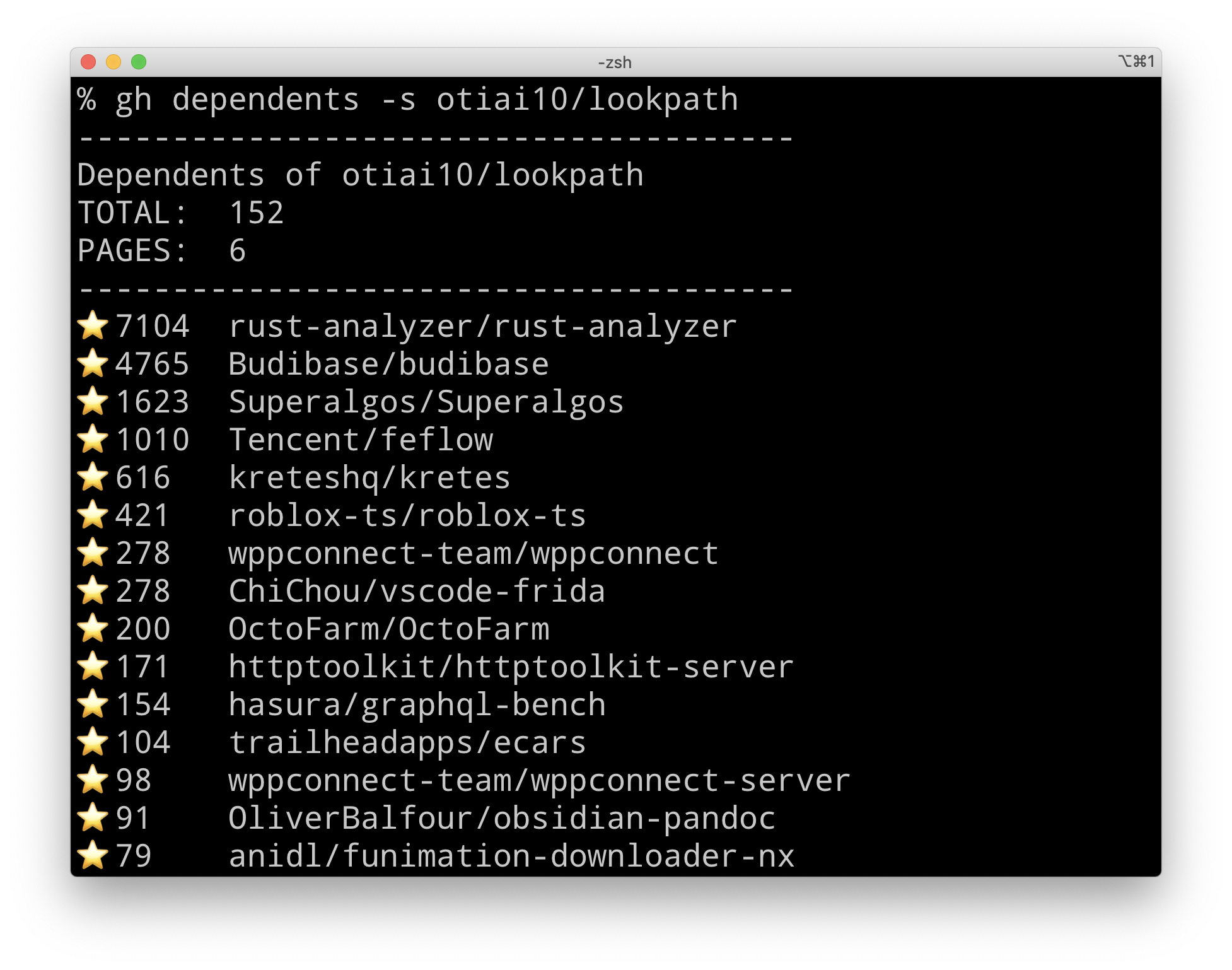

Screenshot

Installation

Please read and confirm the disclaimer carefully before installing and using crawlergo.

Build

cd crawlergo/cmd/crawlergo

go build crawlergo_cmd.go

- crawlergo only needs a Chrome environment to run. Download a recent version of Chromium, or download Linux version 79 directly.

- Download the latest release of crawlergo from the download page and extract it to any directory. On Linux or macOS, give the crawlergo binary executable permission (+x).

- Or you can modify the code and build it yourself.

If you are on Linux and Chrome reports missing dependencies, see TroubleShooting below.

Quick Start

Go!

Assuming your Chromium installation directory is /tmp/chromium/, open up to 10 tabs at the same time and crawl testphp.vulnweb.com:

./crawlergo -c /tmp/chromium/chrome -t 10 http://testphp.vulnweb.com/

Using Proxy

./crawlergo -c /tmp/chromium/chrome -t 10 --request-proxy socks5://127.0.0.1:7891 http://testphp.vulnweb.com/

Calling crawlergo with python

By default, crawlergo prints the results directly to the screen. The following sample sets the output mode to json and calls crawlergo from Python:

#!/usr/bin/python3
# coding: utf-8

import simplejson
import subprocess


def main():
    target = "http://testphp.vulnweb.com/"
    cmd = ["./crawlergo", "-c", "/tmp/chromium/chrome", "-o", "json", target]
    rsp = subprocess.Popen(cmd, stdout=subprocess.PIPE, stderr=subprocess.PIPE)
    output, error = rsp.communicate()
    # "--[Mission Complete]--" is the end-of-task separator string
    result = simplejson.loads(output.decode().split("--[Mission Complete]--")[1])
    req_list = result["req_list"]
    print(req_list[0])


if __name__ == '__main__':
    main()

Crawl Results

When the output mode is set to json, the returned result, after JSON deserialization, contains four parts:

- all_req_list: All requests found during this crawl task, including resources of any type from other domains.

- req_list: The current-domain results of this crawl task, with pseudo-static de-duplication applied and static resource links excluded. It is a subset of all_req_list.

- all_domain_list: List of all domains found.

- sub_domain_list: List of subdomains found.
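As a rough sketch of how these four parts might be consumed, the snippet below splits the output on the end-of-task separator and counts the entries in each list. The sample output is made up for illustration, and the "url" and "method" fields inside each request entry are assumptions rather than a documented schema.

```python
import json

SEPARATOR = "--[Mission Complete]--"
PARTS = ("all_req_list", "req_list", "all_domain_list", "sub_domain_list")


def parse_crawlergo_output(raw: str) -> dict:
    """Return the JSON document that follows the end-of-task separator."""
    _, found, tail = raw.partition(SEPARATOR)
    if not found:
        raise ValueError("separator not found; was the output mode set to json?")
    return json.loads(tail)


def summarize(result: dict) -> dict:
    """Count the entries in each of the four documented parts."""
    return {part: len(result.get(part) or []) for part in PARTS}


# Hypothetical output: log lines, the separator, then the JSON result.
sample = "some log line\n" + SEPARATOR + json.dumps({
    "all_req_list": [
        {"url": "http://testphp.vulnweb.com/", "method": "GET"},
        {"url": "http://testphp.vulnweb.com/style.css", "method": "GET"},
        {"url": "http://cdn.example.com/lib.js", "method": "GET"},
    ],
    "req_list": [{"url": "http://testphp.vulnweb.com/", "method": "GET"}],
    "all_domain_list": ["testphp.vulnweb.com", "cdn.example.com"],
    "sub_domain_list": ["testphp.vulnweb.com"],
})

print(summarize(parse_crawlergo_output(sample)))
```

Treating a missing or null list as empty keeps the summary usable when a crawl finds nothing for one of the parts.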

Examples

crawlergo returns the full request and URL, which can be used in a variety of ways:

- Used in conjunction with other passive web vulnerability scanners

  First, start a passive scanner and set its listening address to:

  http://127.0.0.1:1234/

  Next, assuming crawlergo is on the same machine as the scanner, start crawlergo with the parameter:

  --push-to-proxy http://127.0.0.1:1234/

- Host binding (not available in recent Chrome versions) (example)

- Custom Cookies (example)

- Regularly clean up zombie processes generated by crawlergo (example), contributed by @ring04h
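The scanner and cookie use cases can be combined when crawlergo is launched from a script. The sketch below only assembles the argument list; it assumes that cookies are supplied as a Cookie header through --custom-headers, and the helper name build_command and the cookie value are hypothetical.

```python
import json


def build_command(crawlergo, chrome, target, push_to=None, cookie=None, tabs=10):
    """Assemble a crawlergo argument list for use with subprocess."""
    cmd = [crawlergo, "-c", chrome, "-t", str(tabs)]
    if push_to:
        # Forward every crawled request to the passive scanner's listener.
        cmd += ["--push-to-proxy", push_to]
    if cookie:
        # --custom-headers expects a JSON-serialized header object.
        cmd += ["--custom-headers", json.dumps({"Cookie": cookie})]
    cmd.append(target)
    return cmd


cmd = build_command(
    "./crawlergo",
    "/tmp/chromium/chrome",
    "http://testphp.vulnweb.com/",
    push_to="http://127.0.0.1:1234/",
    cookie="session=example",  # hypothetical cookie value
)
print(cmd)
```

The resulting list can be passed to subprocess.Popen in the same way as in the Python sample earlier in this document.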

Bypass headless detect

crawlergo can bypass headless mode detection by default.

https://intoli.com/blog/not-possible-to-block-chrome-headless/chrome-headless-test.html

TroubleShooting

- 'Fetch.enable' wasn't found

  Fetch is a feature supported by newer versions of Chrome. If this error occurs, your Chrome version is too old; please upgrade it.

- chrome runs with missing dependencies such as xxx.so

  # Ubuntu
  apt-get install -yq --no-install-recommends \
      libasound2 libatk1.0-0 libc6 libcairo2 libcups2 libdbus-1-3 \
      libexpat1 libfontconfig1 libgcc1 libgconf-2-4 libgdk-pixbuf2.0-0 libglib2.0-0 libgtk-3-0 libnspr4 \
      libpango-1.0-0 libpangocairo-1.0-0 libstdc++6 libx11-6 libx11-xcb1 libxcb1 \
      libxcursor1 libxdamage1 libxext6 libxfixes3 libxi6 libxrandr2 libxrender1 libxss1 libxtst6 libnss3

  # CentOS 7
  sudo yum install pango.x86_64 libXcomposite.x86_64 libXcursor.x86_64 libXdamage.x86_64 libXext.x86_64 libXi.x86_64 \
      libXtst.x86_64 cups-libs.x86_64 libXScrnSaver.x86_64 libXrandr.x86_64 GConf2.x86_64 alsa-lib.x86_64 atk.x86_64 gtk3.x86_64 \
      ipa-gothic-fonts xorg-x11-fonts-100dpi xorg-x11-fonts-75dpi xorg-x11-utils xorg-x11-fonts-cyrillic xorg-x11-fonts-Type1 xorg-x11-fonts-misc -y

  sudo yum update nss -y

- Run prompt Navigation timeout / browser not found / don't know correct browser executable path

  Make sure the browser executable path is configured correctly: type chrome://version in the browser's address bar and find the executable file path listed there.
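When crawlergo is driven from a script, the browser path can also be checked before launching. This is a minimal sketch using only the Python standard library; the binary names "chromium" and "google-chrome" are common examples, not names crawlergo itself looks for.

```python
import os
import shutil


def resolve_browser(path_or_name):
    """Return an absolute path to an executable browser binary, or None."""
    if os.path.isfile(path_or_name) and os.access(path_or_name, os.X_OK):
        return os.path.abspath(path_or_name)
    # Fall back to a PATH lookup for bare command names.
    return shutil.which(path_or_name)


for candidate in ("/tmp/chromium/chrome", "chromium", "google-chrome"):
    print(candidate, "->", resolve_browser(candidate))
```

A result of None for every candidate suggests that the value passed to -c needs to be corrected before crawlergo is started.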

Parameters

Required parameters

- --chromium-path Path, -c Path: The path to the chrome executable. (Required)

Basic parameters

- --custom-headers Headers: Customize the HTTP headers. Pass the headers as a JSON-serialized string; they are defined globally and used for all requests. (Default: null)

- --post-data PostData, -d PostData: POST data. (Default: null)

- --max-crawled-count Number, -m Number: The maximum number of crawl tasks, to avoid long crawl times caused by pseudo-static URLs. (Default: 200)

- --filter-mode Mode, -f Mode: Filtering mode. simple: only static resources and duplicate requests are filtered. smart: also filters pseudo-static URLs. strict: stricter pseudo-static filtering rules. (Default: smart)

- --output-mode value, -o value: Result output mode. console: print the prettified results directly to the screen. json: print the JSON-serialized string of all results. none: don't print the output. (Default: console)

- --output-json filepath: Write the result to the specified file after JSON serialization. (Default: null)

- --request-proxy proxyAddress: socks5 proxy address. All network requests from crawlergo and the chrome browser are sent through the proxy. (Default: null)
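To make the term "pseudo-static" concrete, the sketch below collapses URLs that differ only in numeric path segments or in query parameter values. It is an illustration of the idea only and is not crawlergo's actual filtering algorithm; the function names and the example.com URLs are hypothetical.

```python
from urllib.parse import urlsplit, parse_qsl


def pseudo_static_key(url: str) -> str:
    """Reduce a URL to a shape that ignores numeric IDs and parameter values."""
    parts = urlsplit(url)
    segments = []
    for seg in parts.path.split("/"):
        stem, dot, ext = seg.rpartition(".")
        if dot and stem.isdigit():
            seg = "{int}." + ext  # e.g. 1001.html -> {int}.html
        elif seg.isdigit():
            seg = "{int}"  # e.g. /item/7 -> /item/{int}
        segments.append(seg)
    names = sorted({name for name, _ in parse_qsl(parts.query, keep_blank_values=True)})
    return f"{parts.netloc}{'/'.join(segments)}?{'&'.join(names)}"


def dedupe(urls):
    """Keep the first URL seen for each pseudo-static shape."""
    seen, kept = set(), []
    for url in urls:
        key = pseudo_static_key(url)
        if key not in seen:
            seen.add(key)
            kept.append(url)
    return kept


urls = [
    "http://example.com/news/1001.html",
    "http://example.com/news/1002.html",
    "http://example.com/item/7?id=1&page=2",
    "http://example.com/item/8?page=9&id=3",
    "http://example.com/about",
]
print(dedupe(urls))
```

In this sketch the two news pages and the two item pages each collapse to a single representative, which is the kind of reduction the smart and strict modes aim for on sites that generate thousands of near-identical URLs.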

Expand input URL

- --fuzz-path: Use the built-in dictionary for path fuzzing. (Default: false)

- --fuzz-path-dict: Customize the fuzz paths by passing in a dictionary file path, e.g. /home/user/fuzz_dir.txt. Each line of the file represents a path to be fuzzed. (Default: null)

- --robots-path: Resolve paths from the /robots.txt file. (Default: false)

Form auto-fill

- --ignore-url-keywords, -iuk: URL keywords that you don't want to visit, generally used to exclude logout links when customizing cookies. Usage: -iuk logout -iuk exit. (Default: "logout", "quit", "exit")

- --form-values, -fv: Customize the form fill values, set by text type. Supported types: default, mail, code, phone, username, password, qq, id_card, url, date and number. Text types are identified by keywords in the four attributes id, name, class and type of the input element. For example, to fill mailbox inputs with A and password inputs with B: -fv mail=A -fv password=B. The default type is the fill value used when the text type is not recognized, which is "Cralwergo". (Default: Cralwergo)

- --form-keyword-values, -fkv: Customize the form fill values, set by fuzzy keyword match. The keyword is matched against the four attributes id, name, class and type of the input element. For example, to fill 123456 where the keyword pass matches and admin where the keyword user matches: -fkv user=admin -fkv pass=123456. (Default: Cralwergo)
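Because -fv, -fkv and -iuk are repeated once per value, a small helper can expand dictionaries into the flag list. This is a sketch under the assumption that each flag takes a single key=value pair as described above; the helper name form_fill_args is hypothetical.

```python
# Text types listed for --form-values in this document.
FORM_TYPES = {
    "default", "mail", "code", "phone", "username", "password",
    "qq", "id_card", "url", "date", "number",
}


def form_fill_args(type_values=None, keyword_values=None, ignore_keywords=None):
    """Expand form-fill settings into repeated -fv / -fkv / -iuk arguments."""
    args = []
    for kind, value in (type_values or {}).items():
        if kind not in FORM_TYPES:
            raise ValueError(f"unsupported form type: {kind}")
        args += ["-fv", f"{kind}={value}"]
    for keyword, value in (keyword_values or {}).items():
        args += ["-fkv", f"{keyword}={value}"]
    for keyword in ignore_keywords or []:
        args += ["-iuk", keyword]
    return args


args = form_fill_args(
    type_values={"mail": "A", "password": "B"},
    keyword_values={"user": "admin", "pass": "123456"},
    ignore_keywords=["logout", "exit"],
)
print(" ".join(args))
```

The returned list can be spliced into the crawlergo command before the target URL.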

Advanced settings for the crawling process

- --incognito-context, -i: Start the browser in incognito mode. (Default: true)

- --max-tab-count Number, -t Number: The maximum number of tabs the crawler can open at the same time. (Default: 8)

- --tab-run-timeout Timeout: Maximum runtime for a single tab page. (Default: 20s)

- --wait-dom-content-loaded-timeout Timeout: The maximum time to wait for the page to finish loading. (Default: 5s)

- --event-trigger-interval Interval: The interval between automatically triggered events, generally used when a slow target network or DOM update conflicts cause URLs to be missed. (Default: 100ms)

- --event-trigger-mode Value: DOM event auto-trigger mode, either async or sync, for URLs missed because of DOM update conflicts. (Default: async)

- --before-exit-delay: Delay before closing chrome at the end of a single tab task. Used to wait for partial DOM updates and XHR requests to be captured. (Default: 1s)

Other

- --push-to-proxy: The listener address that receives the crawler results, usually the listener address of the passive scanner. (Default: null)

- --push-pool-max: The maximum concurrency when sending crawler results to the listening address. (Default: 10)

- --log-level: Logging level: debug, info, warn, error or fatal. (Default: info)

- --no-headless: Turn off chrome headless mode to visualize the crawling process. (Default: false)

Follow me

Weibo: @9ian1i Twitter: @9ian1i

Related articles: A browser crawler practice for web vulnerability scanning
