Cortex: horizontally scalable, highly available, multi-tenant, long term storage for Prometheus.

Cortex provides horizontally scalable, highly available, multi-tenant, long term storage for Prometheus.

- Horizontally scalable: Cortex can run across multiple machines in a cluster, exceeding the throughput and storage of a single machine. This enables you to send the metrics from multiple Prometheus servers to a single Cortex cluster and run "globally aggregated" queries across all data in a single place.

- Highly available: When run in a cluster, Cortex can replicate data between machines. This allows you to survive machine failure without gaps in your graphs.

- Multi-tenant: Cortex can isolate data and queries from multiple different independent Prometheus sources in a single cluster, allowing untrusted parties to share the same cluster.

- Long term storage: Cortex supports S3, GCS, Swift and Microsoft Azure for long term storage of metric data. This allows you to durably store data for longer than the lifetime of any single machine, and use this data for long term capacity planning.

Cortex is a CNCF incubation project used in several production systems including Weave Cloud and Grafana Cloud. Cortex is primarily used as a remote write destination for Prometheus, with a Prometheus-compatible query API.

Documentation

Read the getting started guide if you're new to the project. Before deploying Cortex with a permanent storage backend you should read:

- An overview of Cortex's architecture

- Getting started with Cortex

- Information regarding configuring Cortex

For a guide to contributing to Cortex, see the contributor guidelines.

Further reading

To learn more about Cortex, consult the following talks and articles.

Recent talks and articles

- Dec 2020 blog post "How AWS and Grafana Labs are scaling Cortex for the cloud"

- Oct 2020 blog post "How to switch Cortex from chunks to blocks storage (and why you won’t look back)"

- Oct 2020 blog post "Now GA: Cortex blocks storage for running Prometheus at scale with reduced operational complexity"

- Sep 2020 blog post "A Tale of Tail Latencies"

- Sep 2020 KubeCon talk "Scaling Prometheus: How We Got Some Thanos Into Cortex" (video, slides)

- Aug 2020 blog post "Scaling Prometheus: How we’re pushing Cortex blocks storage to its limit and beyond"

- Jul 2020 blog post "How blocks storage in Cortex reduces operational complexity for running Prometheus at massive scale"

- Jul 2020 PromCon talk "Sharing is Caring: Leveraging Open Source to Improve Cortex & Thanos" (video, slides)

- Mar 2020 blog post "Cortex: Zone Aware Replication"

- Mar 2020 blog post "How we're using gossip to improve Cortex and Loki availability"

- Jan 2020 blog post "The Future of Cortex: Into the Next Decade"

Previous talks and articles

- Nov 2019 KubeCon talks "Cortex 101: Horizontally Scalable Long Term Storage for Prometheus" (video, slides), "Configuring Cortex for Max Performance" (video, slides, write up) and "Blazin’ Fast PromQL" (slides, video, write up)

- Nov 2019 PromCon talk "Two Households, Both Alike in Dignity: Cortex and Thanos" (video, slides, write up)

- May 2019 KubeCon talks; "Cortex: Intro" (video, slides, blog post) and "Cortex: Deep Dive" (video, slides)

- Feb 2019 blog post & podcast; "Prometheus Scalability with Bryan Boreham" (podcast)

- Feb 2019 blog post; "How Aspen Mesh Runs Cortex in Production"

- Dec 2018 KubeCon talk; "Cortex: Infinitely Scalable Prometheus" (video, slides)

- Dec 2018 CNCF blog post; "Cortex: a multi-tenant, horizontally scalable Prometheus-as-a-Service"

- Nov 2018 CloudNative London meetup talk; "Cortex: Horizontally Scalable, Highly Available Prometheus" (slides)

- Nov 2018 CNCF TOC Presentation; "Horizontally Scalable, Multi-tenant Prometheus" (slides)

- Sept 2018 blog post; "What is Cortex?"

- Aug 2018 PromCon panel; "Prometheus Long-Term Storage Approaches" (video)

- Jul 2018 design doc; "Cortex Query Optimisations"

- Aug 2017 PromCon talk; "Cortex: Prometheus as a Service, One Year On" (videos, slides, write up part 1, part 2, part 3)

- Jun 2017 Prometheus London meetup talk; "Cortex: open-source, horizontally-scalable, distributed Prometheus" (video)

- Dec 2016 KubeCon talk; "Weave Cortex: Multi-tenant, horizontally scalable Prometheus as a Service" (video, slides)

- Aug 2016 PromCon talk; "Project Frankenstein: Multitenant, Scale-Out Prometheus": (video, slides)

- Jun 2016 design document; "Project Frankenstein: A Multi Tenant, Scale Out Prometheus"

Getting Help

If you have any questions about Cortex:

- Ask a question on the Cortex Slack channel. To invite yourself to the CNCF Slack, visit http://slack.cncf.io/.

- File an issue.

- Send an email to [email protected]

Your feedback is always welcome.

For security issues see https://github.com/cortexproject/cortex/security/policy

Community Meetings

The Cortex community call happens every two weeks on Thursday, alternating at 1200 UTC and 1700 UTC. To get a calendar invite join the google groups or check out the CNCF community calendar.

Meeting notes are held here.

Hosted Cortex (Prometheus as a service)

There are several commercial services where you can use Cortex on-demand:

Weave Cloud

Weave Cloud from Weaveworks lets you deploy, manage, and monitor container-based applications. Sign up at https://cloud.weave.works and follow the instructions there. Additional help can also be found in the Weave Cloud documentation.

Instrumenting Your App: Best Practices

Grafana Cloud

The Cortex project was started by Tom Wilkie (Grafana Labs' VP Product) and Julius Volz (Prometheus' co-founder) in June 2016. Employing 6 out of 8 maintainers for Cortex enables Grafana Labs to offer Cortex-as-a-service with exceptional performance and reliability. As the creators of Grafana, Loki, and Tempo, Grafana Labs can offer you the most wholistic Observability-as-a-Service stack out there.

For further information see Grafana Cloud documentation, tutorials, webinars, and KubeCon talks. Get started today and sign up here.

Amazon Managed Service for Prometheus (AMP)

Amazon Managed Service for Prometheus (AMP) is a Prometheus-compatible monitoring service that makes it easy to monitor containerized applications at scale. It is a highly available, secure, and managed monitoring for your containers. Get started here. To learn more about the AMP, reference our documentation and Getting Started with AMP blog.

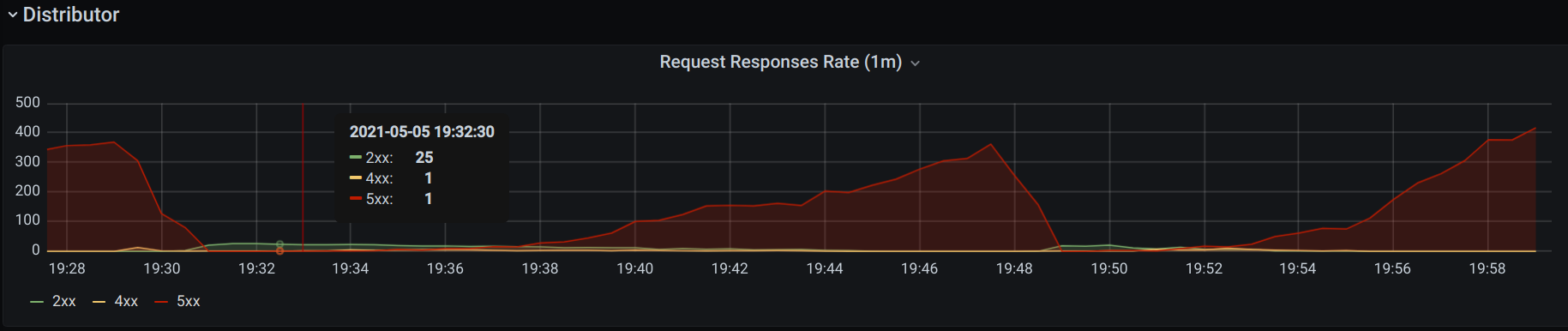

Queries for this graph look like:

Queries for this graph look like: