Sloth

Introduction

Use the easiest way to generate SLOs for Prometheus.

Sloth generates understandable, uniform and reliable Prometheus SLOs for any kind of service, using a simple SLO spec that results in multiple metrics and multi window multi burn alerts.

At this moment Sloth is focused on Prometheus; however, depending on demand and complexity, we may support more backends.

Features

- Simple, maintainable and understandable SLO spec.

- Reliable SLO metrics and alerts.

- Based on Google SLO implementation and multi window multi burn alerts framework.

- Autogenerates Prometheus SLI recording rules in different time windows.

- Autogenerates Prometheus SLO metadata rules.

- Autogenerates Prometheus SLO multi window multi burn alert rules (Page and warning).

- SLO spec validation.

- Customization of labels, disabling different types of alerts...

- A single way (uniform) of creating SLOs across all different services and teams.

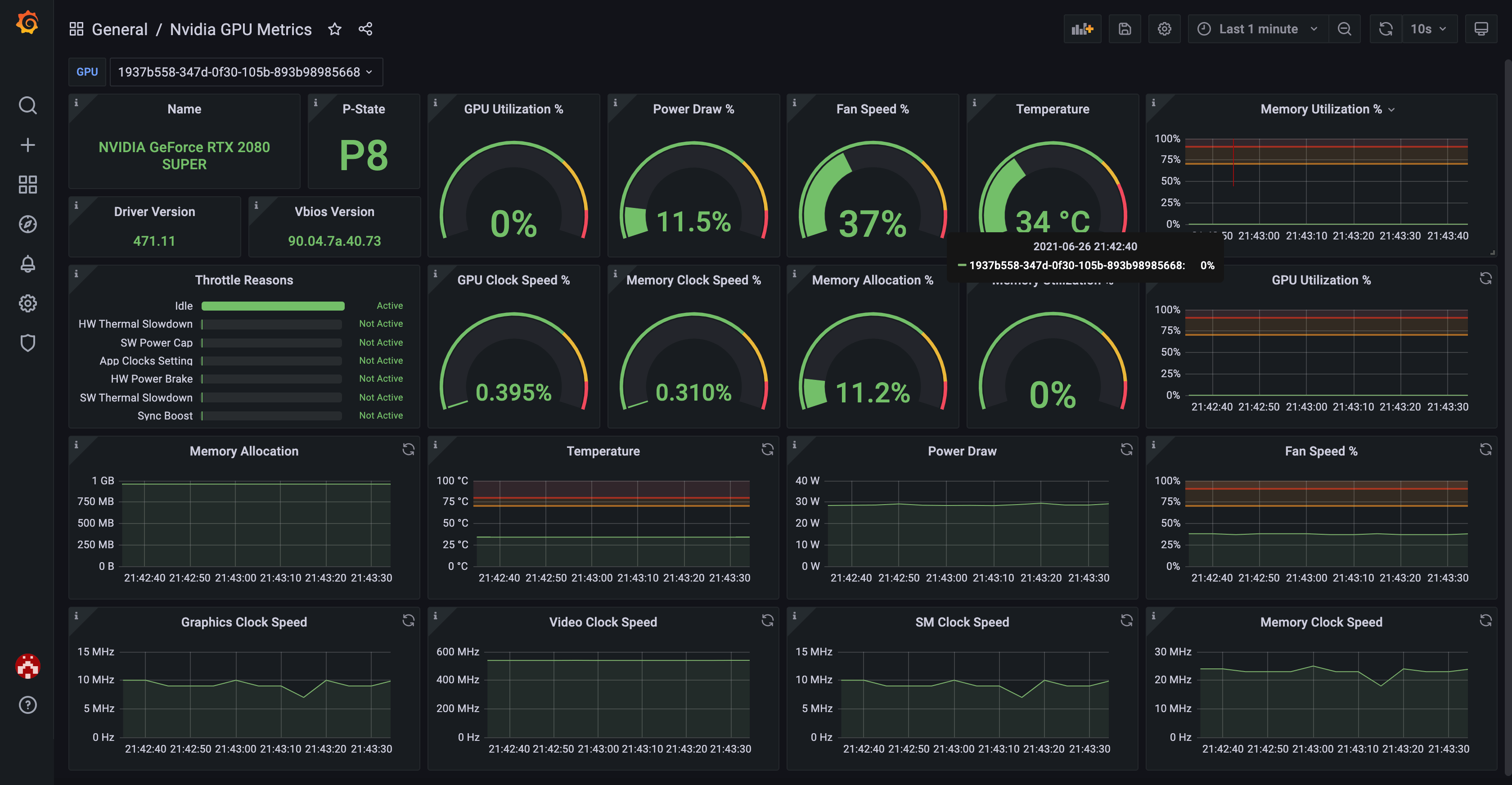

- Automatic Grafana dashboard to see all your SLOs state.

- Single binary and easy to use CLI.

- Kubernetes (Prometheus-operator) support.

Get Sloth

- Releases

- Docker images

git clone git@github.com:slok/sloth.git && cd ./sloth && make build && ls -la ./bin

Getting started

Release the Sloth!

sloth generate -i ./examples/getting-started.yml

version: "prometheus/v1"
service: "myservice"
labels:
  owner: "myteam"
  repo: "myorg/myservice"
  tier: "2"
slos:
  # We allow failing (5xx and 429) 1 request every 1000 requests (99.9%).
  - name: "requests-availability"
    objective: 99.9
    sli:
      events:
        error_query: sum(rate(http_request_duration_seconds_count{job="myservice",code=~"(5..|429)"}[{{.window}}]))
        total_query: sum(rate(http_request_duration_seconds_count{job="myservice"}[{{.window}}]))
    alerting:
      name: MyServiceAvailabilitySLO
      labels:
        category: "availability"
      annotations:
        # Overwrite default Sloth SLO alert summary on ticket and page alerts.
        summary: "High error rate on 'myservice' requests responses"
      page_alert:
        labels:
          severity: pageteam
          routing_key: myteam
      ticket_alert:
        labels:
          severity: "slack"
          slack_channel: "#alerts-myteam"

This would be the result you would obtain from the above spec example.

How does it work

At this moment Sloth uses Prometheus rules to generate SLOs. Based on the generated recording and alert rules it creates a reliable and uniform SLO implementation:

1 Sloth spec -> Sloth -> N Prometheus rules

The Prometheus rules that Sloth generates can be explained in 3 categories:

- SLIs: These rules are the base. They use the queries provided by the user to compute a value that shows the service's error level (availability). Sloth creates multiple rules for different time windows, and the alerts use these results.

- Metadata: These are informative metrics, like the remaining error budget, the SLO objective percent... They are very handy for SLO visualization, e.g. a Grafana dashboard.

- Alerts: These are the multiwindow-multiburn alerts that are based on the SLI rules.

Sloth takes the service level spec and, for each SLO in the spec, creates 3 rule groups, one per category above.
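
As a rough illustration of how one windowed query can turn into several SLI expressions, the sketch below substitutes the {{.window}} placeholder from the getting-started spec with a set of durations. The window list, the "(errors) / (total)" expression shape and the function names are assumptions made for this example; this is not Sloth's actual generator code.

```python
# Hypothetical illustration: expand a windowed SLI query template into
# one error-ratio expression per time window.
ERROR_QUERY = (
    'sum(rate(http_request_duration_seconds_count'
    '{job="myservice",code=~"(5..|429)"}[{{.window}}]))'
)
TOTAL_QUERY = (
    'sum(rate(http_request_duration_seconds_count'
    '{job="myservice"}[{{.window}}]))'
)

# Assumed window set; the real list may differ between Sloth versions.
WINDOWS = ["5m", "30m", "1h", "2h", "6h", "1d", "3d"]


def render(template: str, window: str) -> str:
    """Replace the {{.window}} placeholder with a concrete PromQL duration."""
    return template.replace("{{.window}}", window)


def sli_error_ratio_exprs(error_query: str, total_query: str, windows: list) -> dict:
    """Build one 'errors / total' expression per window."""
    return {
        w: f"({render(error_query, w)}) / ({render(total_query, w)})"
        for w in windows
    }


exprs = sli_error_ratio_exprs(ERROR_QUERY, TOTAL_QUERY, WINDOWS)
for window, expr in exprs.items():
    print(f"{window}: {expr}")
```

Each printed expression is the same query pair evaluated over a different window, which is what lets the alerts compare short and long windows of the same SLI.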

The generated rules share the same metric names across SLOs; the labels are the key to identifying the different services, SLOs... This is how we obtain a uniform way of describing all the SLOs across different teams and services.

To get all the available metric names created by Sloth, use this query:

count({sloth_id!=""}) by (__name__)

Modes

Generator

generate will generate Prometheus rules in different formats based on the specs. This mode only needs the CLI, so it's very useful in Gitops, CI, scripts or as a CLI in your toolbox.

Currently there are two types of specs supported by the generate command. Sloth will detect the input spec type and generate the output type accordingly:

Raw (Prometheus)

Check spec here: v1

Generates the Prometheus recording and alerting rules in standard Prometheus YAML format.

Kubernetes CRD (Prometheus-operator)

Check CRD here: v1

Generates Prometheus-operator CRD rules from a Sloth CRD spec. The Prometheus-operator CRDs are based on the Sloth CRD template.

The CRD doesn't need to be registered in any K8s cluster because the generation happens offline, in the CLI. A Kubernetes controller that makes this translation automatically inside the Kubernetes cluster is on the TODO list.

Examples

- Alerts disabled: Simple example that shows how to disable alerts.

- K8s apiserver: Real example of SLOs for a Kubernetes Apiserver.

- Home wifi: My home Ubiquiti Wifi SLOs.

- K8s Home wifi: Same as home-wifi but shows how to generate Prometheus-operator CRD from a Sloth CRD.

- Raw Home wifi: Example showing how to use raw SLIs instead of the common events using the home-wifi example.

The resulting generated SLOs are in examples/_gen.

F.A.Q

- Why Sloth

- SLI?

- SLO?

- Error budget?

- Burn rate?

- SLO based alerting?

- What are ticket and page alerts?

- Can I disable alerts?

- Grafana dashboard?

Why Sloth

Creating Prometheus rules for an SLI/SLO framework is hard, error prone and pure toil.

Sloth abstracts this task, and we also gain:

- Read friendliness: Easy to read and declare SLI/SLOs.

- Gitops: Easy to integrate with CI flows like validation, checks...

- Reliability and testing: The generated Prometheus rules are already known to work, so there is no need to write tests for them.

- Centralize features and error fixes: An update in Sloth would be applied to all the SLOs managed/generated with it.

- Standardize the metrics: Same conventions, automatic dashboards...

- Roll out future features for free with the same specs: e.g. automatic report creation.

SLI?

Service level indicator. It is a way of quantifying how your service should be responding to users.

TL;DR: What is good/bad service for your users. E.g.:

- Requests >=500 considered errors.

- Requests >200ms considered errors.

- Process executions with exit code >0 considered errors.

It is normally measured using events: good/bad-events / total-events.
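
A minimal sketch of the event-based calculation, assuming bad events are counted against total events. The function name and the choice to treat "no traffic" as zero errors are assumptions made for this example, not something Sloth prescribes.

```python
def sli_error_ratio(bad_events: int, total_events: int) -> float:
    """Fraction of events counted as errors.

    Assumption for this sketch: with no traffic at all we report 0.0
    (no errors) instead of dividing by zero.
    """
    if total_events == 0:
        return 0.0
    return bad_events / total_events


# 3 failed requests out of 1000 total requests.
ratio = sli_error_ratio(bad_events=3, total_events=1000)
availability_percent = (1 - ratio) * 100

print(f"error ratio: {ratio:.4f}")
print(f"availability: {availability_percent:.2f}%")
```

The same ratio works for any of the SLIs listed above (status codes, latency thresholds, exit codes); only the definition of a "bad" event changes.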

SLO?

Service level objective. A percentage that tells how many SLI errors your service can have in a specific period of time.

Error budget?

An error budget is the amount of errors (measured by the SLI) you can have in a specific period of time. It is determined by the SLO.

Let's see an example:

- SLI Error: Requests status code >= 500

- Period: 30 days

- SLO: 99.9%

- Error budget: 0.1% (100 - 99.9)

- Total requests in 30 days: 10000

- Available error requests: 10 (10000 * 0.1 / 100)

If we have more than 10 request responses with a >=500 status code, we would be burning more error budget than is available; if we have fewer errors, we would end the period without spending all the error budget.
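
The arithmetic of this example can be checked with a short sketch: with a 99.9% objective the budget is 100 - 99.9 = 0.1%, which is 10 out of 10000 requests. The function names are hypothetical, and results are rounded for display because 100 - 99.9 is not exactly representable in floating point.

```python
def error_budget_percent(slo_percent: float) -> float:
    """Error budget as a percentage: everything the SLO does not cover."""
    return 100.0 - slo_percent


def allowed_error_events(slo_percent: float, total_events: int) -> float:
    """How many events may fail in the period without breaking the SLO."""
    return total_events * error_budget_percent(slo_percent) / 100.0


budget = error_budget_percent(99.9)
allowed = allowed_error_events(99.9, 10_000)

# Round for display: floating point gives values very close to 0.1 and 10.
print(f"error budget: {round(budget, 4)}%")
print(f"allowed failed requests: {round(allowed, 2)}")
```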

Burn rate?

The speed at which you are consuming your error budget. This is key for SLO based alerting (Sloth will create all these alerts), because the alerts are triggered depending on how fast you are consuming the error budget.

Speed/rate examples:

- 1: You are consuming 100% of the error budget in the expected period (e.g. with a 30d period, the budget lasts 30 days).

- 2: You are consuming 200% of the error budget in the expected period (e.g. with a 30d period, the budget lasts 15 days).

- 60: You are consuming 6000% of the error budget in the expected period (e.g. with a 30d period, the budget lasts 12 hours).

- 1080: You are consuming 108000% of the error budget in the expected period (e.g. with a 30d period, the budget lasts 40 minutes).
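
The relation between burn rate and how long the budget lasts can be sketched as follows. It uses the common definition of burn rate as the observed error ratio divided by the error ratio the SLO allows; the function names are hypothetical.

```python
from datetime import timedelta


def burn_rate(error_ratio: float, slo_percent: float) -> float:
    """Observed error ratio divided by the error ratio the SLO allows."""
    budget_ratio = (100.0 - slo_percent) / 100.0
    return error_ratio / budget_ratio


def time_to_exhaustion(period: timedelta, rate: float) -> timedelta:
    """How long the whole error budget lasts at a constant burn rate."""
    if rate <= 0:
        raise ValueError("burn rate must be positive")
    return period / rate


period = timedelta(days=30)
for rate in (1, 2, 60, 1080):
    print(f"burn rate {rate}: budget lasts {time_to_exhaustion(period, rate)}")

# With a 99.9% SLO, a sustained 0.2% error ratio burns the budget at about 2x.
print(round(burn_rate(0.002, 99.9), 2))
```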

SLO based alerting?

With SLO based alerting you will get better alerting than with a regular alerting system, because it:

- Alerts on symptoms (SLIs), not causes.

- Triggers at different levels (warning/ticket and critical/page).

- Takes into account time and quantity, that is, the speed of errors and the number of errors in a specific time.

The result of these is:

- Correct time to trigger alerts (important == fast, not so important == slow).

- Reduce alert fatigue.

- Reduce false positives and negatives.

What are ticket and page alerts?

MWMB type alerting is based on two kinds of alerts, ticket and page:

- page: Critical alerts that are normally used to wake someone up, notify on important channels, trigger oncall...

- ticket: Warning alerts that normally open tickets, post messages on non-important Slack channels...

These are triggered in different ways: page alerts trigger faster but require a higher error budget burn rate, whereas ticket alerts trigger more slowly and require a lower, sustained error budget burn rate.
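
The difference between the two kinds of alerts can be sketched as a multiwindow, multi-burn-rate check. The window pairs and burn-rate factors below are the ones commonly quoted from the Google SRE Workbook for a 30-day period and are used here as an assumption; the rules Sloth actually generates may use different values. All names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BurnWindow:
    severity: str      # "page" or "ticket"
    long_window: str   # window that confirms the burn is significant
    short_window: str  # window that confirms the burn is still happening
    factor: float      # burn-rate threshold for this pair of windows


# Assumed thresholds (SRE Workbook style); not read from Sloth itself.
BURN_WINDOWS = [
    BurnWindow("page", "1h", "5m", 14.4),
    BurnWindow("page", "6h", "30m", 6.0),
    BurnWindow("ticket", "1d", "2h", 3.0),
    BurnWindow("ticket", "3d", "6h", 1.0),
]


def firing_severities(error_ratios: dict, slo_percent: float) -> set:
    """Return the severities whose long AND short windows exceed the threshold.

    error_ratios maps a window name (e.g. "5m") to the SLI error ratio
    measured over that window.
    """
    budget_ratio = (100.0 - slo_percent) / 100.0
    fired = set()
    for w in BURN_WINDOWS:
        threshold = w.factor * budget_ratio
        if (error_ratios[w.long_window] > threshold
                and error_ratios[w.short_window] > threshold):
            fired.add(w.severity)
    return fired


# A sudden spike: high error ratio in the last hour, quiet before that.
spike = {"5m": 0.02, "1h": 0.02, "30m": 0.0005, "6h": 0.0005,
         "2h": 0.0005, "1d": 0.0005, "3d": 0.0005}
print(firing_severities(spike, slo_percent=99.9))
```

Requiring both windows keeps a page from firing on an old incident that has already recovered, while the slower ticket windows catch a low but constant burn.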

Can I disable alerts?

Yes, set disable: true under page_alert and ticket_alert in the alerting section of the SLO.

Grafana dashboard?

Check grafana-dashboard, this dashboard will load the SLOs automatically.