Prometheus Common Data Exporter

Prometheus Common Data Exporter parses JSON, XML, YAML or other formats of data from multiple sources (such as HTTP responses, local files, TCP responses and UDP responses) into Prometheus metric data.

Build

General

make

Build the Docker image

make && docker build -t data_exporter:0.2.0 .

Run

Standard start

./data_exporter --config.file="data_exporter.yaml"

Debug the configuration file

./data_exporter --config.file="data_exporter.yaml" --log.level=debug

Run the examples

cd examples
nohup python3 -m http.server -b 127.0.0.1 10101 &  # start an HTTP server in the background; remember to stop it when you finish testing
../data_exporter
# in a new terminal window:
curl 127.0.0.1:9116/metrics

Run with Docker

docker run --rm -d -p 9116:9116 --name data_exporter -v `pwd`:/etc/data_exporter/ microops/data_exporter:0.2.0 --config.file=/etc/data_exporter/config.yml

Configuration

collects:
  - name: "test-http"
    relabel_configs: [ ]
    data_format: "json" # source data format / data matching mode
    datasource:
      - type: "file"
        url: "../examples/my_data.json"
      - type: "http"
        url: "https://localhost/examples/my_data.json"
        relabel_configs: [ ]
    metrics: # metric matching rules
      - name: "Point1"
        relabel_configs: # post-process the matched data and labels; same usage as Prometheus relabel_configs
          - source_labels: [ __name__ ]
            target_label: name
            regex: "([^.]+)\\.metrics\\..+"
            replacement: "$1"
            action: replace
          - source_labels: [ __name__ ]
            target_label: __name__
            regex: "[^.]+\\.metrics\\.(.+)"
            replacement: "server_$1"
            action: replace
        match: # matching rules
          datapoint: "data|@expand|@expand|@to_entries:name:value" # data block matching; each data block is the raw data of one metric
          labels: # label matching
            __value__: "value"
            __name__: "name"
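To make the effect of the two relabel_configs rules above concrete, here is a minimal Go sketch of the Prometheus `replace` action for a single source label. It is applied to one data point that the datapoint expression would produce from data shaped like the JSON example in the json section. It is an illustration, not data_exporter's code: `relabelReplace` is a hypothetical helper, and it leaves out separators, multiple source labels and the other relabel actions.

```go
package main

import (
	"fmt"
	"regexp"
)

// relabelReplace is a simplified sketch of the Prometheus "replace" action for
// a single source label. As in Prometheus, the regex is anchored at both ends
// and $1, $2, ... in the replacement refer to its capture groups. Separators,
// multiple source labels and the other actions are deliberately left out.
func relabelReplace(labels map[string]string, source, target, regex, replacement string) {
	re := regexp.MustCompile("^(?:" + regex + ")$")
	value := labels[source]
	match := re.FindStringSubmatchIndex(value)
	if match == nil {
		return // no match: the labels are left unchanged
	}
	labels[target] = string(re.ExpandString(nil, replacement, value, match))
}

func main() {
	// One data point produced by "data|@expand|@expand|@to_entries:name:value"
	// from data shaped like the json example: name "server1.metrics.CPU", value "16".
	labels := map[string]string{"__name__": "server1.metrics.CPU", "__value__": "16"}

	// The two relabel_configs rules of metric "Point1", applied in order.
	relabelReplace(labels, "__name__", "name", `([^.]+)\.metrics\..+`, "$1")
	relabelReplace(labels, "__name__", "__name__", `[^.]+\.metrics\.(.+)`, "server_$1")

	fmt.Println(labels["__name__"], labels["name"], labels["__value__"]) // server_CPU server1 16
}
```

Because the rules run in order, the first one still sees the original `__name__` when it extracts `name`. If they were swapped, the first regex would no longer match `server_CPU` and `name` would not be set.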

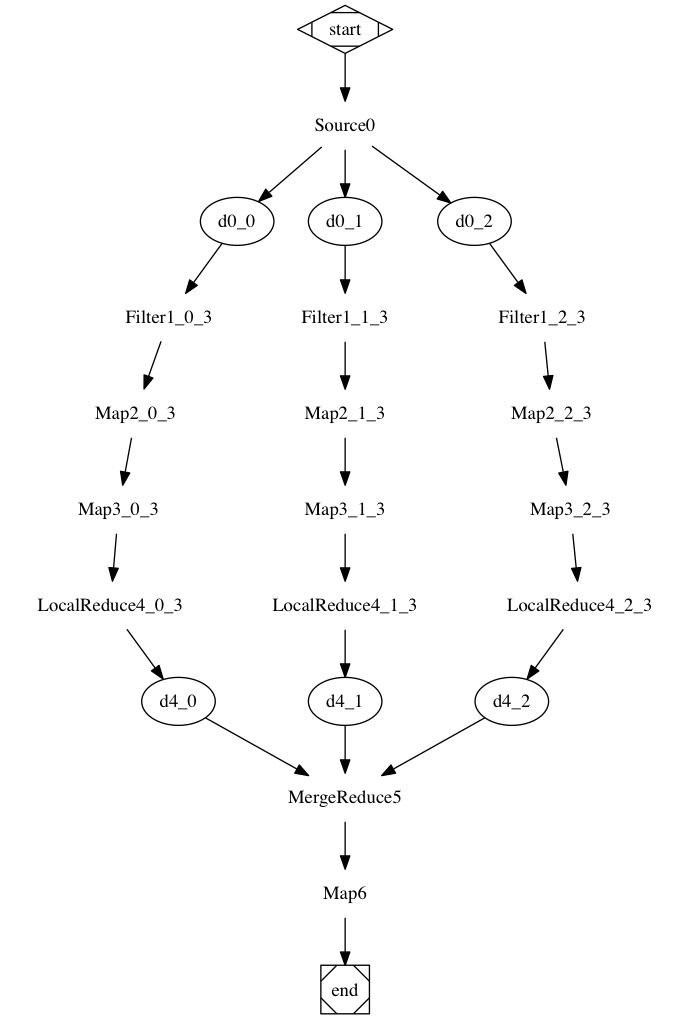

Workflow

Data sources

file

datasource:
  - type: "file"
    name: # data source name
    max_content_length: # maximum number of bytes to read; defaults to 102400000
    relabel_configs: [ , ... ] # see https://prometheus.io/docs/prometheus/latest/configuration/configuration/#relabel_config
    timeout: # defaults to 30s and must not be less than 1ms; see https://prometheus.io/docs/prometheus/latest/configuration/configuration/#duration
    read_mode: # read mode, stream-line|full-text; defaults to full-text
    url: "../examples/weather.xml"

http

datasource:
  - type: "http"
    name: # data source name
    max_content_length: # maximum number of bytes to read; defaults to 102400000
    relabel_configs: [ , ... ] # see https://prometheus.io/docs/prometheus/latest/configuration/configuration/#relabel_config
    timeout: # defaults to 30s and must not be less than 1ms; see https://prometheus.io/docs/prometheus/latest/configuration/configuration/#duration
    read_mode: # read mode, stream-line|full-text; defaults to full-text
    url: "http://127.0.0.1:2001/weather.xml"
    http:
      # HTTP basic authentication
      basic_auth:
        username:
        password:
        password_file:
      # `Authorization` header configuration
      authorization:
        type: # authorization type; defaults to Bearer
        credentials:
        credentials_file:
      oauth2: # OAuth2 configuration; see https://prometheus.io/docs/prometheus/latest/configuration/configuration/#oauth2
      proxy_url: # proxy URL
      follow_redirects: # whether to follow redirects; defaults to true
      tls_config: # TLS configuration; see https://prometheus.io/docs/prometheus/latest/configuration/configuration/#tls_config
      body: string # HTTP request body
      headers: { : , ... } # custom HTTP headers
      method: # HTTP request method: GET/POST/PUT...
      valid_status_codes: [ ,... ] # valid status codes; defaults to 200~299

tcp

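datasource:
  - type: "tcp"
    name: # data source name
    max_content_length: # maximum number of bytes to read; defaults to 102400000
    relabel_configs: [ , ... ] # see https://prometheus.io/docs/prometheus/latest/configuration/configuration/#relabel_config
    timeout: # defaults to 30s and must not be less than 1ms; see https://prometheus.io/docs/prometheus/latest/configuration/configuration/#duration
    read_mode: # read mode, stream-line|full-text; defaults to full-text
    url: "127.0.0.1:2001"
    tcp:
      tls_config: # TLS configuration; see https://prometheus.io/docs/prometheus/latest/configuration/configuration/#tls_config
      send: # the value of send can be a string, [string, ...], {"msg": ,"delay": } or [{"msg": ,"delay": }, ...]
        - msg: # message to send
          delay: # time to wait after sending; defaults to 0; the sum of all delays must not exceed timeout; see https://prometheus.io/docs/prometheus/latest/configuration/configuration/#duration
      max_connect_time: # maximum time to establish the connection (excluding data transfer); if the connection is still not established after this time, the request fails. Defaults to 3s
      max_transfer_time: # maximum message transfer time; once exceeded, reading stops and the connection is closed
      end_of: # end-of-message marker; once it is read, reading stops and the connection is closed. Messages are line-buffered, so end_of cannot span multiple lines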

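Note: end_of and max_transfer_time control when the connection is closed (that is, when the message transfer is considered complete). When the end_of marker is matched, or the transfer time reaches max_transfer_time, the connection is closed and no further data is received, but no error is raised. It is recommended to rely mainly on end_of and to set max_transfer_time to a generous value.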

udp

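datasource:
  - type: "udp"
    name: # data source name
    max_content_length: # maximum number of bytes to read; defaults to 102400000
    relabel_configs: [ , ... ] # see https://prometheus.io/docs/prometheus/latest/configuration/configuration/#relabel_config
    timeout: # defaults to 30s and must not be less than 1ms; see https://prometheus.io/docs/prometheus/latest/configuration/configuration/#duration
    read_mode: # read mode, stream-line|full-text; defaults to full-text
    url: "127.0.0.1:2001"
    udp:
      send: # the value of send can be a string, [string, ...], {"msg": ,"delay": } or [{"msg": ,"delay": }, ...]
        - msg: # message to send
          delay: # time to wait after sending; defaults to 0; the sum of all delays must not exceed timeout; see https://prometheus.io/docs/prometheus/latest/configuration/configuration/#duration
      max_connect_time: # maximum time to establish the connection (excluding data transfer); if the connection is still not established after this time, the request fails. Defaults to 3s
      max_transfer_time: # maximum message transfer time; once exceeded, reading stops and the connection is closed
      end_of: # end-of-message marker; once it is read, reading stops and the connection is closed. Messages are line-buffered, so end_of cannot span multiple lines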

Note: UDP does not currently support TLS.

Labels

Labels generally follow the Prometheus conventions, with a few additional special labels:

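- __namespace__, __subsystem__, __name__
  - The values of __namespace__ and __subsystem__ are optional
  - The value of __name__ is required
  - __namespace__, __subsystem__ and __name__ are joined with underscores to form the metric's fully qualified name (fqName, i.e. the metric name)

- __value__: required, the metric value

- __time_format__, __time__
  - The value of __time_format__ is optional
  - The value of __time__ is optional; if it is empty or no timestamp is matched, the resulting metric carries no timestamp
  - If __time__ is a Unix timestamp string (in seconds, milliseconds or nanoseconds), __time_format__ does not need to be specified
  - If __time__ is a time string in RFC3339Nano format (RFC3339 is also accepted), __time_format__ does not need to be specified
  - If __time__ is a time string in any other format, __time_format__ must be specified (refer to the Go source code)

- __help__: optional, the metric's help text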

relabel_configs

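See the official Prometheus documentation for relabel_config.

Metric matching syntax

- datapoint: data point/block matching; each data point/block is the raw data of one metric
  - If the value is empty, all data is matched
- labels: a map whose keys are label names and whose values are the match expressions that produce the label values; if an expression yields several results, only the first one is used

Data matching modes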

json

Example

- Data

{
  "code": 0,
  "data": {
    "server1": {
      "metrics": {
        "CPU": "16",
        "Memory": 68719476736
      }
    },
    "server2": {
      "metrics": {
        "CPU": "8",
        "Memory": 34359738368
      }
    }
  }
}

- Configuration

match: # matching rules
  datapoint: "data|@expand|@expand|@to_entries:name:value"
  labels:
    __value__: "value"
    __name__: "name"

Notes

- The syntax follows gjson overall
- Adds the modifier expand
  - Expands a map by one level; details below
- Adds the modifier to_entries
  - Converts a map into an array; details below

expand

Original data:

{
  "server1": {
    "metrics": {
      "CPU": "16",
      "Memory": 68719476736
    }
  },
  "server2": {
    "metrics": {
      "CPU": "8",
      "Memory": 34359738368
    }
  }
}

Data after expanding with @expand:

{
  "server1.metrics": {
    "CPU": "16",
    "Memory": 68719476736
  },
  "server2.metrics": {
    "CPU": "8",
    "Memory": 34359738368
  }
}
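The behaviour of @expand shown above can be sketched in plain Go with `encoding/json`. This is a hypothetical re-implementation for illustration, not the gjson modifier data_exporter registers: `expand` is a made-up name, and keeping values that are not objects unchanged is an assumption, since the examples only show nested objects.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// expand lifts every object-valued entry one level up, joining the parent and
// child keys with ".". Non-object values are kept as-is (an assumption).
func expand(in map[string]any) map[string]any {
	out := make(map[string]any)
	for key, value := range in {
		child, ok := value.(map[string]any)
		if !ok {
			out[key] = value
			continue
		}
		for childKey, childValue := range child {
			out[key+"."+childKey] = childValue
		}
	}
	return out
}

func main() {
	raw := `{"server1":{"metrics":{"CPU":"16","Memory":68719476736}},
	         "server2":{"metrics":{"CPU":"8","Memory":34359738368}}}`
	var data map[string]any
	if err := json.Unmarshal([]byte(raw), &data); err != nil {
		panic(err)
	}
	once, _ := json.Marshal(expand(data))          // same shape as the @expand output above
	twice, _ := json.Marshal(expand(expand(data))) // what "@expand|@expand" yields
	fmt.Println(string(once))
	fmt.Println(string(twice))
}
```

Applying it twice, as `data|@expand|@expand` does in the configuration example, leaves keys such as `server1.metrics.CPU`, which is the form the relabel rules in that example expect.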

to_entries

Original data:

{
  "server1": {
    "metrics": {
      "CPU": "16",
      "Memory": 68719476736
    }
  },
  "server2": {
    "metrics": {
      "CPU": "8",
      "Memory": 34359738368
    }
  }
}

Data after applying @to_entries:

[
  {
    "key": "server1",
    "value": {
      "metrics": {
        "CPU": "16",
        "Memory": 68719476736
      }
    }
  },
  {
    "key": "server2",
    "value": {
      "metrics": {
        "CPU": "8",
        "Memory": 34359738368
      }
    }
  }
]

Data after applying @to_entries:name:val:

[
  {
    "name": "server1",
    "val": {
      "metrics": {
        "CPU": "16",
        "Memory": 68719476736
      }
    }
  },
  {
    "name": "server2",
    "val": {
      "metrics": {
        "CPU": "8",
        "Memory": 34359738368
      }
    }
  }
]

Data after applying @to_entries:-:val:

[
  {
    "metrics": {
      "CPU": "16",
      "Memory": 68719476736
    }
  },
  {
    "metrics": {
      "CPU": "8",
      "Memory": 34359738368
    }
  }
]

Data after applying @to_entries::-:

[
  "server1",
  "server2"
]
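The four @to_entries variants above can be summarised in one small Go function. This is a hypothetical sketch inferred from the examples, not data_exporter's modifier: `toEntries` is a made-up name. It treats an empty name as the default `key`/`value`, `-` as the key name as "values only" and `-` as the value name as "keys only". Unlike gjson, which keeps document order, it sorts keys because Go maps are unordered.

```go
package main

import (
	"encoding/json"
	"fmt"
	"sort"
)

// toEntries sketches @to_entries:<keyName>:<valueName> as shown above.
// An empty name falls back to "key"/"value"; "-" as the key name yields
// bare values and "-" as the value name yields bare keys.
func toEntries(in map[string]any, keyName, valueName string) []any {
	if keyName == "" {
		keyName = "key"
	}
	if valueName == "" {
		valueName = "value"
	}
	keys := make([]string, 0, len(in))
	for k := range in {
		keys = append(keys, k)
	}
	sort.Strings(keys) // Go maps are unordered; sort for a stable result
	out := make([]any, 0, len(keys))
	for _, k := range keys {
		switch {
		case keyName == "-":
			out = append(out, in[k])
		case valueName == "-":
			out = append(out, k)
		default:
			out = append(out, map[string]any{keyName: k, valueName: in[k]})
		}
	}
	return out
}

func main() {
	raw := `{"server1":{"metrics":{"CPU":"16"}},"server2":{"metrics":{"CPU":"8"}}}`
	var data map[string]any
	if err := json.Unmarshal([]byte(raw), &data); err != nil {
		panic(err)
	}
	// @to_entries, @to_entries:name:val, @to_entries:-:val, @to_entries::-
	for _, names := range [][2]string{{"", ""}, {"name", "val"}, {"-", "val"}, {"", "-"}} {
		out, err := json.Marshal(toEntries(data, names[0], names[1]))
		if err != nil {
			panic(err)
		}
		fmt.Println(string(out))
	}
}
```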

yaml

YAML is converted to JSON internally before processing; refer to the json section.

xml

XML is parsed with the etree library.

- Configuration:

- name: "weather - week"
  match:
    datapoint: "//china[@dn='week']/city/weather"
    labels:
      __value__: "{{ .Text }}"
      name: '{{ ((.FindElement "../").SelectAttr "quName").Value }}'
      __name__: "week"
      path: "{{ .GetPath }}"

- Configuration notes
  - datapoint: the document is searched with etree.Element.FindElements
  - labels: values are parsed with Go template syntax; the template data is the matched etree.Element object
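How a label template is evaluated against the matched element can be sketched with Go's `text/template`. To keep the example self-contained it does not use etree: `element` is a hypothetical stand-in for `*etree.Element` that only provides the two methods used by the `__value__` and `path` labels, and `renderLabels` is a made-up helper. The element's text and path are invented sample values.

```go
package main

import (
	"fmt"
	"strings"
	"text/template"
)

// element is a hypothetical stand-in for *etree.Element that only provides
// the two methods used by the example labels: Text and GetPath.
type element struct {
	text string
	path string
}

func (e element) Text() string    { return e.text }
func (e element) GetPath() string { return e.path }

// renderLabels executes every label template with the matched element as data.
func renderLabels(labels map[string]string, el element) (map[string]string, error) {
	out := make(map[string]string, len(labels))
	for name, src := range labels {
		tmpl, err := template.New(name).Parse(src)
		if err != nil {
			return nil, err
		}
		var sb strings.Builder
		if err := tmpl.Execute(&sb, el); err != nil {
			return nil, err
		}
		out[name] = sb.String()
	}
	return out, nil
}

func main() {
	// Hypothetical matched element; a real one would come from FindElements.
	el := element{text: "25", path: "/china/city/weather"}
	out, err := renderLabels(map[string]string{
		"__value__": "{{ .Text }}",
		"__name__":  "week",
		"path":      "{{ .GetPath }}",
	}, el)
	if err != nil {
		panic(err)
	}
	fmt.Println(out["__name__"], out["__value__"], out["path"]) // week 25 /china/city/weather
}
```

A label without template actions, such as `__name__: "week"`, renders to itself, so constant labels and templated labels can share the same map.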

regex

Regular-expression matching with Perl syntax

- name: "server cpu"
  relabel_configs:
    - source_labels: [ __raw__ ]
      target_label: __value__
      regex: ".*cpu=(.+?)[!/].*"
    - source_labels: [ __raw__ ]
      target_label: name
      regex: ".*@\\[(.+?)].*"
    - target_label: __name__
      replacement: "cpu"
  match:
    datapoint: "@.*!"
    labels:
      __raw__: ".*"

- To match across lines, use a pattern of the form (?s:.+); the s flag makes . also match newlines (\n).

Named group matching

- name: regex - memory
  relabel_configs:
    - target_label: __name__
      replacement: memory
  match:
    datapoint: '@\[(?P.+?)].*/ts=(?P<__time__>[0-9]+)/.*!'
    labels:
      __value__: memory=(?P<__value__>[\d]+)

- When labels use named-group matching, the group name must be the same as the label name; otherwise the whole match result is used.
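The named-group rule can be sketched with Go's `regexp`, whose `(?P<name>...)` syntax is the one used in the configuration. `labelValue` is a hypothetical helper and the input record is made up. Taking only the first match follows the labels description in the Metric matching syntax section; everything else is an assumption about how data_exporter applies the rule.

```go
package main

import (
	"fmt"
	"regexp"
)

// labelValue sketches the rule above: if pattern has a named group whose name
// equals the label name, that group's text is the label value; otherwise the
// whole match is used. Only the first match is considered.
func labelValue(label, pattern, raw string) (string, bool) {
	re := regexp.MustCompile(pattern)
	m := re.FindStringSubmatch(raw)
	if m == nil {
		return "", false
	}
	if i := re.SubexpIndex(label); i > 0 {
		return m[i], true
	}
	return m[0], true // no group named after the label: whole match
}

func main() {
	// Hypothetical data point.
	raw := "@[server1]/cpu=12/ts=1700000000/memory=1024!"
	value, _ := labelValue("__value__", `memory=(?P<__value__>[\d]+)`, raw)
	ts, _ := labelValue("__time__", `/ts=(?P<__time__>[0-9]+)/`, raw)
	whole, _ := labelValue("value", `memory=(?P<__value__>[\d]+)`, raw)
	fmt.Println(value, ts, whole) // 1024 1700000000 memory=1024
}
```

The third call shows the pitfall the note warns about: the label is `value` but the group is `__value__`, so the label receives `memory=1024` instead of the number.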